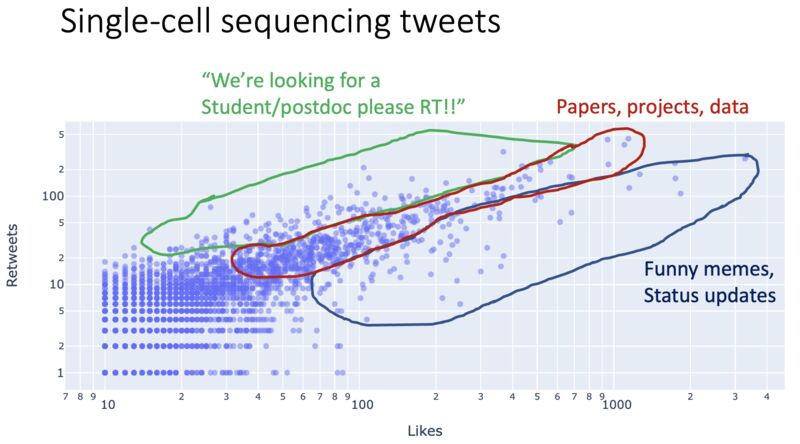

Social media posts

I meant what I said and I said what I meant. An elephant's faithful one-hundred percent!

Dr. Seuss, Horton Hatches the Egg


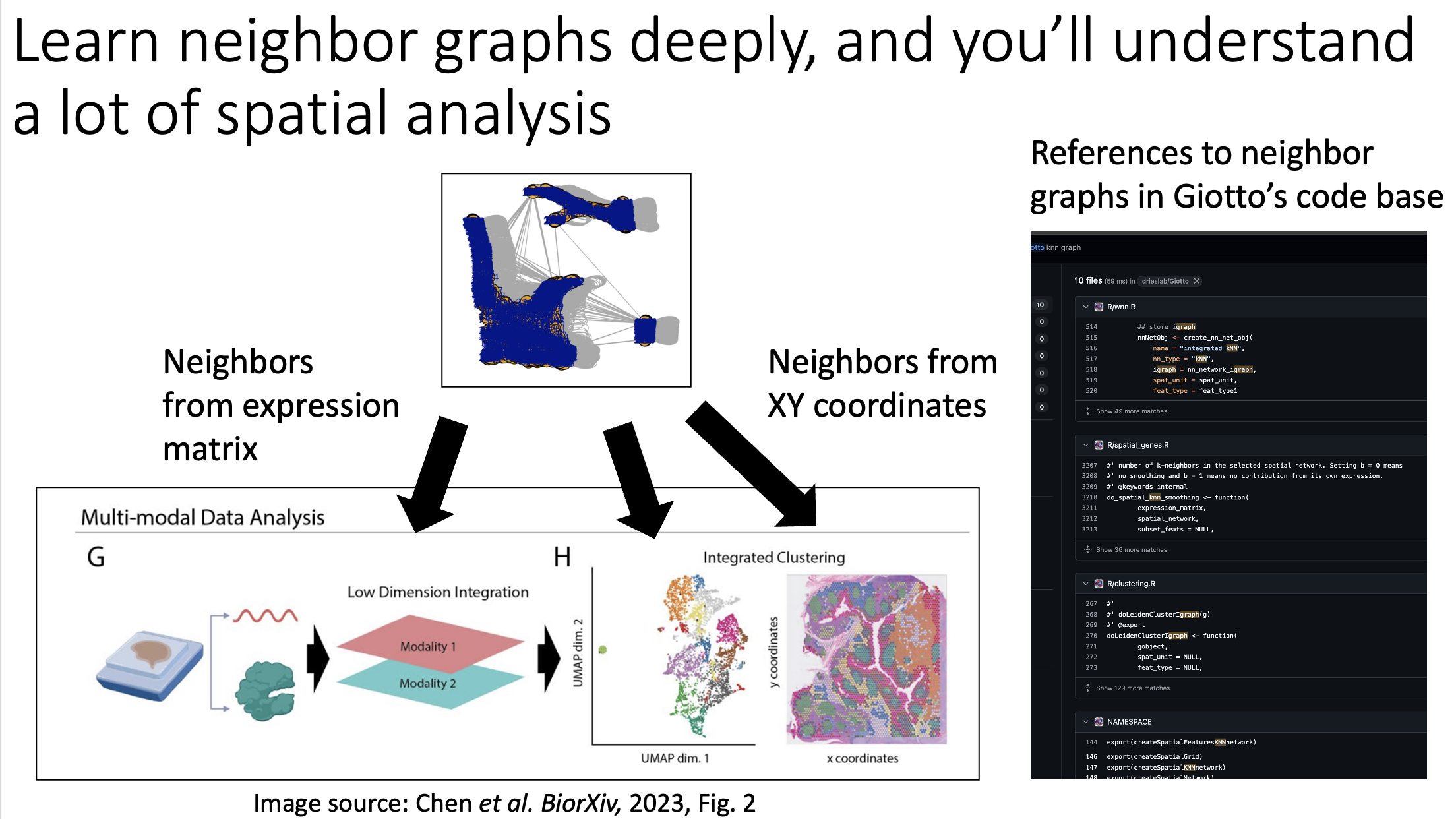

KNN sleepwalk and related

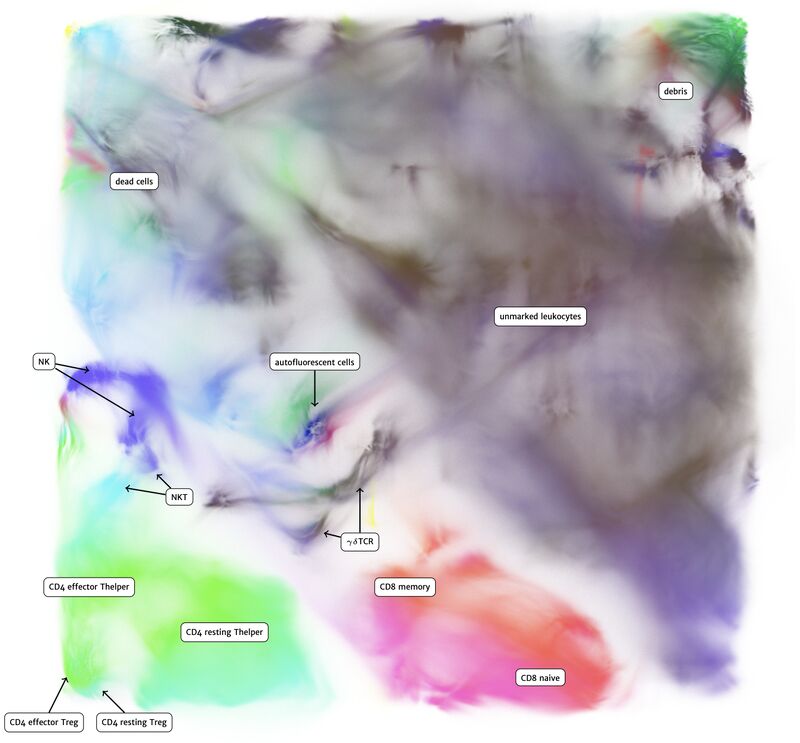

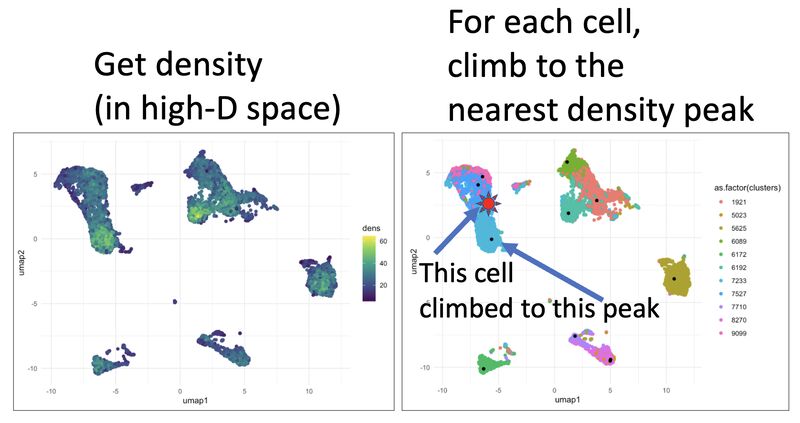

A lot of my social media content has revolved around a tool I built called KNN sleepwalk, which allows you to look at the difference between the K-nearest neighbors (KNN) of a given data point in the embedding space and those in the original high-dimensional space. This kind of intuition is especially important in high-dimensional flow/CyTOF data, where there is sometimes a temptation to gate directly on the embedding itself. These posts show why one should exercise caution when doing so. You can use the method here.

KNN Sleepwalk Dash app

Hi friends, I finally (after several years) turned my KnnSleepwalk package into a web app that anyone can use with a single click. This will give you intuition as to what "resolution" your embeddings (e.g. UMAP) have in each region of each dataset.

Backstory:

KnnSleepwalk was my answer to a question posed to me in the fall of 2017: "yes, but how precise are those (t-SNE at the time) maps actually?" Since the All of Us dataset issues in early 2024, this question has become mainstream, and has led to many shouting matches online.

How I solved it:

My solution was to visualize the K-nearest neighborhoods (KNN) of the embedding space (gif, left side) and compare them to the KNN of the original feature space, as visualized on the embedding (gif, right side). You can see that the resolution is often not that great, and this differs depending on where you are on the map.
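The comparison described above can be sketched in a few lines of Python. This is a minimal sketch, not the KnnSleepwalk or Dash app code: it assumes the original feature space and the 2D embedding are NumPy arrays with cells in the same row order, it uses brute-force Euclidean distances, and the function names are hypothetical.

```python
import numpy as np


def knn_indices(X: np.ndarray, cell: int, k: int) -> np.ndarray:
    """Indices of the k nearest neighbors of `cell` in X (Euclidean), excluding the cell itself."""
    d = np.linalg.norm(X - X[cell], axis=1)
    d[cell] = np.inf  # never count the query cell as its own neighbor
    return np.argsort(d)[:k]


def neighborhood_overlap(X_high: np.ndarray, X_embed: np.ndarray, cell: int, k: int) -> float:
    """Fraction of the cell's original-space KNN that are also among its embedding-space KNN."""
    knn_high = knn_indices(X_high, cell, k)    # right panel: neighbors from the original feature space
    knn_embed = knn_indices(X_embed, cell, k)  # left panel: neighbors from the embedding itself
    return len(np.intersect1d(knn_high, knn_embed)) / k


def overlap_per_cell(X_high: np.ndarray, X_embed: np.ndarray, k: int) -> np.ndarray:
    """Overlap for every cell, e.g. to see how preservation differs across regions of the map."""
    return np.array([neighborhood_overlap(X_high, X_embed, c, k) for c in range(len(X_high))])
```

Hovering over a cell corresponds to choosing `cell`; the two neighbor sets are what get highlighted on each side. Setting `k = round(0.01 * n_cells)` mirrors the "top 1%" convention used in the PBMC 3k post. For 100k+ cells, the brute-force search would need to be swapped for a KD-tree or an approximate nearest-neighbor index.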

What's new here:

The package was originally a "hack" of the distance matrix and coloring scheme of the brilliant "sleepwalk" app developed by Anders Biostat that I became aware of in the late 2010s.

Here, I rewrote the whole thing from scratch in Python and JavaScript, which allowed me to turn it into a Dash app. This in turn allowed me to host it on Plotly Cloud, which gives all of you access in a single click.

How to use:

The local version is available via GitHub, which I link in the comments. This allows you to scale up to large (100k+ cells) datasets. The online version handles up to 50k cells, and you will have the option to subsample your dataset accordingly. While this is not ideal, it does give you the intuition that my program is intended to provide.

What you need: csv files of your original feature space (e.g. surface markers for flow/CyTOF, top n PCs for single-cell sequencing). Upload them to the app and run it. If you press the "run" button without any dataset uploaded, it will run on an example 1,000-cell CyTOF dataset.

This is a version 0. I am probably posting this too early, but I am so excited that this actually works that I'm just giving it out now (there were tons of revisions to make it functional beyond a few thousand cells). So if you run into any issues, just send me a DM. Or a pull request.

I have a very talented bioengineering intern (Arianna Aalami) till the end of the month, so the more iterations we can do now, the better.

Questions, comments, or feature requests? Just comment below or send a DM.

Thanks, and I hope you all have a great day.

comment

Web app is here: https://knn-sleepwalk-dash-app.plotly.app/

GitHub repo is here: https://github.com/tjburns08/knn_sleepwalk_dash_app

If you want to talk further, book a call (see link under my profile tab).

KNN Sleepwalk for spatial: Xenium

Below is a quick way to get hover-linked interaction between UMAP coordinates (or similar) and XY coordinates for your spatial data to see patterns that you may have missed.

What this is:

The image below is a 10x Xenium brain dataset. The left side is a UMAP from the expression data. The right side is the XY coordinates.

With this tool, you hover your cursor over the UMAP and it lights up the corresponding XY coordinates for those cells, changing in real time. This allows you to find subtleties that you might otherwise miss at the cluster level.

You can use this today. Just go to my KnnSleepwalk package (GitHub link in comments) and run the BiaxialSleepwalk function.

The bigger picture:

Based on recent conversations with spatial experts, we need more hover-based interactive data analysis in our pipelines. The Vitessce package shows what's possible, but it does one-to-one linking rather than neighborhood-to-neighborhood linking, which makes it harder to see patterns (example in comments).

DM me or comment if:

- You're good with interactive data frameworks (e.g. d3.js), especially those that allow for GPU acceleration (anything involving WebGL, like regl-scatterplot).

- You have ideas/feature requests.

- You want help implementing this type of infrastructure into your workflows.

Thank you and I hope you all have a great day.

comment

My KnnSleepwalk package is here: https://github.com/tjburns08/KnnSleepwalk. You'll want to use the function BiaxialSleepwalk for this one.

Vitessce example of UMAP-to-image correspondence without nearest neighbor functionality: https://vitessce.io/#?dataset=marshall-2022

KNN Sleepwalk for spatial: IMC

You can now use my KnnSleepwalk package to interrogate your spatial data. KnnSleepwalk allows you to interact with your data simply by hovering your cursor over the visualizations. The gif below shows an Imaging Mass Cytometry cancer dataset.

Left: a biaxial with CDH1 (E-Cadherin) on the x axis and CD68 on the y axis. Right: the spatial coordinates of each cell.

Simply dragging the cursor shows you spatial differences that you might have missed if you were simply manually gating on the left, or relying on a color palette on the right.

If you work with spatial data: what UI/UX features would you want to see? The feedback I've already gotten is that this needs to work with very, very large datasets.

If you are leading a team: let's talk strategy. Tools like this could save you lots of time and provide more clarity in each decision cycle.

The big picture: based on the reception this tool and similar tools of mine have gotten in the past few years, there is an unmet need for interactive hover-and-see tools in single-cell and spatial analysis. We need to add this kind of functionality to our user interfaces.

The link to the package is in the comments. Thank you and I hope you all have a great day.

comment

Link to KnnSleepwalk: https://github.com/tjburns08/KnnSleepwalk

Link to virtual office hours: https://calendly.com/burnslsc-info/30min

Also: I'm collecting public spatial datasets, and it's not straightforward. Any help here would be appreciated.

KNN Sleepwalk colored by ranks

UMAP shuffles nearest neighbor rank. Check out David Novak's update to my KNN Sleepwalk package, which shows this.

Quick review of KNN Sleepwalk:

- Purpose: determine how well nonlinear dimensionality reduction tools preserve a cell's nearest neighbors from the original feature space, cell by cell.

- Inputs: original feature matrix (markers if flow/CyTOF, top n PCs if single-cell sequencing).

- Output: an interactive map that runs in the browser. Hover your cursor over a cell and it highlights its neighbors in embedding space, and neighbors in original feature space (often quite different).

What we see in this version:

- The rank of nearest neighbors (closest, second closest, etc.) is not preserved, at least in this example of UMAP with CyTOF data.
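One way to quantify this kind of rank shuffling: take a cell's k nearest neighbors in the original space, ordered closest first, look up where each of them ranks in embedding space, and compute a Spearman correlation between the two rankings. This is a sketch assuming NumPy/SciPy and brute-force distances; it is not necessarily how the rank-colored version of KNN Sleepwalk computes or displays ranks, and the function names are hypothetical.

```python
import numpy as np
from scipy.stats import spearmanr


def distance_ranks(X: np.ndarray, cell: int) -> np.ndarray:
    """Rank of every cell by distance to `cell`: 0 is the cell itself, 1 its closest neighbor, etc.

    Assumes no exact duplicate cells, so the query cell is the only one at distance 0.
    """
    d = np.linalg.norm(X - X[cell], axis=1)
    order = np.argsort(d, kind="stable")
    ranks = np.empty(len(X), dtype=int)
    ranks[order] = np.arange(len(X))
    return ranks


def rank_preservation(X_high: np.ndarray, X_embed: np.ndarray, cell: int, k: int):
    """Spearman rho between original-space and embedding ranks of the cell's k nearest original-space neighbors."""
    r_high = distance_ranks(X_high, cell)
    r_embed = distance_ranks(X_embed, cell)
    knn_high = np.argsort(r_high)[1:k + 1]  # closest first, skipping the cell itself
    rho, _ = spearmanr(r_high[knn_high], r_embed[knn_high])
    return rho, r_embed[knn_high]
```

A rho near 1 means the neighbor ordering survives the embedding; values near 0 mean the ordering is shuffled even if the neighbors stay nearby. The returned embedding ranks can also serve directly as a color scale.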

What this means for users:

- In general, I would discourage subsetting/gating directly in UMAP space.

- If you have to do this, I would not trust any distinctions made within islands, unless they are made in the original feature space (e.g. Naive vs Memory CD4 in the PBMC 3k dataset, if you know what I'm talking about).

What I am pushing for:

- Native plugins in FlowJo, Cytobank, OMIQ, Seurat, and whichever other tools people are using to analyze their flow/CyTOF/single-cell data.

- More "interpretability" work for the tools that we regularly use. For inspiration, look at what Anthropic is doing to try to understand its LLMs (link in comments).

Next step:

If you do single-cell analysis, use my package (link in comments). If you are a bioinformatics tool builder or researcher, look into interpretability work. If you have any feedback, comment, DM me, or sign up for my online office hours.

comment

KNN Sleepwalk can be found here: https://github.com/tjburns08/KnnSleepwalk

Anthropic's interpretability work can be found here: https://www.anthropic.com/research/tracing-thoughts-language-model

My office hours: https://calendly.com/burnslsc-info/30min

Original KNN sleepwalk reveal

Do you need quick and easy intuition around how exact your single-cell embeddings are? Check out knn_sleepwalk, a wrapper I wrote around the sleepwalk R package. Hover the cursor over any cell in your embedding, and it will show you the cell's k-nearest neighbors computed from the original feature space (as opposed to the embedding space). Below is a UMAP of 10,000 cells in CyTOF data with a k of 100. Note that the neighbors are not always nearby. Be careful if you want to gate/cluster on the embedding! https://lnkd.in/eeqRBdSn

KNN sleepwalk: Biaxial-UMAP interface

Flow/CyTOF users and leaders: have you ever wanted to know exactly where a cell on a biaxial plot is on a corresponding UMAP and vice versa? I built a tool just for you:

Below is my KNN Sleepwalk tool adapted to compare any plot with any plot. The k-nearest neighbors (KNN) of a given cell are computed in the plot on the left, and the corresponding cells are visualized in the plot on the right.

Here, we have a CyTOF whole blood dataset. A CD3 x CD19 biaxial plot is the "root" plot, from which the KNN are computed. The plot on the right is a UMAP, and the corresponding cells are being visualized directly on it.

Having an interface like this is one way (of many) to prevent biologists from over-interpreting their dimensionality reduction plots. Thus, I hope that down the line, this biaxial-UMAP real time functionality is available for anyone doing any sort of high-dimensional flow analysis, whether you're doing manual gating or exploratory data analysis.

Note that we are just looking at a biaxial vs UMAP. We can do anything vs anything. This includes biaxial vs biaxial. Note also that we can compare a "root" plot to multiple plots in real time.

Credit to S. Ovchinnikova and S. Anders for developing Sleepwalk (link in comments), from which I have built these additional functionalities and use cases.

I am still building this thing out, so if you have any particular feature requests, please comment or DM me. This tool is for you. Bioinformaticians who are interested in helping out, please DM me. I hope you have a great day.

KNN sleepwalk: Two UMAPs in light of All of Us research program controversy

In light of recent scrutiny around UMAP, coming from its controversial use in the All of Us Research Program, I refactored my KNN Sleepwalk project (which I started a year ago) to better reflect the limits of UMAP. Let me explain:

This is the PBMC 3k dataset (2700 cells), which is a flagship single-cell sequencing dataset. To the left, hovering the cursor over each cell gives you the top 1% nearest neighbors (27) of that cell in UMAP space. To the right, you can see the 27 nearest neighbors of that same cell calculated from the first 10 principal components, from which you do the clustering and dimension reduction in single-cell sequencing (you can think of it as making the data flow/CyTOF-like, and then doing flow/CyTOF-like analysis on it).

You will notice that the nearest neighbors in high-dimensional space are often quite far from the cell in question on the map, which speaks to the limited precision of the map itself. This is worth thinking about when you're looking at the clusters you've made on the map, or thinking about gating on the map directly.

The bigger picture here is that I'm getting UMAP to talk about itself…to tell me its own limits. This is one way you can better understand what a model can and cannot do. I encourage everyone using UMAP or any complex visualization to do similar things with it. Scientists, PIs, and leaders: please make sure you have a healthy dose of skepticism around tools like these. They can be useful, but they can also be misinterpreted or over-interpreted.

Kudos to Svetlana Ovchinnikova and Simon Anders of Center for Molecular Biology of the University of Heidelberg for developing Sleepwalk, which I re-purposed here to visualize the K-nearest neighbors (they developed it to visualize distances). Link in the comments, along with my re-working of it so you can do this on your own work.

If you have questions about UMAP or similar tools, or just want to vent, please feel free to comment or DM me.

KFN sleepwalk, two UMAPs

One way to understand how much global information UMAP can (and cannot) preserve: look at the K-farthest neighbors (KFN) of cells in UMAP space versus high-dimensional space. Here is what I mean:

Below is a UMAP from the flagship "PBMC 3k" single-cell RNA sequencing dataset, with 2700 cells. I am using my modification of Sleepwalk (by S. Ovchinnikova and S. Anders, link in comments) to highlight the top 10% farthest neighbors (270) for each cell the cursor is on. This is what is meant by KFN. Left side is the KFN of UMAP space, right side is the KFN of the first 10 principal components, from which you do the clustering and dimension reduction in single-cell sequencing.

The first thing to notice is that the KFN in UMAP space and high-dimensional space look nothing like each other, pointing to limitations in UMAP's ability to preserve global information.

The second thing to notice is that there is information that is just hard to capture in 2 dimensions. In particular, there is a region to the middle right of the UMAP that seems to be the farthest away from the majority of the dataset, including cells that are quite nearby in UMAP space. One way to make sense of this is to imagine a third dimension where the cells are pointing outward and far away from the rest of the data. But note that in reality we're dealing with 8 extra dimensions here, not 1 extra dimension. Thus, there will be all kinds of complexity at the global level that is hard to capture in 2 dimensions.

UMAP claims to capture global structure better than t-SNE, and this topic is a rabbit hole once you start looking at initialization steps for the respective tools. But the point is that global structure is very complex, so even if a tool does a better job than another tool at capturing global structure in 2 dimensions, it doesn't mean that it's perfect. Or anywhere near perfect. Don't let claims like these bias you, as they initially biased me.

This post is a followup to my previous "KNN sleepwalk" post, where I compare the K-nearest neighbors of UMAP space versus high-dimensional space directly on the UMAP. If you missed that, please go to the link in the comments.

If you want to use this KFN (and the respective KNN) sleepwalk tool for your data and work, please go to the project's GitHub, which I will also link in the comments. If you want me to walk you through its use, just send me a direct message. Thank you and I hope you all have a great day.

KFN sleepwalk, t-SNE and UMAP

As requested, here are the k-farthest neighbors of a CyTOF dataset side by side between t-SNE and UMAP. The cell the cursor is on within the UMAP will map to the corresponding cell on the t-SNE map. Note that the farthest neighbors are all over the place on UMAP as well. Case in point: just because it's UMAP doesn't mean the arbitrary island placement has been solved.

But again, don't take my word for it. Use the tool and analyze your data here: https://lnkd.in/eeqRBdSn. For some helpful slides, go here: https://lnkd.in/eivsbAfE

KFN sleepwalk, t-SNE

The k-farthest neighbors of a CyTOF dataset, visualized on a t-SNE map, are all over the place. Why? Because t-SNE isn't optimized to capture global information. The position of the islands relative to each other doesn't mean much. Keep that in mind when interpreting these embeddings. To run this on your own data, for whatever embedding algorithms you're using, visit my knnsleepwalk project here: https://lnkd.in/eeqRBdSn

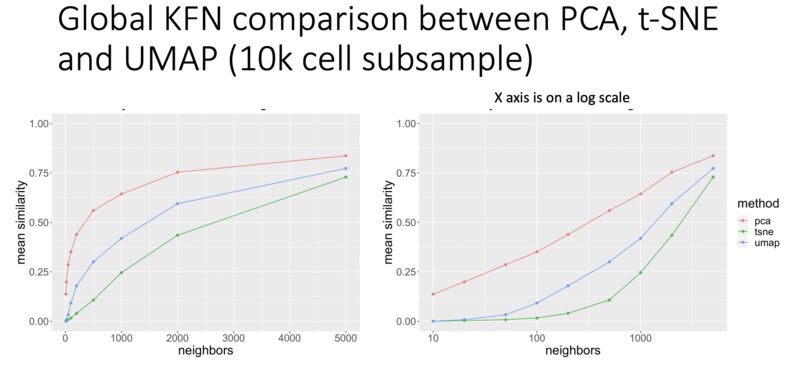

KFN overlap as a metric for evaluating global preservation for embeddings

Here's an interesting metric I developed to get at global structure preservation of high-dimensional data in a low-dimensional embedding: k-farthest neighbor overlap between high-d and embedding space. Result (in CyTOF data, so far): PCA is better than UMAP. UMAP is better than t-SNE. From my talk here: https://lnkd.in/eivsbAfE
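A minimal sketch of a KFN overlap metric, assuming NumPy/SciPy: for each cell, take its k farthest neighbors in high-dimensional space and in the embedding, compute the fraction they share, and average across cells. The full pairwise distance matrix is O(n²) in memory, so this assumes a few thousand cells (subsample beyond that). It may differ in detail from the formula used in the talk, and the function names are hypothetical.

```python
import numpy as np
from scipy.spatial.distance import cdist


def kfn_sets(X: np.ndarray, k: int) -> np.ndarray:
    """Row i holds the indices of cell i's k farthest neighbors (unordered)."""
    D = cdist(X, X)
    # argpartition moves the k largest distances of each row into the last k columns
    return np.argpartition(D, -k, axis=1)[:, -k:]


def kfn_overlap(X_high: np.ndarray, X_embed: np.ndarray, k: int):
    """Mean (and per-cell) fraction of high-dimensional KFN that are also KFN in the embedding."""
    A = kfn_sets(X_high, k)
    B = kfn_sets(X_embed, k)
    per_cell = np.array([len(np.intersect1d(a, b)) / k for a, b in zip(A, B)])
    return per_cell.mean(), per_cell
```

Comparing `kfn_overlap(X, pca_2d, k)`, `kfn_overlap(X, umap_2d, k)` and `kfn_overlap(X, tsne_2d, k)` on the same cells gives one number per method; a random embedding scores around k / n_cells, which is a useful floor.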

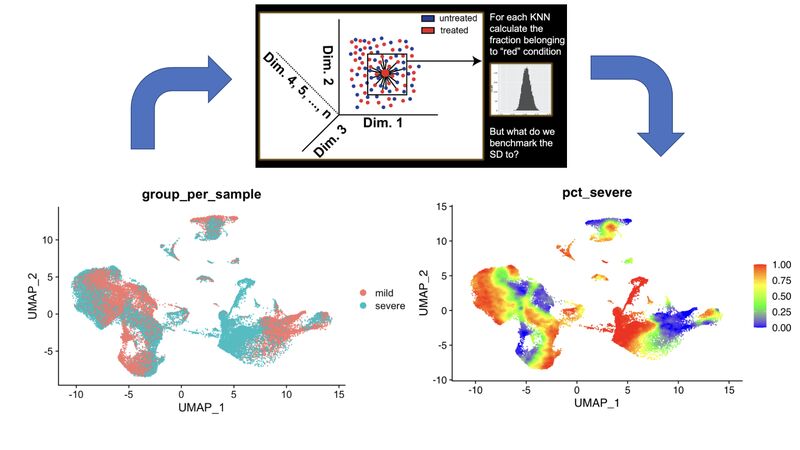

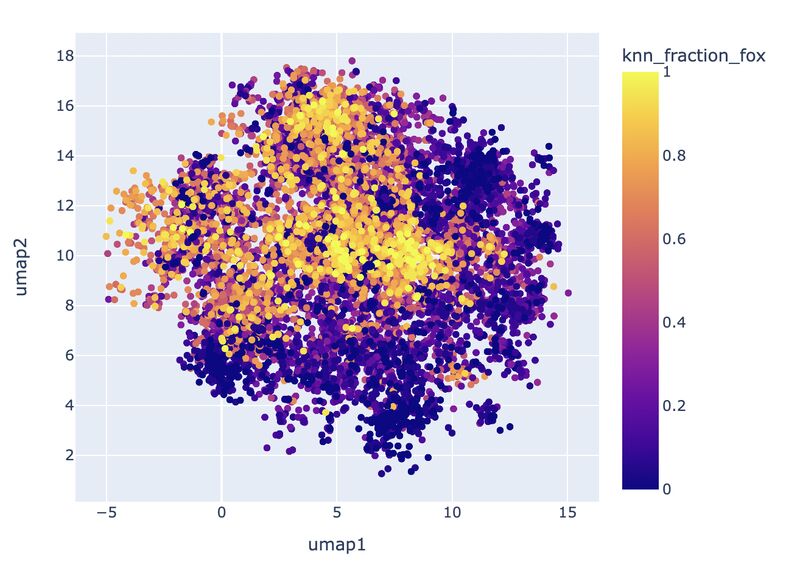

A KNN based solution to viewing data on a UMAP where one condition is "sitting on top of" the other

In my single-cell sequencing work, I sometimes come across visualizations where there are two conditions stacked onto a UMAP in two respective colors, where one is very much behind the other, making it of limited use.

A solution to this problem comes out of my thesis work on CyTOF data. Compute the k-nearest neighbors (KNN) of each cell, and then color the map by the percent of each cell's KNN that belong to condition 1. I have a preprint and a Bioconductor package around this, but in reality you just need a few lines of code, which I provide here: https://lnkd.in/eKkYub7b. Just CTRL+F for "RANN."
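The linked code is in R and uses RANN; the Python sketch below swaps in SciPy's `cKDTree` for the nearest-neighbor search. It assumes the KNN are computed in the original feature space (you could pass embedding coordinates instead) and that no two cells are exact duplicates; `knn_condition_fraction` is a hypothetical name.

```python
import numpy as np
from scipy.spatial import cKDTree


def knn_condition_fraction(X, condition, k=100, target=1):
    """Percent of each cell's k nearest neighbors (in X) that belong to the `target` condition."""
    condition = np.asarray(condition)
    tree = cKDTree(X)
    # Ask for k + 1 neighbors because each cell's closest hit is itself (no duplicates assumed).
    _, idx = tree.query(X, k=k + 1)
    neighbors = idx[:, 1:]
    return 100.0 * (condition[neighbors] == target).mean(axis=1)
```

Coloring the UMAP by the returned vector shows where condition 1 is enriched or depleted, regardless of which condition's points happen to be drawn on top.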

If you want a more in-depth look at this KNN-based solution and things you can do with it, go here: https://lnkd.in/eJYTj5s5

UMAP and t-SNE manipulation animations

Here, I ask various questions around the nature of t-SNE and UMAP, which are often well answered by manipulating the input and examining the output.

Following a cell's position across multiple t-SNE and UMAP runs

If you run t-SNE or UMAP multiple times, you can see the maps change. To properly use these tools, you need to run them more than once. Let me explain.

I ran 100 t-SNEs and 100 UMAPs on the same CyTOF dataset (Samusik bone marrow, 10,000 cells), tracking the position of a single cell across runs.

Here's what happened:

t-SNE: The cell formed a diffuse ring across runs, showing many plausible placements.

UMAP: The cell jumped between two distinct regions, showing more constraint.

Why this matters:

t-SNE has a very large solution space. The tool optimizes for local neighborhood structure, so the global structure can shift dramatically.

UMAP appears to be tighter, but still not deterministic.

Visual islands are stable (monocytes will have their own "island" throughout runs), but the total layout isn’t.

Key takeaway for researchers and team leads:

Run your dimensionality reduction multiple times.

Compare not just what islands form, but whether and how relative positions between islands change. Look for patterns that survive the shift.

As I've talked about in previous posts, t-SNE and UMAP are useful in terms of seeing the "forest in the trees," but they should not be taken as ground truth (I'll link some of my relevant work in the comments).

In future research: I’ll look at how relative island positioning changes when we control for global flips and rotations (something that you see a bit in the gifs below).
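One common way to control for global flips and rotations before comparing runs is orthogonal Procrustes alignment: center each run, then find the rotation or reflection that best maps it onto a reference run. The sketch below uses SciPy's `orthogonal_procrustes` and assumes each run is an (n_cells × 2) array with cells in the same row order. It is not the analysis behind the gifs, and it leaves differences in overall scale in place (normalizing each run to unit Frobenius norm would be one way to remove them).

```python
import numpy as np
from scipy.linalg import orthogonal_procrustes


def align_to_reference(run: np.ndarray, reference: np.ndarray) -> np.ndarray:
    """Center `run` and rotate/reflect it onto the centered `reference`."""
    A = run - run.mean(axis=0)
    B = reference - reference.mean(axis=0)
    R, _ = orthogonal_procrustes(A, B)  # orthogonal R minimizing ||A @ R - B||
    return A @ R


def track_cell(runs, cell: int) -> np.ndarray:
    """Position of one cell in every run, after aligning each run to the first."""
    reference = runs[0]
    return np.array([align_to_reference(r, reference)[cell] for r in runs])
```

After alignment, any remaining spread in a tracked cell's positions reflects changes in layout rather than the arbitrary orientation of each map.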

Seen weird variability in other tools? Leave a comment. I’d love to learn from your observations too.

I hope you all have a great day.


comment

A webinar I gave on the limits of dimensionality reduction analysis: https://watershed.bio/resources/the-limits-of-dimensionality-reduction-tools-for-single-cell-analysis

My KnnSleepwalk tool, which you all should use: https://github.com/tjburns08/KnnSleepwalk

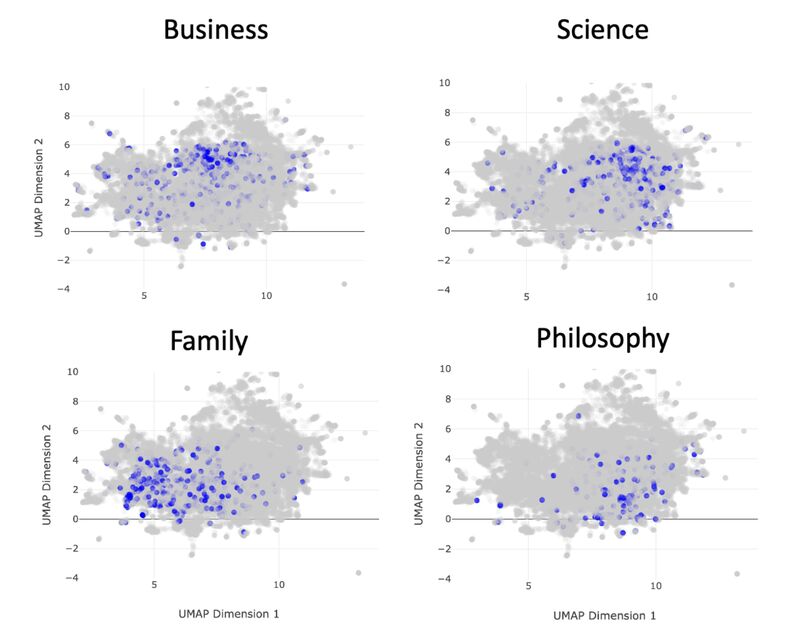

t-SNE and UMAP exist on a spectrum

In reviewing the recent "Seeing data as t-SNE and UMAP do" paper, I found out that t-SNE and UMAP are on a spectrum. Let me explain:

The Berens Lab at the University of Tübingen, Germany, developed a method called Contrastive Neighbor Embeddings (link in comments) that generalizes nonlinear dimensionality reduction algorithms, placing them on a spectrum from more local preservation (t-SNE-like) to more global preservation (UMAP-like).

Thus, rather than committing to t-SNE, UMAP, or any single method, one can sample embeddings from the whole spectrum by adjusting a particular tuning parameter. Users can then look at a handful of images across the spectrum and choose the right one.

The gif attached to this post is the flagship Samusik mouse bone marrow CyTOF dataset (technically Nikolay Samusik's analysis of Matt Spitzer's data) from the X-shift paper, that I ran through the t-SNE to UMAP spectrum tool.

While I have spent a lot of time focused on analyzing the preservation of local structure (the KNN preservation work you've seen from me), getting a feel for the global preservation is important, too, especially in datasets like this one where there are developmental trajectories.

In my experience, and also reported by the Berens Lab, there is a tradeoff between local and global preservation for these types of embeddings (KNN graph based), which makes it all the more important to have the whole spectrum in front of you.

I provide the code (in the comments) to make these images and gifs, and I encourage everyone to use this tool as well, rather than simply choosing t-SNE or UMAP or whatever is trendy and sticking with it. The more of the spectrum you see, the better intuition you'll get around the data.

Gif of running t-SNE over and over, ordered by image similarity

As requested, here are 100 t-SNE runs in a row for CyTOF data, ordered by image similarity. Notice that there are pockets of stability in the island placement. It's not completely random, as it appeared to be in the previous post. I would not have realized this had I not done this extra ordering step.

How I did it: I took every plot image and made a pairwise image distance matrix using root mean square error as a metric. I then clustered the matrix as you would when viewing it as a heatmap. I then took the row names of the clustered matrix and set that as the new order for making the gif.
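The ordering step above can be sketched with NumPy and SciPy, assuming every frame has already been loaded as an equally sized pixel array. The pairwise RMSE matrix follows the description; clustering it directly as a distance matrix with complete linkage (R's `hclust` default) is an assumption, and `order_by_image_similarity` is a hypothetical name.

```python
import numpy as np
from scipy.cluster.hierarchy import leaves_list, linkage
from scipy.spatial.distance import squareform


def order_by_image_similarity(images) -> np.ndarray:
    """Frame order that places visually similar plots next to each other."""
    flat = np.stack([np.asarray(im, dtype=float).ravel() for im in images])
    n = len(flat)
    D = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            # root mean square error between the two images, pixel by pixel
            D[i, j] = D[j, i] = np.sqrt(np.mean((flat[i] - flat[j]) ** 2))
    # Hierarchical clustering on the condensed distance matrix; leaf order = new frame order.
    Z = linkage(squareform(D), method="complete")
    return leaves_list(Z)
```

Writing the frames out in the returned order (with whatever GIF tool you already use) gives the similarity-ordered animation.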

Gif of progressively adding noisy dimensions to t-SNE

If you have one or two bad markers in your panel (noise), does it completely ruin your t-SNE/UMAP visualizations? According to my analysis so far, no. I take whole blood CyTOF data (22 dimensions) and add extra dimensions of random normal distributions, running t-SNE after each new column has been added (I've done UMAP too). What I have found:

- A few dimensions of noise do not catastrophically affect the map. Lots of noise dimensions do.

- The embedding space shrinks with increased number of dimensions. You have to hold the xy ranges constant to see this.

- When you have many dimensions of noise, the map starts to look trajectory-like (look at the end of the gif), which could affect biological interpretation.
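A minimal sketch of the setup above, assuming the marker matrix is already transformed (e.g. asinh) and that each noise column is standard normal; the actual noise scale used in the posts is not stated. All noise is drawn once and sliced, so each step adds exactly one new column to the previous matrix. Shared axis limits keep the shrinking of the embedding visible across frames. The function names are hypothetical, and the embedding call itself is left to whichever t-SNE/UMAP implementation you use.

```python
import numpy as np


def noisy_series(X: np.ndarray, max_noise: int, seed: int = 0):
    """Yield (m, X with m standard-normal noise columns appended) for m = 0..max_noise."""
    rng = np.random.default_rng(seed)
    noise = rng.standard_normal((X.shape[0], max_noise))  # drawn once, so columns are nested
    for m in range(max_noise + 1):
        yield m, np.hstack([X, noise[:, :m]])


def shared_limits(embeddings, pad: float = 0.05):
    """Common (x, y) lower and upper limits across all embeddings, with a small margin."""
    allxy = np.vstack(embeddings)
    lo, hi = allxy.min(axis=0), allxy.max(axis=0)
    span = hi - lo
    return lo - pad * span, hi + pad * span
```

Each matrix from `noisy_series` would be embedded in turn, and every frame plotted with the limits from `shared_limits` so that changes in map size are not hidden by autoscaling.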

Gif of running t-SNE and UMAP over and over

Run t-SNE and UMAP on CyTOF data 100 times in a row. How much does the island placement for each map vary from the previous one? Notice that UMAP is quite a bit more stable. This could be the initialization, or the optimization function of UMAP, which has a "push distant cells away" component.

Gif of progressively adding noisy dimensions to UMAP

UMAP on noisy non-trajectory data looks like a trajectory. I add one noisy dimension to whole blood CyTOF data, run UMAP, add another noise dimension, run UMAP again, etc. The map starts to look like a trajectory around 30 added noisy dimensions (biologically, it's not a trajectory at all).

If you're looking at a UMAP of an unfamiliar biological dataset (e.g. from a new technology), and it looks like a trajectory, be careful with the biological interpretation. It could just be noise.

Use my code and try it on your data here: https://lnkd.in/eD29nQaw

A relevant article I wrote on the Beauty is Truth Delusion that will get you in the right mindset: https://lnkd.in/ezeZV_Fj

A relevant interrogation of dimension reduction with lots of pictures here: https://lnkd.in/eivsbAfE

Teaching and learning bioinformatics

Some of my work involves teaching bioinformatics, especially to biologists who are currently learning. I am good at this in particular because I started out as a biologist and learned bioinformatics later in life. The posts here are reflections and insights in this direction.

Scanpy analysis intuition building app

Turn the knobs, see how the output changes.

When you are analyzing single-cell and spatial data, you can gain intuition by changing the parameters of the pipeline and seeing how the output changes. To this end, we made a small app that lets you change some of the critical settings in a given pipeline and see how they affect the downstream output.

The app runs the PBMC 3k dataset through a standard analysis pipeline via scanpy. The UI allows you to change the dataset, the number of highly variable genes, the number of PCs, and the number of nearest neighbors (k) used for the UMAP. The output is a UMAP that is colored by the pre-made cell annotations. You can therefore look at how the shape of the UMAP changes, and how distinct the annotations are (in optimal circumstances, they should be maximally separate).
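For readers who want to turn the same knobs outside the app, here is a minimal sketch of a standard scanpy pipeline with the three parameters exposed. The steps follow the common scanpy PBMC 3k workflow and may differ from what the app actually runs (check the repo); `run_pipeline`, `parameter_grid`, and the grid values are hypothetical.

```python
from itertools import product


def run_pipeline(adata, n_hvg=2000, n_pcs=10, n_neighbors=15):
    """Standard scanpy steps on a copy of `adata`: filter, normalize, HVGs, PCA, KNN graph, UMAP."""
    import scanpy as sc  # imported lazily so the parameter grid works without scanpy installed

    ad = adata.copy()
    sc.pp.filter_cells(ad, min_genes=200)
    sc.pp.filter_genes(ad, min_cells=3)
    sc.pp.normalize_total(ad, target_sum=1e4)
    sc.pp.log1p(ad)
    sc.pp.highly_variable_genes(ad, n_top_genes=n_hvg)  # knob 1: number of HVGs
    ad = ad[:, ad.var["highly_variable"]].copy()
    sc.pp.scale(ad, max_value=10)
    sc.tl.pca(ad, n_comps=n_pcs)  # knob 2: number of PCs
    sc.pp.neighbors(ad, n_neighbors=n_neighbors, n_pcs=n_pcs)  # knob 3: k for the KNN graph behind the UMAP
    sc.tl.umap(ad)
    return ad


def parameter_grid(n_hvgs=(100, 2000, 5000), n_pcs=(10, 30), ks=(5, 15, 50)):
    """Every combination of the three knobs, as keyword-argument dicts for run_pipeline."""
    return [
        {"n_hvg": h, "n_pcs": p, "n_neighbors": k}
        for h, p, k in product(n_hvgs, n_pcs, ks)
    ]
```

Looping over `parameter_grid()` with `sc.datasets.pbmc3k()` as input and plotting each result with `sc.pl.umap(ad, color=...)` (using whatever annotation column you have) gives a static version of the app's comparison.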

One observation we made is that for this dataset, changing the number of highly variable genes produced modest visual changes, but not as substantial as I previously thought (with the exception of going down to a very small number, like 100). Knowing this kind of thing is important, per dataset. For example, it could be that for other data types going from 2000 to 5000 HVGs would produce very different results.

You have to try it yourself in order to see for yourself, and that's what this app is all about.

This is a "version 0" and there are many things that we would like to add down the line. One of them is the ability to drag and drop your own dataset, which is low-hanging fruit. Others include coloring by cluster ID, with a choice of clustering algorithms and their respective settings (e.g. Louvain, Leiden, k-means).

Additional downstream metrics would help too, such as how good the UMAP is (via our KnnSleepwalk tool), or an F1 score between the pre-made cell annotations and new clusters. Later apps could tackle things like testing data integration methods across two flagship datasets.

To use the app, you simply have to clone the github repo, pip install the requirements.txt file, and run the "app" python script. This will produce a link you can open in your browser, and you are good to go.

We are currently in the process of building out front-end interfaces to the work we have done in the past and the work we are currently doing. We think that this will make our work more accessible and usable to a larger audience with fewer steps.

If you are a member of the single-cell and/or spatial community and have any requests for custom-tailored apps (or extensions of apps, modules I can build into existing SaaS products, etc.), please let me know.

Link to the app is in the comments below. Credit to Arianna Aalami for fleshing out a lot of the code. Thank you, and I hope you all have a great day.

comment

The GitHub link to the project is here: https://github.com/ariannaaalami/interactive_scanpy_pipeline

I managed to host it on Plotly Cloud (I'm an early access member), though the link has been a bit unreliable so far. I'll link it nonetheless: https://interactive-scanpy-pipeline.plotly.app/

How I went from biologist to biology-leveraged bioinformatician

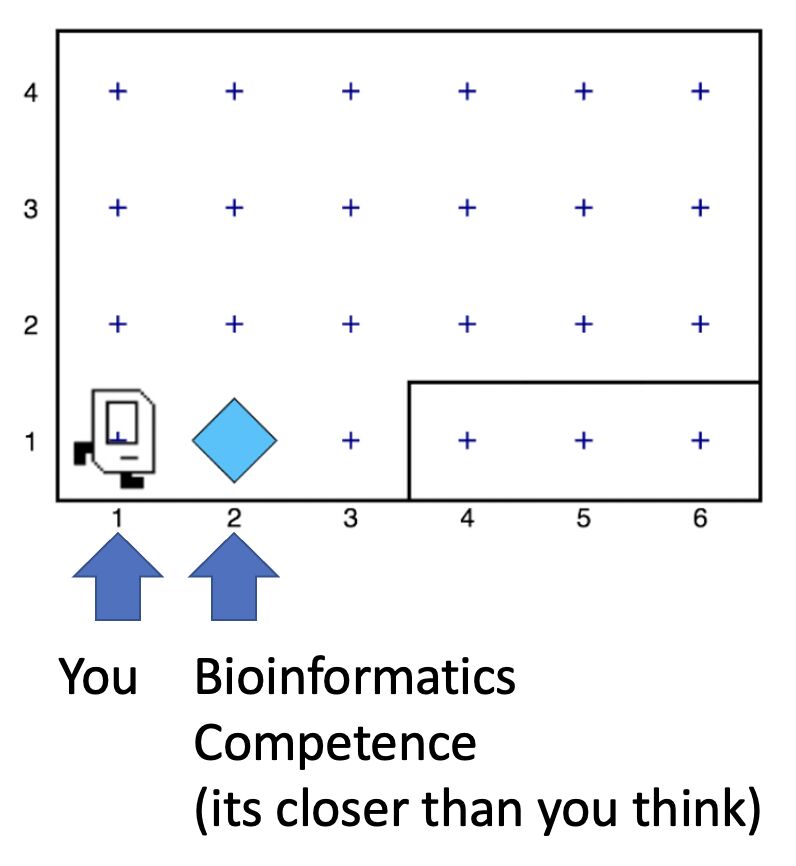

Here is a post I wrote for biologists and team leaders about my journey from wet-lab biologist to biology-leveraged bioinformatician. In short, I think you can do it too, and if you're working in the life sciences, you SHOULD do it too. You can quickly get to a level where you can understand and communicate effectively with your comp bio team, something that is essential for any project that contains any -omics data. To summarize:

- I started with Karel the Robot (link in post). This is the illustration below. It's what every CS106A student at Stanford starts with. It teaches you a surprising amount of general programming principles that I still use today. Importantly, it makes coding less scary.

- I spent a lot of time just trying things (and still do). This was due to the fact that I was initially working with CyTOF data before there were many established best practices and high-level frameworks. Nassim Taleb calls this "convex tinkering" and in my experience, this is better than hand-waving. In the context of bioinformatics, when I try a thing, I am often either wrong or partially wrong about what I thought I was going to see.

- When I am completely stuck on a problem, I solve a simpler but related problem. This is a nice trick to keep the momentum going, and to get me into the flow state. The latter is something essential, if not sacred, to my workday.

Have a look here for more insights and depth: https://lnkd.in/eQ-2BvNn

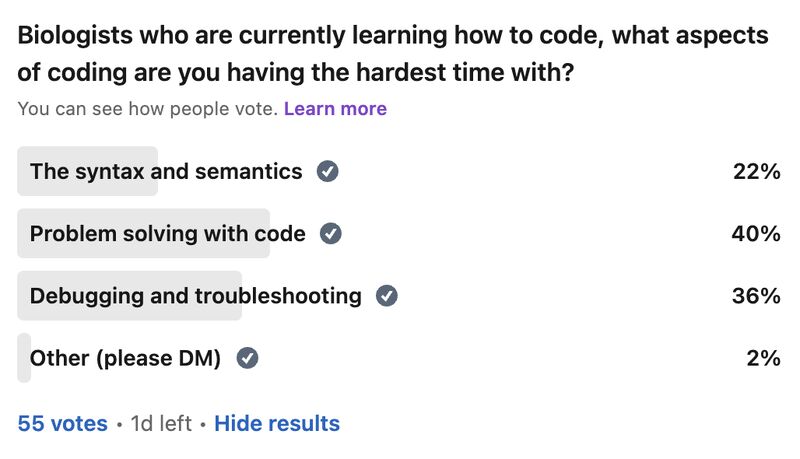

Problem solving as a bottleneck to learning how to code

My survey has revealed that the act of problem solving is a bottleneck for biologists learning how to code. So let me give you a tool that has helped me in the problem solving process over the years, especially when I feel "paralyzed" in the face of a problem:

Simplify.

Sometimes it's simplifying the problem itself, and sometimes it's solving a simpler but related problem. The act of doing so allows you to get some "psychological momentum." What you don't want is to be paralyzed, and not know what to do next.

As an example, I like to tell the story of problem set 3 in CS106A: designing the arcade game Breakout using a Java graphics library. My problem was that even the act of decomposing the problem (standard practice) was stressful, because there were so many pieces that I didn't understand. It was overwhelming to consider everything at once.

So I asked myself: could I make a ball bounce around off the walls? No, too complicated. How about just the game window with nothing in it? Ok. That worked. How about the ball in the center of the screen, in place? Ok, that worked. How about getting the ball to move one pixel to the right and then stop? That worked too! Now I was getting some momentum.

It was in that way that I got to a point where I could do the classic problem decomposition and solve the rest of the problem.

So whatever you're trying to solve, try solving a simpler version of the problem, or try solving a simpler but related problem. Keep the momentum going.

More resources in the comments below.

Learning how to code has improved how I think

This image is romanesco broccoli. I came across it sophomore year in my dorm cafeteria. The pattern at play was amazing, but…hard to put into words. When I was learning how to code, I learned the word for the concept at hand: recursion. Learning how to code has given me many instances of this, where I can reason better about something that was otherwise hard to put into words.

In general, learning how to code has improved how I think. It has given me a new lens, the computational lens, through which I can see the world. I wrote and chiseled away at an article over the past year and three months on this topic, and I'm finally ready to share it with you. The article can be boiled down into three main points.

The first point is that, in comparison to standard wet-lab biology, coding and bioinformatic analysis often involve the scientific method, sped up. A lab experiment used to take me on the order of hours to days, whereas computational experiments (e.g. when debugging or analyzing data) take me on the order of seconds to minutes. Accordingly, you can build intuition around something really fast, and go through the process of being wrong, figuring out where you were wrong, and improving your thinking so you're not wrong about it again.

The second point is that computer science allows you to reason about and operate on topics that are otherwise difficult to put into words. An example of this is "levels of abstraction," where I show you what "hello world" looks like in python (not much stuff), C (a bit more stuff), and assembly (a whole lot of stuff), so you can appreciate the sheer volume of things that get swept under the rug when you write print("hello world") in python.
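The article walks all the way down to C and assembly. For a smaller taste of the same idea without leaving python, the standard-library `dis` module shows the bytecode CPython generates for that one-liner, one level below the source you typed. The exact instruction names differ between python versions, so no expected output is written out here.

```python
import dis

# Compile the one-liner, then list the bytecode instructions CPython generates for it.
code = compile('print("hello world")', "<example>", "exec")
instructions = list(dis.get_instructions(code))

for ins in instructions:
    # opname is the instruction; argrepr is a readable form of its argument (if any).
    print(f"{ins.opname:<16} {ins.argrepr}")
```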

The third point is that in terms of "computational thinking," the computational lens is not meant to replace all other forms of thinking. It is meant to be added to your "latticework of mental models," to use the framing of the late Charlie Munger (link in comments). In other words, you want to be able to look at a problem through as many lenses as you can. I link more material about this in the article.

Overall, learning how to code takes time, so don't fret if you're moving forward more slowly than you'd like. This is normal. This said, I do offer a class to get biologists started with programming, with an in-person option and a virtual option. Any labs who are interested, please feel free to reach out. Otherwise, if you want quick (free) advice, feel free to reach out too.

The image is from the Wikipedia article on romanesco broccoli, by Ivar Leidus, licensed under CC BY-SA 4.0.

The article is here.

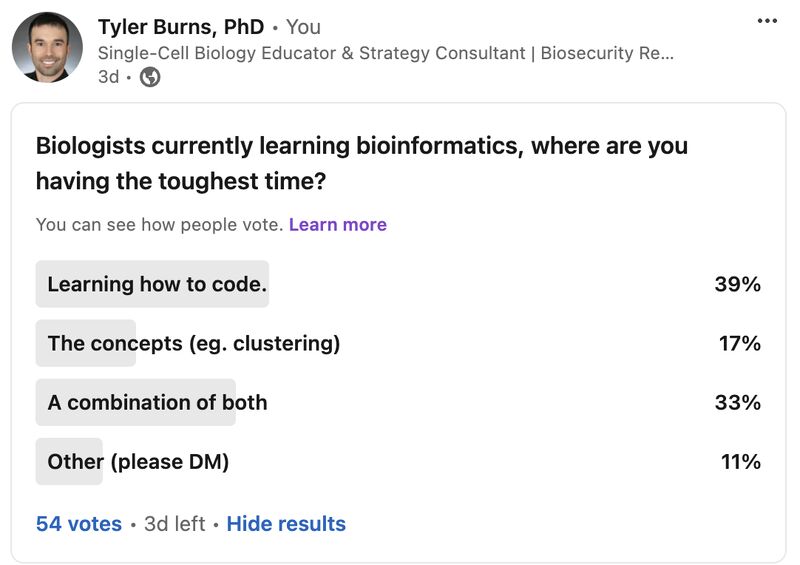

Biologists becoming bioinformaticians are having the hardest time learning how to code

My survey has already revealed that a large bottleneck for biologists learning bioinformatics is the act of learning how to code, even with plenty of online resources, bootcamps, LLMs, etc. out there these days. Let me explain why I think this is the case, based on what I've seen and experienced.

For one to do bioinformatics effectively, one must learn how to think computationally. This generally means that one must know how to apply the basic principles of computer science to a problem, like abstraction, problem decomposition, and turning concepts into code. There's a great essay on this idea from 2006 by Jeannette M. Wing that I'll link in the comments.

To learn how to think computationally, I had to learn how to independently write code. What I mean by independently is that when faced with a computer science or bioinformatics problem, I would really struggle with it before looking for some sort of answer online (something that's easier now given ChatGPT, etc.). It's the equivalent of doing the math problems in school without looking up the answer in the back of the book first. I still keep up this practice today, trying to independently think/work through a problem before I look at what others have done.

Coding is a learn-by-doing activity. It is not something that you're spoon-fed. You get better with every problem you solve. I started with very small problems and then I worked my way up. It's a lot of work, and it takes time. But proper guidance early on really helps.

One can get started with the foundations of computational thinking in a few weeks with a program called Karel the Robot. It's what every intro CS student at Stanford starts with. It's what I started with. It's what I have the people I teach start with. It not only provides a solid foundation but also demystifies what coding and computational thinking are. The concepts and virtues (eg. patience) I learned with Karel the Robot I still use today, ten years later. I'll link a place to get started in the comments.
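For a flavor of how Karel teaches decomposition: the robot starts out knowing only a few primitive commands, including turning left but not turning right, so an early exercise is building a right turn out of three left turns. The toy class below is a hypothetical python stand-in written for illustration; it is not the actual Karel library or its API.

```python
# Hypothetical toy robot for illustration; not the Stanford Karel library.
DIRECTIONS = ["east", "north", "west", "south"]  # counterclockwise order
STEPS = {"east": (1, 0), "north": (0, 1), "west": (-1, 0), "south": (0, -1)}


class ToyKarel:
    def __init__(self, x=1, y=1, facing="east"):
        self.x, self.y = x, y
        self.facing = facing

    # Primitive commands the robot "knows" out of the box.
    def move(self):
        dx, dy = STEPS[self.facing]
        self.x += dx
        self.y += dy

    def turn_left(self):
        i = DIRECTIONS.index(self.facing)
        self.facing = DIRECTIONS[(i + 1) % 4]

    # New commands built only from the primitives: decomposition in miniature.
    def turn_right(self):
        for _ in range(3):
            self.turn_left()

    def turn_around(self):
        self.turn_left()
        self.turn_left()


karel = ToyKarel(1, 3)
karel.move()        # now at (2, 3), still facing east
karel.turn_right()  # three left turns: east -> north -> west -> south
karel.move()
print(karel.x, karel.y, karel.facing)  # 2 2 south
```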

You can't simply become a code-fluent, computationally minded bioinformatician in a single short bootcamp. But you can develop the right foundations that allow you to effectively move yourself forward from that point on.

I remember what it feels like to be a wet-lab biologist and be totally overwhelmed with this stuff. As such, I have been teaching people how to learn bioinformatics from the standpoint of a wet-lab biologist. Luckily, my availability is going to open up again this summer, so any labs who are interested, please reach out.

Recap on teaching engagement with Zamora Lab at MCW

After speaking with many labs last year, I determined (as many others have) that there is a lack of bioinformatics support in academia. Thus, many biologists are pressured to learn these skills on their own (as if they don't have enough on their plate already). Aside from the additional stress, this can lead to serious mistakes downstream. Anyone who knows about the replication crises in various fields should be concerned at this point.

The good news is, I have also determined that biologists are fully capable of learning these skills. They just need the right guidance. Thus, I have lots of respect for trained bioinformaticians who are going out of their way to teach this material to biologists, and I encourage all of us to teach when we can.

How to do it is a complex topic, and I don't think you can go from neophyte to bioinformatician in a few days. But I think providing the right foundations along with proper follow-up can go a long way. It did take me a long time to learn bioinformatics myself as a biologist, but it did not take long for me to have a solid foundation from which I could already start adding value.

I saw this firsthand with the lab of Anthony Zamora this past week. I spent three days on site with them, and there is plenty of follow-up planned. If your lab needs training and/or advising, and your local bioinformaticians don't have bandwidth, please contact me. I wish you all the best.

Those who can, do; those who have done, teach

I am tired of the phrase "those who can, do; those who can't, teach." So let me fix it for you. "Those who can, do; those who have done, teach." Three things come out of this:

- If you have experience in anything (which you do), teach it: Yes, there's a lot more educational content these days, but you are specialized in your own way. Just about everyone I know has something unique to say that has not been formalized or at least put in writing. My grandma had all kinds of wisdom that she sadly never wrote down. Thus, I aim to die with everything on paper.

- Education is becoming increasingly important: in my corner, from cancer biology to bioinformatics, everything is interdisciplinary now. You have physicians talking to biologists talking to engineers talking to computer scientists, each speaking a different "language" and trying to understand each other. One question I'm asking myself a lot these days: how can I teach in a few hours the mental models that have taken me 10,000 hours to really understand?

- Respect for educators: teaching is hard. Communication is hard. You have to figure out a way to operationalize things you may never have put into words. You have to remember what it's like to not know the thing, which may be a long time ago. You have to cater to different learning styles. I don't think teachers (especially in the US) get nearly the respect they deserve.

This can/can't, do/teach dichotomy held me back for a long time. I have been in the single-cell world for 12 years now, and I do a lot more bioinformatics teaching than I used to, born of all my experience doing bioinformatics. It has way more impact, and I love every minute of it.

If you're a student, postdoc, tech, or scientist in academia or industry, DM me and I'll give you 15 minutes of free advice about single-cell bioinformatics, any sub-topic you want. Or just say hi. I have nothing to sell you. My paid teaching/training services go to the PIs and group leaders: if you want me to set up a more formal bioinformatics workshop or advisory role for your group/lab, DM me and we'll talk. Site visits are on the table.

If you know anyone who could use this post or my teaching/advice, please share it. I hope you all have a great day.

Journal club and related

Sometimes I read papers and like to talk about them.

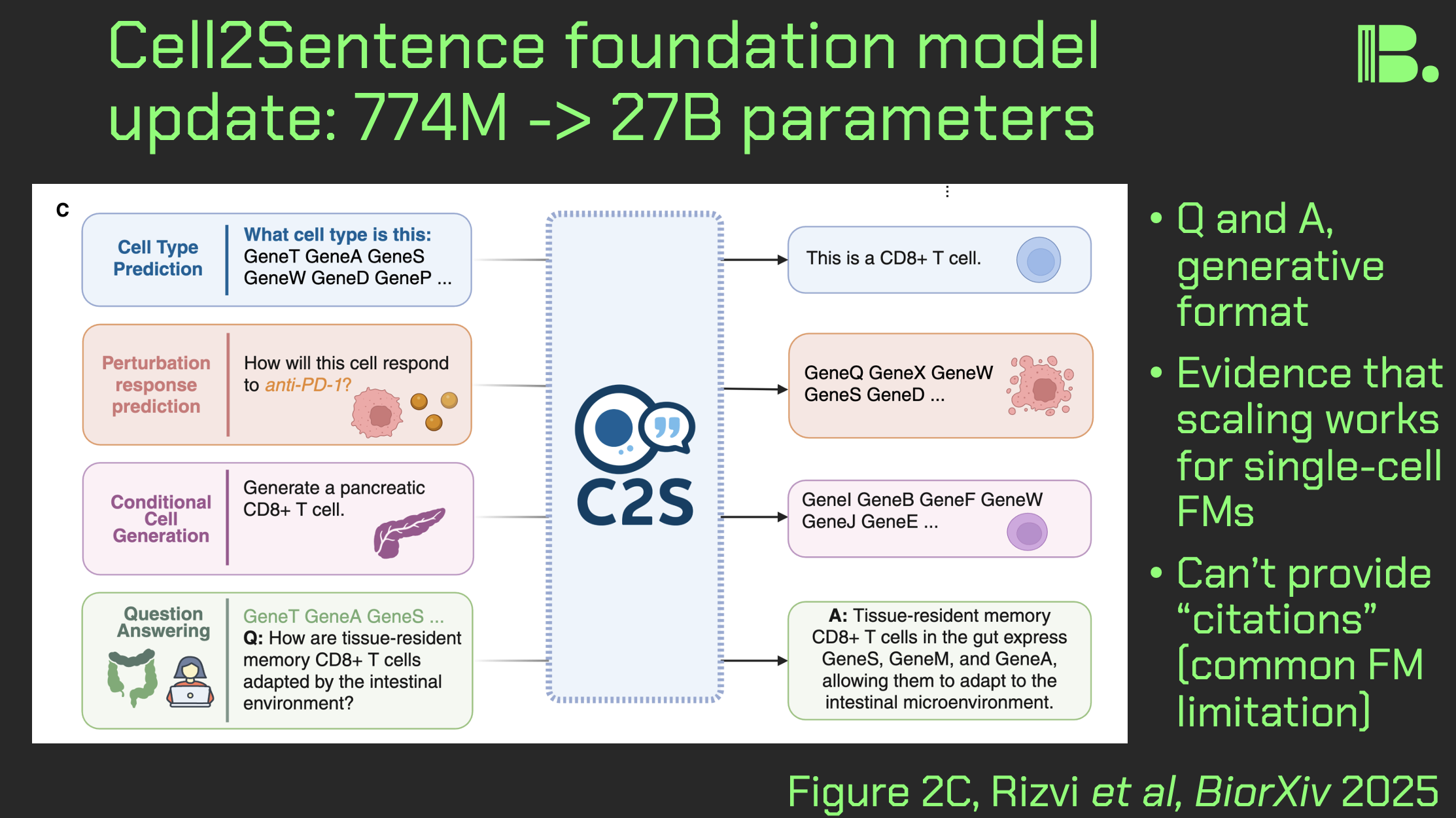

Cell2Sentence update: 27B parameters

As a follow-up to my earlier posts about single-cell foundation models, I wanted to do a post-length journal club on a recently updated cell2sentence (C2S) model that now has 27B parameters and some spatial capabilities. Here is what stands out and what it means for you:

Some spatial reasoning capabilities:

Figure 6 shows C2S being used to predict niche neighborhoods for CosMx data. Folding in CellPhoneDB and BioGRID interaction data gave an accuracy of 70% (versus 55% for C2S alone). This is interesting, and it does make me wonder how far spatial reasoning can be pushed.

However, I also wonder whether it would just be easier to loop in a foundation model trained only on spatial data for spatial tasks rather than trying to make an everything model. I covered one such spatial model, KRONOS, last summer.

Querying, predictions, but no citations:

Figure 2C shows that this model in particular has cell embeddings (like UCE, which I recently covered), but also has LLM querying capabilities. This is because part of the training process is converting single-cell data into long "sentences," one per cell, consisting of gene names rank-ordered by expression. These get mishmashed in with data from publications.
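A minimal sketch of the "cell sentence" idea, assuming a cell is given as a gene-to-expression dictionary. Dropping zero-expression genes, breaking ties alphabetically, and the optional `top_k` cutoff are choices made here for illustration; the actual C2S preprocessing may differ, so check their code before relying on this.

```python
def cell_to_sentence(expression, top_k=None):
    """Turn one cell's gene -> expression mapping into a space-separated "sentence"
    of gene names, ordered from highest to lowest expression (zeros dropped)."""
    ranked = sorted(
        ((gene, value) for gene, value in expression.items() if value > 0),
        key=lambda item: (-item[1], item[0]),  # descending expression, ties alphabetical
    )
    genes = [gene for gene, _ in ranked]
    if top_k is not None:
        genes = genes[:top_k]  # optionally keep only the most highly expressed genes
    return " ".join(genes)


# Made-up expression values for a single cell.
cell = {"CD3E": 12.0, "CD8A": 7.5, "MS4A1": 0.0, "GNLY": 3.0, "IL7R": 7.5}
print(cell_to_sentence(cell))  # CD3E CD8A IL7R GNLY
```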

Figure 9 shows that this scaled-up model in particular was able to make a validated prediction about kinase inhibitor silmitasertib that anyone in pharma might want to take a look at.

This said, the frustrating thing is that the answers do not have "citations" back to the training data (such is how these things work). As a NotebookLM user, I'm used to answers that point back to their sources. Maybe the model could at least have a post-hoc matching function that would pull forth the datasets and abstracts from which the answer most likely came.

Benchmarking AI models based on latent spaces of other AI models:

Such a matching function might involve embedding both the output and the training data in the latent space of some other model, and doing a nearest neighbor search. Such an idea shows up in the benchmarking section of the paper…
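Here is a rough sketch of what such a post-hoc matching function could look like, assuming you already have embeddings for the model's answer and for each candidate source (the toy 3-D vectors and the ids below are made up). It ranks sources by cosine similarity with a linear scan; a real system would use a proper vector index.

```python
import math


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def most_likely_sources(answer_vec, corpus, k=2):
    """Rank corpus items (id -> embedding) by cosine similarity to the answer embedding."""
    scored = [(doc_id, cosine_similarity(answer_vec, vec)) for doc_id, vec in corpus.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return [doc_id for doc_id, _ in scored[:k]]


# Toy embeddings; in practice these would come from some other model's latent space.
corpus = {
    "abstract_A": [0.9, 0.1, 0.0],
    "dataset_B": [0.1, 0.9, 0.1],
    "abstract_C": [0.7, 0.3, 0.1],
}
answer = [0.8, 0.2, 0.05]
print(most_likely_sources(answer, corpus))  # ['abstract_A', 'abstract_C']
```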

The authors use evaluation metrics in the paper that actually require the latent space of other foundation models. One way to understand this is to think of how you would determine how semantically close two sentences are to each other, perhaps in comparison to another pair of sentences. You'd throw these sentences into a BERT embedding (see my TED talk) and measure the distance between the points in the embedding space.

This is called BERTScore, and you can see its use in Figures 4C and 4D, where they evaluate the "scaling" of the model.
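The core of BERTScore is a greedy matching between token embeddings: each token in one sentence is paired with its most similar token in the other, and those cosine similarities are averaged into precision and recall, then combined into F1. The sketch below implements just that core on made-up vectors. The real metric gets contextual token embeddings from a BERT-family model and optionally adds idf weighting and baseline rescaling, which are left out here.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def bertscore_f1(candidate, reference):
    """Greedy-matching F1 between two lists of token embeddings (no idf, no rescaling)."""
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(max(cosine(r, c) for c in candidate) for r in reference) / len(reference)
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(cosine(c, r) for r in reference) for c in candidate) / len(candidate)
    return 2 * precision * recall / (precision + recall)


reference = [[1.0, 0.0], [0.0, 1.0]]  # two toy "token embeddings"
candidate = [[1.0, 0.0]]              # covers only the first reference token
print(round(bertscore_f1(candidate, reference), 3))  # 0.667
```

Here precision is 1.0 (the single candidate token matches perfectly) but recall is 0.5 (half the reference is uncovered), which is why F1 lands at 2/3.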

The point is: you have an evaluation metric for AI models, which is really a distance function within an embedding space of another AI model. This is a clever idea, but I just hope that in 10 years we won't have evaluation metrics that consist of the latent space of another model, evaluated on the latent space of another model, all the way down.

Conclusion:

As I said in the KRONOS post four months ago, I am waiting for the possibility that foundation models have their "GPT-3 moment" where with enough scale, they are suddenly in every lab everywhere.

This said, it's pretty much impossible for me to evaluate the quality of every dataset that goes into these models. So I intend to use these things as hypothesis generators, but also to stick with the old-fashioned way of looking things up myself. A sort of barbell strategy between old and new.

Aside from that, the model is runnable on Google Colab (though I haven't gone beyond the starter code they give you), and you should try it. Thank you and I hope you all have a great day.

The pre-print is here: https://www.biorxiv.org/content/10.1101/2025.04.14.648850v2

Thoughts about ESSB 2025

I had a great time at ESSB 2025 in Heidelberg, where I caught up with and met great spatial bio researchers, and got up to speed on the bleeding edge of both wet lab and dry lab practices. A few things that stood out to me:

Very high-plex:

There was a talk that had an antibody panel of 1133 proteins. Up until this point, I had seen at most a few hundred. With my roots in CyTOF, I know how much work can go into building a panel of 30 antibodies, so I think antibody validation might be a bottleneck here unless we have robotic arms coupled to vision transformers doing 5-point titrations and such (now there's a startup idea).

Multiple modalities:

There was an interesting focus on metabolites. One direction was pure mass spec. This gets you a large number of readouts, but you don't yet get single-cell resolution. Another interesting strategy I saw was the construction of aptamer-oligo conjugates that bind to metabolites and can in turn be sequenced. Think CITE-seq for metabolites.

Analysis, appearance versus reality:

One talk emphasized how hard it was to segment microglia in particular because of their weird shape. Thus, a lot of it was done manually. This is despite the fact that there is a perception that segmentation is a solved problem with all the AI developments. It is not.

From 2-D to 3-D:

There were a number of talks that went into 3-D imaging (e.g. making a bone clear so you can "fly through" it after you stain), and one where an interesting 2-D image observation needed a 3-D image as follow-up. There is a whole world of analysis problems to solve here when this comes to fruition, from new algorithms to data management. Might be worth doing some theoretical work here. And along those lines…

Math and human interpretability:

One talk was using topology to create human readable analysis outputs. This group has a "product market fit" at their institute right now because they were working out the theory behind a lot of the current issues (multiple modalities, etc) 5 years ago. This leads to both human-readable output, and interesting parameters that can be fed into foundation models and the like. So for the rest of us: what types of data are inevitable, and what can we do now to prepare for that? It will help to have mathematicians in your circles for this theoretical work.

Conclusion:

There is absolutely a gap between what you learn at conferences and what shows up on the open internet. In sum, this conference gave me a better impression of what is going on in the spatial field than any paper or social media post has. I look forward to seeing how things develop between now and the next conference from the European Society for Spatial Biology (ESSB) e.V. in Barcelona.

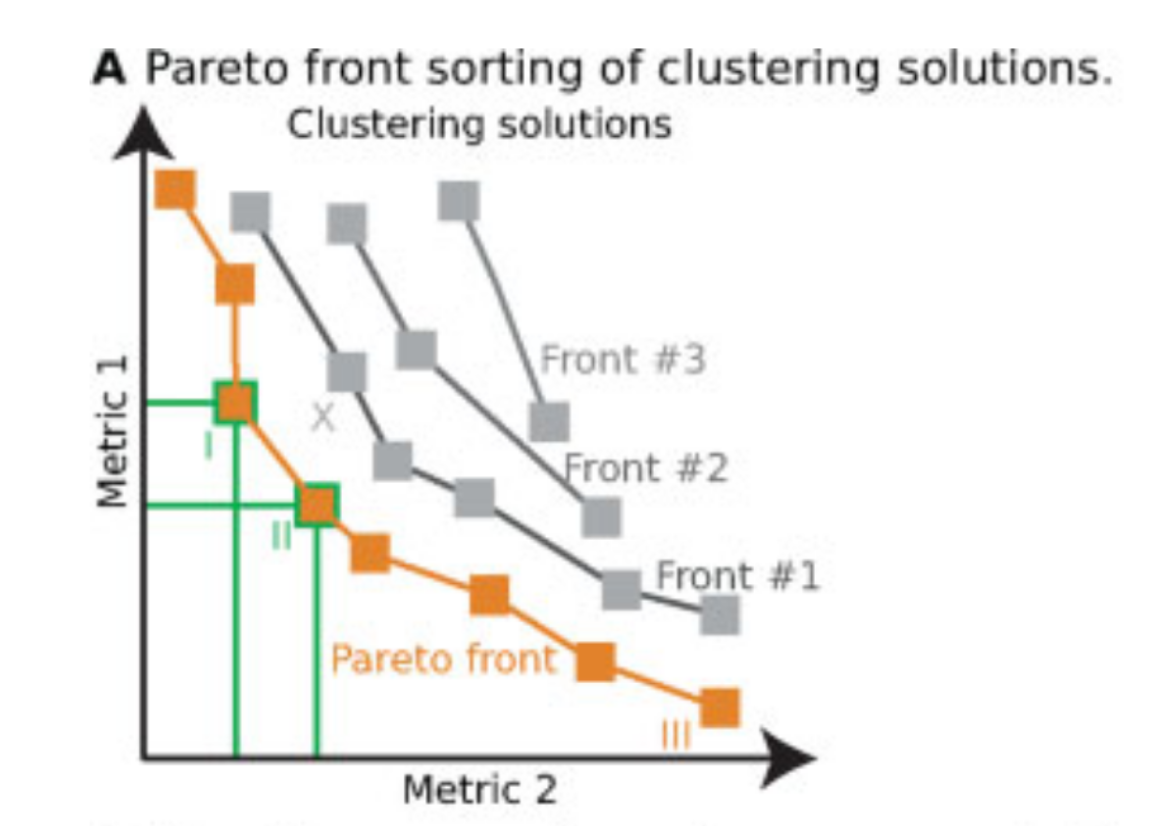

Pareto fronts for cluster benchmarking

In light of recent benchmarking work I've been doing, I was introduced to a paper that uses Pareto fronts to look at multiple metrics, rather than one at a time. This helped me think through some issues I've been dealing with in dimensionality reduction, where local and global preservation present as tradeoffs.

In short, Putri and colleagues (link in the comments) look at what the maximum possible scores are along these tradeoffs. This is the Pareto front (see figure 1a from the paper in the image below).

The authors use this type of analysis for cluster benchmarking. They look at FlowSOM, PhenoGraph, and their own method, ChronoClust. They look at the Pareto front of four evaluation metrics.

They note that their ChronoClust method underperforms, which shows that they are doing a critical evaluation, as opposed to trying to promote their own tool.
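To make the Pareto front concrete: a run sits on the front if no other run is at least as good on every metric and strictly better on at least one. The sketch below assumes higher is better for every metric (flip the sign of any lower-is-better metric first) and uses made-up run names and scores, not the paper's results.

```python
def dominates(a, b):
    """True if a is at least as good as b on every metric and strictly better on one
    (higher = better for all metrics)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(results):
    """Names of the non-dominated entries in a dict of name -> tuple of metric scores."""
    return [
        name
        for name, scores in results.items()
        if not any(dominates(other, scores)
                   for other_name, other in results.items() if other_name != name)
    ]


# Hypothetical (metric_1, metric_2) scores for four runs that trade off against each other.
results = {
    "run_1": (0.90, 0.40),
    "run_2": (0.70, 0.70),
    "run_3": (0.60, 0.60),  # worse than run_2 on both metrics, so it is dominated
    "run_4": (0.40, 0.85),
}
print(pareto_front(results))  # ['run_1', 'run_2', 'run_4']
```

The same function works unchanged for four metrics, as in the paper; the tuples just get longer.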

The bigger picture:

- If you're benchmarking and using metrics where there are trade-offs, consider using a Pareto front-based approach.

- You should probably be benchmarking your pipelines internally. At the minimum, look at what public benchmarking dataset most closely "maps" to your internal data, and look at the benchmarking reports for that dataset.

Thank you David Novak for bringing this paper to my attention.

I hope you all have a great day.

comment

Paper is here.

The KRONOS patch-based spatial foundation model

If you do spatial, you know QC is a headache. There's a new spatial foundation model called KRONOS out this week that can help with that. I read the pre-print and spoke with the corresponding author. Here is what I found:

Quick overview:

This foundation model is a vision transformer (similar to the LLMs, but can "see") trained on high-parameter imaging datasets (e.g. Akoya CODEX). Specifically, it is trained on "patches" rather than cells: 47M patches, across 175 protein markers, across 8 imaging platforms. The interesting thing here is that operating at the patch level can bypass cell segmentation entirely. This helps avoid a lot of headache for the personnel involved, and allows for the capture of nuances (e.g. in neurons that are not perfectly segmented).
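To illustrate what working at the patch level means, here is a minimal sketch that tiles a multichannel image into non-overlapping square patches, with no segmentation step involved. The patch size, the dropping of incomplete edge patches, and the nested-list image format are all assumptions for illustration; this is not necessarily how KRONOS patches its inputs (see their GitHub for that).

```python
def tile_patches(image, patch_size):
    """Split a (channels, height, width) image, given as nested lists, into
    non-overlapping square patches. Edge pixels that don't fill a whole patch are dropped.
    Yields (patch_row, patch_col, patch), with patch shaped (channels, patch_size, patch_size)."""
    height = len(image[0])
    width = len(image[0][0])
    for top in range(0, height - patch_size + 1, patch_size):
        for left in range(0, width - patch_size + 1, patch_size):
            patch = [
                [channel[r][left:left + patch_size] for r in range(top, top + patch_size)]
                for channel in image
            ]
            yield top // patch_size, left // patch_size, patch


# Toy 2-channel, 4 x 6 image (think: two protein markers); pixel values encode position.
image = [
    [[c * 100 + r * 10 + x for x in range(6)] for r in range(4)]
    for c in range(2)
]
patches = list(tile_patches(image, patch_size=2))
print(len(patches))  # 6 (2 rows x 3 columns of patches)
```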

Deep dive:

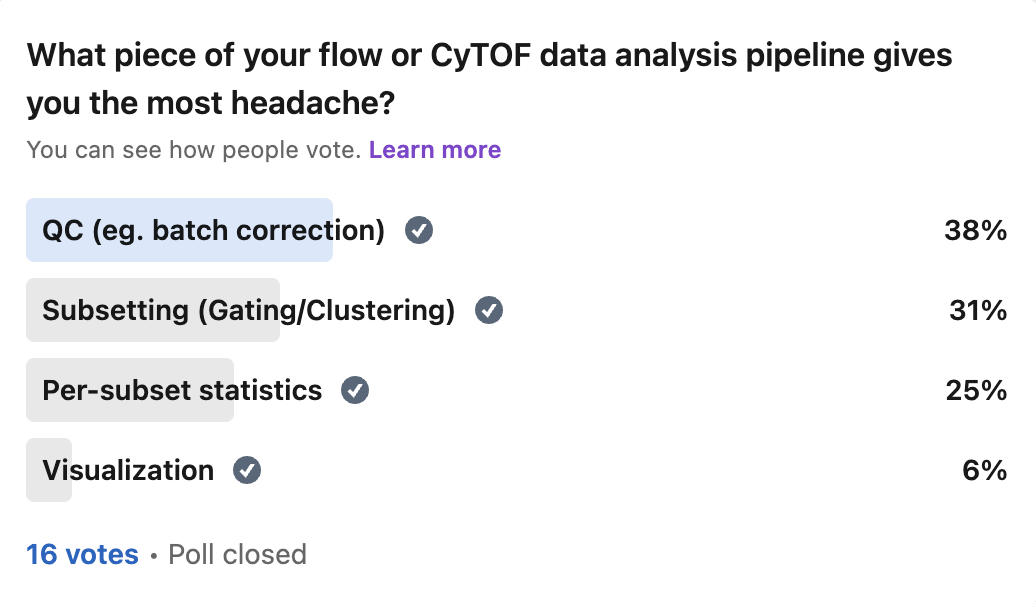

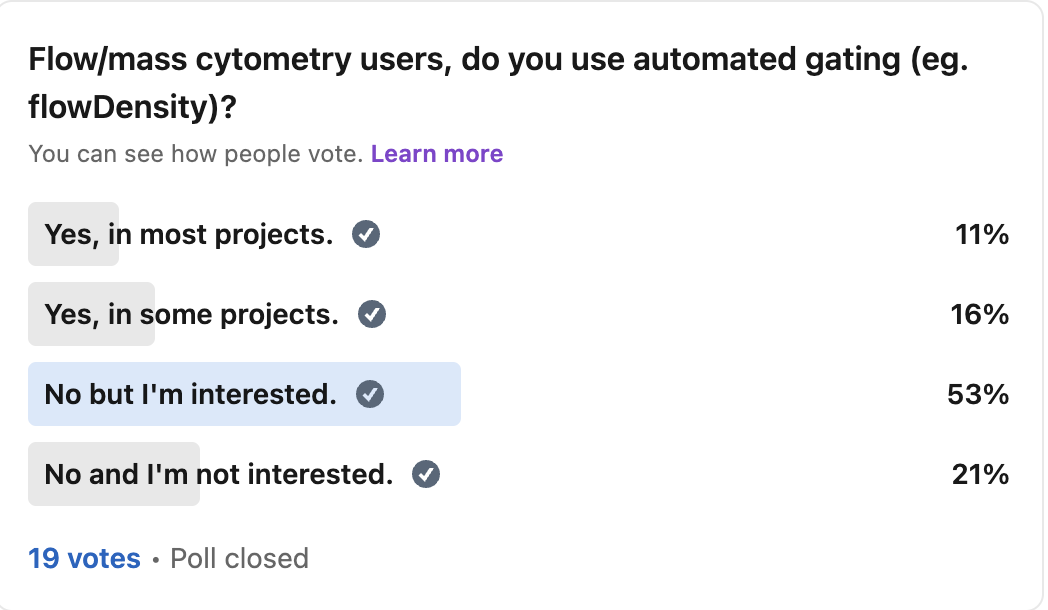

The part that I was drawn to was Figure 3G-I in the paper (see image), which shows the model detecting and flagging artifacts. These are problems with the image that would harm downstream analysis, like tissue folding, blurring, or signal saturation. This model can be inserted into the quality control section of a pipeline and assist in detecting these issues early on. My previous LinkedIn surveys on single-cell analysis suggest that my audience has much more "headache" around quality control than downstream analysis (and it's the same for me), so I think this is definitely for you.
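If you benchmark a model like this against something simpler, one crude rule-based baseline for signal saturation is the fraction of pixels in each patch sitting at the detector's maximum value. The sketch below assumes 16-bit single-channel patches (maximum 65535) and a 5% threshold, both arbitrary choices here; it is not part of KRONOS.

```python
def saturation_fraction(pixels_2d, max_value):
    """Fraction of pixels in a single-channel patch that sit at the detector maximum."""
    pixels = [p for row in pixels_2d for p in row]
    return sum(p >= max_value for p in pixels) / len(pixels)


def flag_saturated(patches, max_value=65535, threshold=0.05):
    """Ids of patches whose saturated-pixel fraction exceeds `threshold` (both are assumptions)."""
    return [pid for pid, pixels in patches.items()
            if saturation_fraction(pixels, max_value) > threshold]


# Made-up 16-bit patches: p2 has half of its pixels pinned at 65535.
example_patches = {
    "p1": [[100, 200], [300, 400]],
    "p2": [[65535, 65535], [500, 600]],
}
print(flag_saturated(example_patches))  # ['p2']
```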

Why I take this seriously:

While I have yet to use this model myself, I spoke directly about it with one of the corresponding authors, Sizun Jiang, whom I have known since my grad school days. He told me that the model is becoming a mainstay in current analysis pipelines in the lab, and is being used to augment the analysis of previous datasets and ongoing studies. In other words, there is skin in the game here: the better the model, the better his lab's research output, which leads to better publications and more meaningful clinical discoveries, which leads to more grant funding and talent to work on these key questions, and so forth.

The big picture:

If you are a researcher or a leader, you should at least try these models out and get a feel for how they work. Benchmark them against existing analysis pipelines. If you've seen my other posts on foundation models, you'll know that this field is growing fast in single-cell. Now these models are being developed for spatial.

You'll also know my hypothesis that given the parameter size of the foundation models right now, they may still be equivalent to GPT-2 on the LLM side. Things like larger model architectures or richer training data (e.g. more tissue types, multi-omics) could lead to a "GPT-3 moment." So I would get familiar with these models before we hit this inflection point.

What I need from you:

Let me know what the most absolutely annoying aspects of spatial QC are for you. Be specific. If I'm going to use this model and similar for my spatial work, I want to direct it toward the nastiest issues and take it to its limits.

comment

The KRONOS pre-print is here: https://www.arxiv.org/pdf/2506.03373

The GitHub is here: https://github.com/mahmoodlab/KRONOS

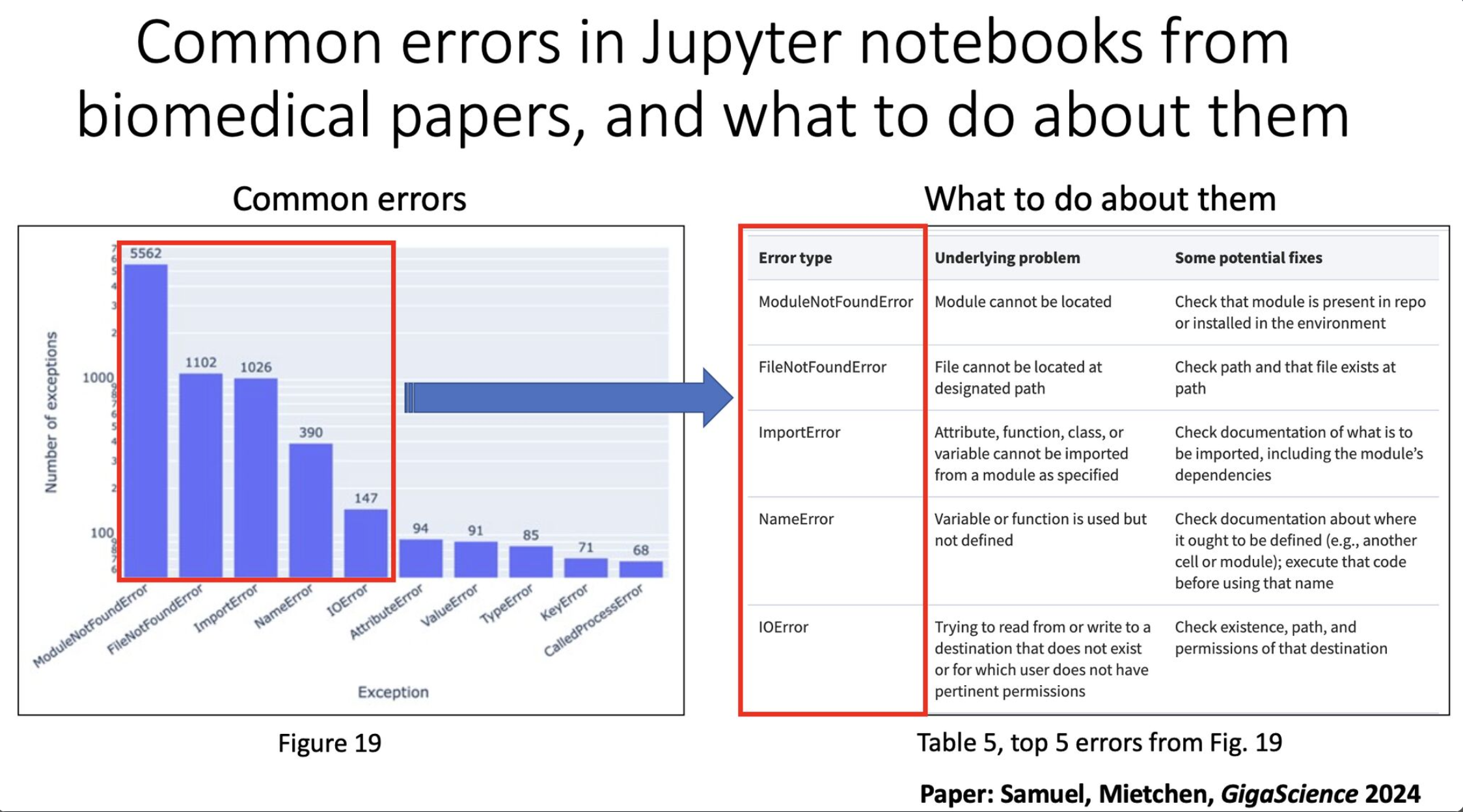

Reproducibility of Jupyter notebooks from biomedical publications

In light of recent work I am doing that requires me to reproduce results from GitHub repos associated with papers (eg. foundation models), I wanted to highlight a paper by Sheeba Samuel and Daniel Mietchen that discusses reproducibility of Jupyter notebooks associated with the biomedical literature (peer reviewed papers, not pre-prints). The results are nothing to be proud of.

The authors looked at 27,271 Jupyter notebooks across 2660 GitHub repos linked from 3467 publications.

Specifically, the authors looked at:

- 22,578 Jupyter notebooks written in python. Of these:
  - 15,817 had dependencies declared. Of these:
    - 10,388 had dependencies that could be installed successfully. Of these:
      - 1203 notebooks ran without any errors. Of these:
        - 879 produced results identical to those reported in the original notebook, and
        - 324 produced results that differed from those reported in the original notebook.

In other words, of the 22,578 python notebooks, 5.3% ran without errors, and 3.9% produced results identical to those reported in the original notebooks.
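The percentages are easy to recompute from the counts in the list above. This small sketch treats the 22,578 python notebooks as the starting pool and reports what fraction of that pool survives each stage.

```python
# Counts as listed above (python notebooks only).
stages = [
    ("python notebooks", 22578),
    ("dependencies declared", 15817),
    ("dependencies installed", 10388),
    ("ran without errors", 1203),
    ("identical results", 879),
]


def funnel_percentages(stages):
    """Percentage of the starting pool that survives each stage."""
    total = stages[0][1]
    return {name: 100 * count / total for name, count in stages}


for name, pct in funnel_percentages(stages).items():
    print(f"{name:<24}{pct:6.1f}%")
```

The last two rows come out to 5.3% and 3.9%, matching the figures above.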

One thing (of many) that the authors bring up, and what struck me here, is that the results suggest that the available code had little bearing on the peer review process. And perhaps it should have.

From a practical standpoint, I've assisted in peer review, and I understand that the reviewers simply don't have time to dig into the code themselves. So there should probably be ways to make this easier.

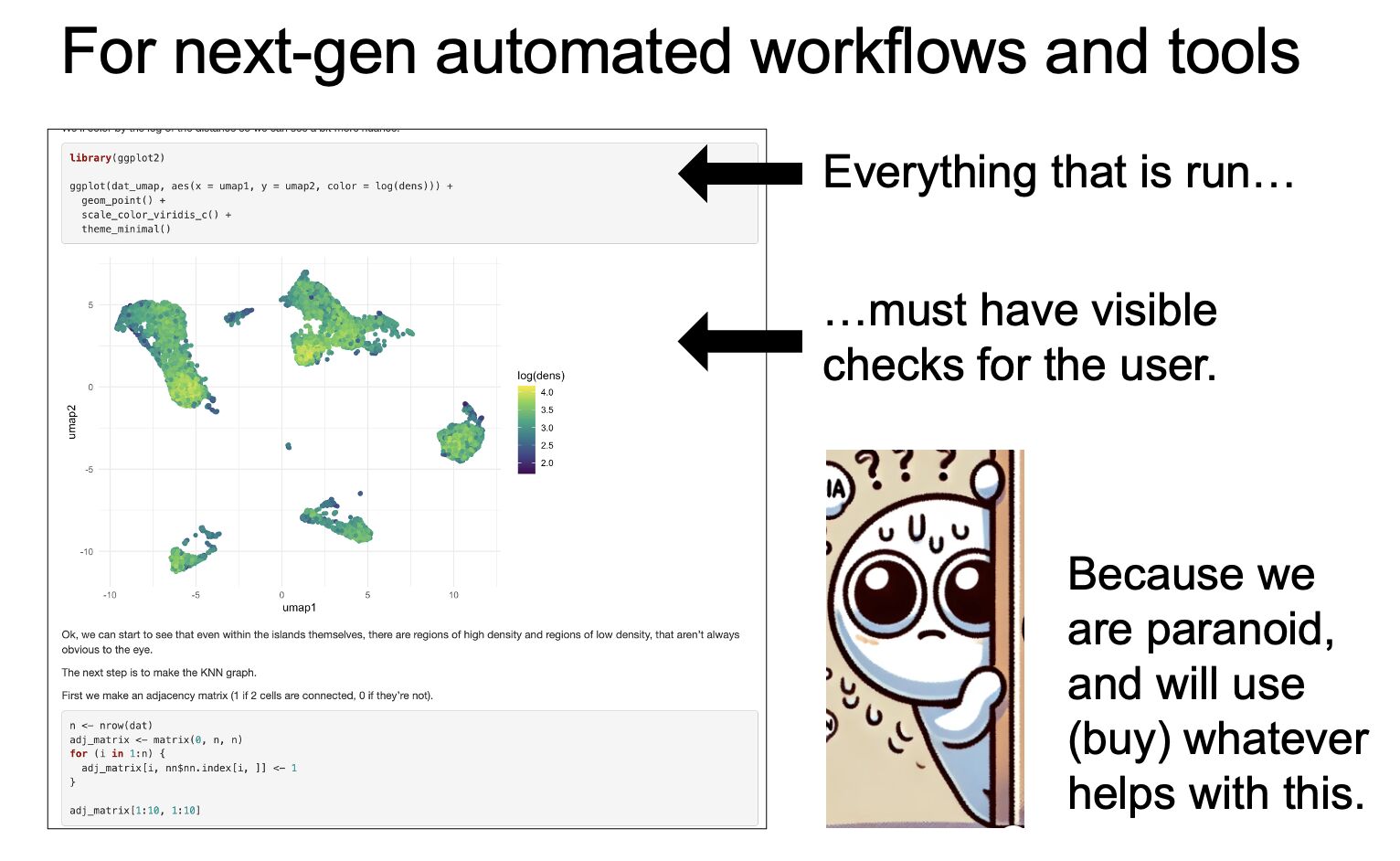

I think ensuring reproducibility of code in papers could be something that automated tools could do or help do down the line. The methods section of the paper is a testament to this. Given the current "agentic" direction AI is going, this would be an interesting use case to either aid in the peer review process, or be used by the authors themselves to ensure reproducibility at every step of the process.

I'll note that I use R heavily, and therefore use R Markdown more so than Jupyter notebooks, and I hypothesize that there are similar issues there. An important observation from Figure 19 of the paper (attached image, left side) is that the majority of problems were ModuleNotFoundError. This suggests that dependency issues cause a lot of the reproducibility problems, something that would generally not surprise python users. R is not without its problems in this regard, but this is especially notorious in python.
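Since ModuleNotFoundError dominates, one cheap pre-flight check is to list the modules a notebook imports and see which ones the current environment can't find. The sketch below is an illustration, not a tool from the paper: it parses code cells with `ast`, skips IPython magics and shell escapes, and asks `importlib.util.find_spec` whether each top-level module is available. Import names don't always match package names (e.g. `sklearn` comes from the scikit-learn package), so treat the output as a hint.

```python
import ast
import importlib.util


def notebook_imports(notebook):
    """Top-level module names imported in a notebook's code cells (notebook = parsed .ipynb JSON)."""
    modules = set()
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") != "code":
            continue
        source = "".join(cell.get("source", []))  # source may be a string or a list of lines
        # Drop IPython magics (%) and shell escapes (!), which are not valid python syntax.
        lines = [line for line in source.splitlines()
                 if not line.lstrip().startswith(("%", "!"))]
        try:
            tree = ast.parse("\n".join(lines))
        except SyntaxError:
            continue
        for node in ast.walk(tree):
            if isinstance(node, ast.Import):
                modules.update(alias.name.split(".")[0] for alias in node.names)
            elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
                modules.add(node.module.split(".")[0])
    return modules


def missing_modules(notebook):
    """Imported modules the current environment cannot find: likely ModuleNotFoundError sources."""
    return sorted(m for m in notebook_imports(notebook) if importlib.util.find_spec(m) is None)


# A tiny in-memory notebook in the .ipynb layout.
example = {
    "cells": [
        {"cell_type": "markdown", "source": ["# Analysis"]},
        {"cell_type": "code", "source": ["import json\n",
                                         "import not_a_real_package_xyz as nrp\n",
                                         "%matplotlib inline\n"]},
        {"cell_type": "code", "source": "from os.path import join\n"},
    ]
}
print(missing_modules(example))  # ['not_a_real_package_xyz']
```

To run it on a real notebook, load the file with `json.load` and pass the resulting dictionary to `missing_modules`.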

If you are a biologist interested in how to ensure reproducibility in your code, please let me know. My friends and I have been through enough of this that I have things to say. If enough people are interested, I'll make a more in-depth write-up.

Until then, be sure to use virtual environments (I use renv if in R), and in python be sure to run "pip freeze > requirements.txt."

The link to the paper is in the comments. You should read it. There are 30 figures and 5 tables. In the "implications" section they bring up nine talking points (and the peer review bit above is implication 2).

That's all for now. Happy new year everyone.

comment

The link to the paper is here: https://academic.oup.com/gigascience/article/doi/10.1093/gigascience/giad113/7516267#493978474

And thanks to Mike Leipold for finding this paper and sending it over.

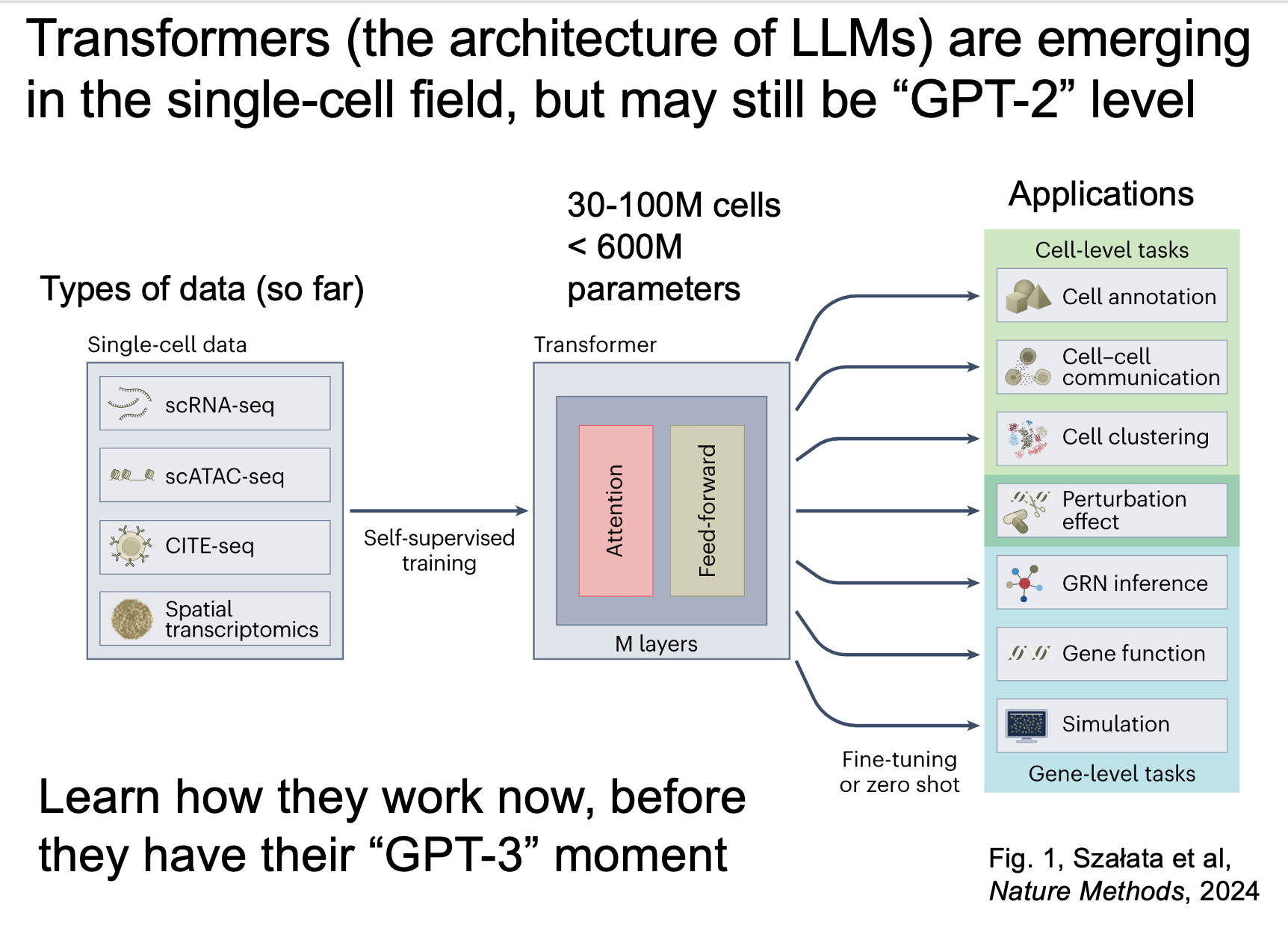

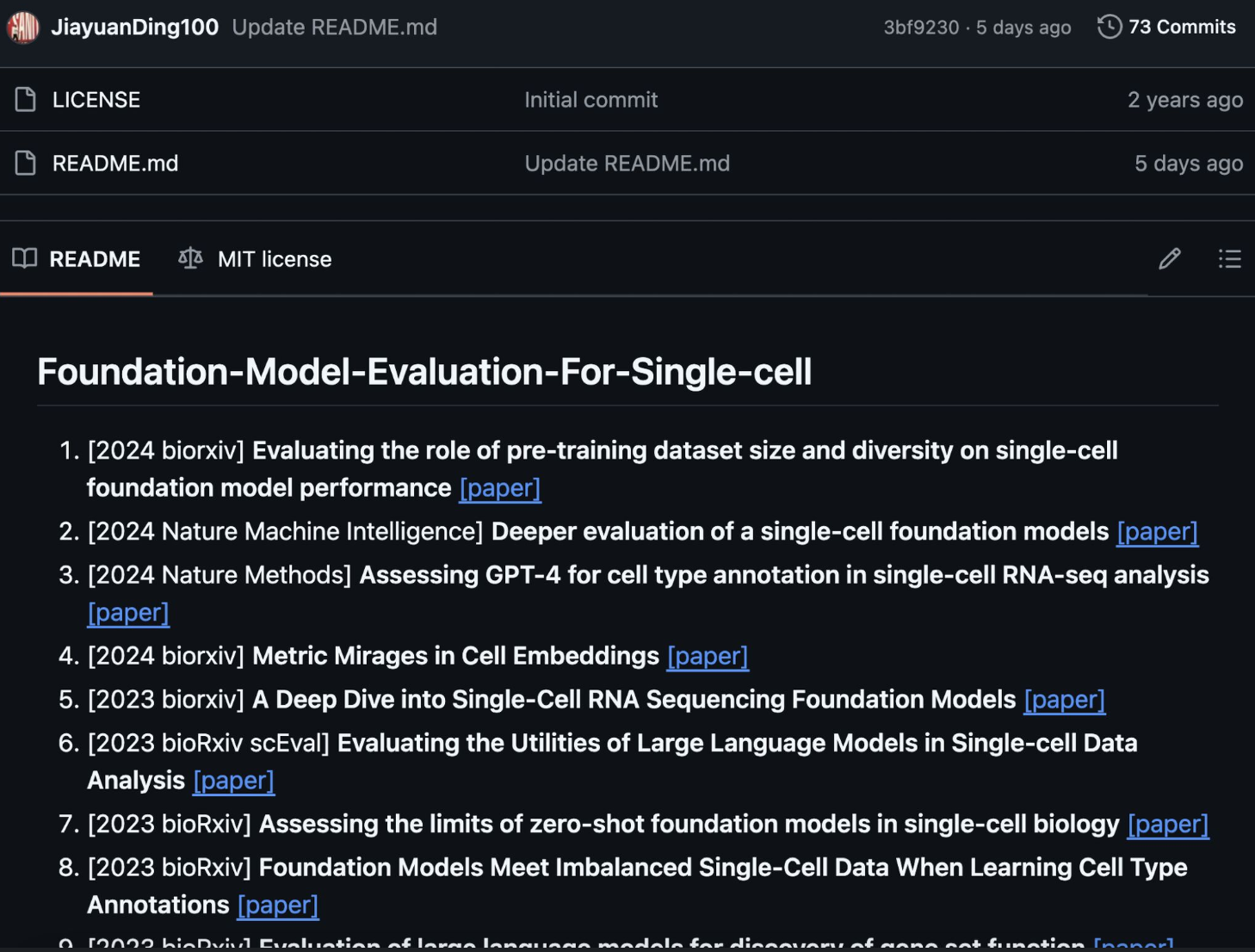

Review on single cell foundation models

Transformer-based foundation models (the stuff of LLMs) are slowly working their way into the single-cell literature. Here is what to know and what to do about it.

For this post, I draw from a neutral review from Artur Szalata and colleagues (last author: Fabian Theis) on the topic, and additional time I have spent testing these models myself. Below are three main points from the paper, and my take on each of the points, followed by a take-home message to make all of this actionable.

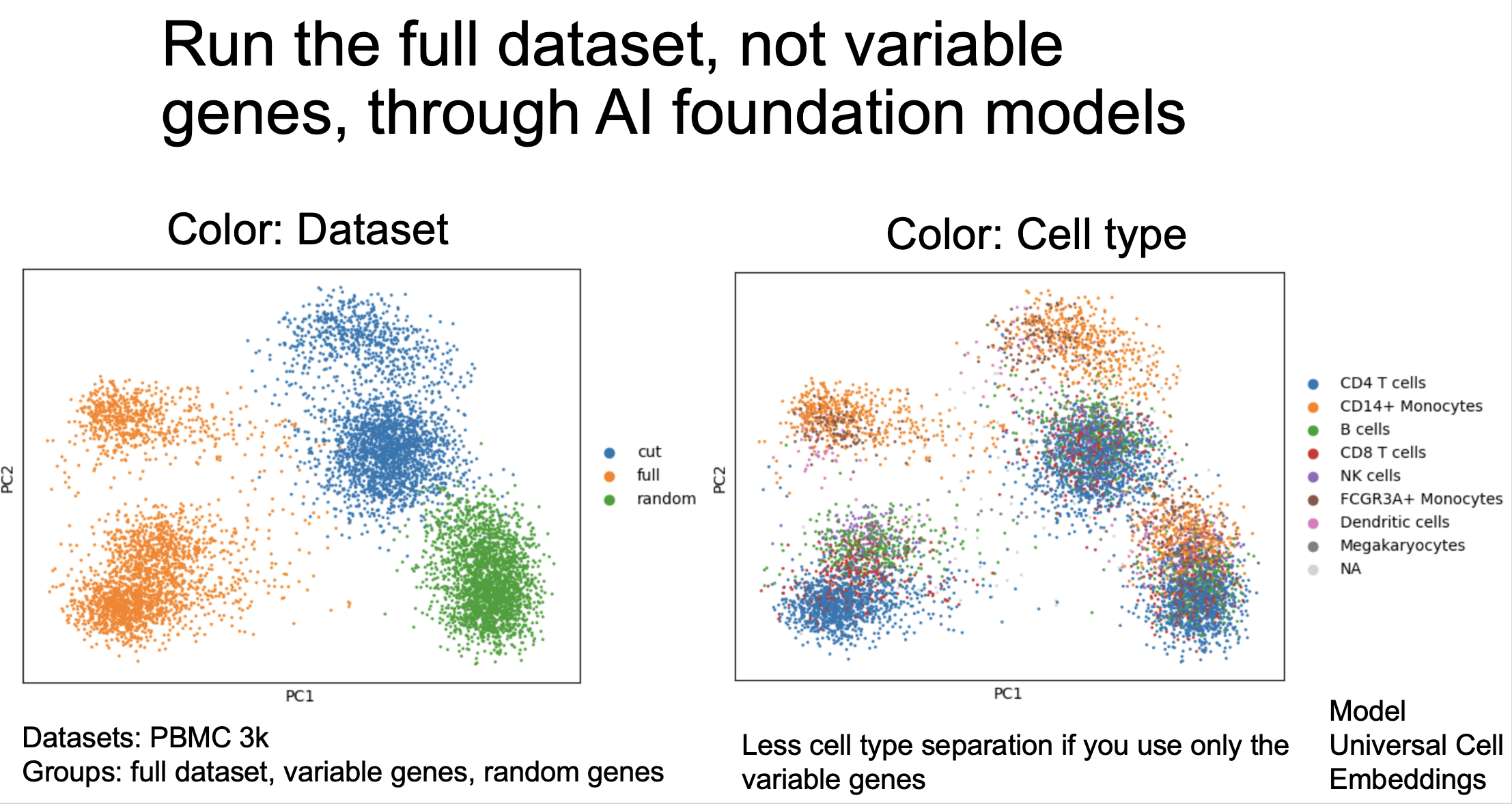

These models are still quite small. Table 1 shows that most of the models reviewed were trained on 30-100 million cells, which translates to hundreds of millions of parameters. Transformer models in other fields are well into the hundreds of billions of parameters (GPT-3 was 175B).

My take: the single-cell models here might still be analogous to GPT-1/2, where they show some promise but the full potential is still down the road.

These models are multi-purpose tools with many applications. These include cell annotation, gene function prediction, perturbation prediction, and inferring gene regulatory networks, among others.

My take: once these models have their GPT-3/4 moment, there will be many new things for us to play with and integrate into our workflows.

There are applications that are still more suited for simpler solutions. An example of this was scTab, a non-transformer model that outperformed scGPT (a transformer model) in cross-organ cell type integration.

My take: from a practical standpoint, I try the simpler solutions first, but in this context, later models trained on more cells could prove to be superior. So I'm keeping tabs on this.

I remember when I got early access to GPT-3 in the fall of 2021 (a year before ChatGPT), experimenting with it quite a bit, and simply making sure I was familiar enough with it that I could rapidly adopt it if it got any better. Now, I am spending time working with some of these available foundation models to see what they can do in my hands.

You can get access to these models too by going to the Chan Zuckerberg Initiative's collection of census models for single-cell (link in comments). They provide links to the model pages and sample embeddings that the models produced.

The take home message for leaders and scientists:

Know how these models work, have some of these tools in your arsenal, and test what kinds of inputs they take and what kinds of outputs they can produce. Keep tabs on their development. Take their results with a grain of salt, but expect them to get better as the research around these models improves and the number of parameters possible per model increases.

The review and a markdown of me interrogating one of these models is linked in the comments.

If any of you are currently tinkering at the interface between single-cell/spatial and transformer models, please let me know. I hope you all have a great day.

comment

The review by Artur Szalata and colleagues can be found here: https://pubmed.ncbi.nlm.nih.gov/39122952/

A page from CZI giving you starter code for a number of so-called "census models" which are essentially cells that have been run through transformer models, giving you access to the embedding: https://cellxgene.cziscience.com/census-models

Me interrogating the geometry of a foundation model embedding by trying to find its "center" and "outer edges" and realizing that UMAP does not quite capture this. https://tjburns08.github.io/human_universal_cell_embeddings.html
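For those curious about the "center" and "outer edges" idea, one simple way to approach it (not necessarily what the linked markdown does) is to compute the centroid of the embedding in its original high-dimensional space and rank cells by their Euclidean distance to it. The toy 2-D points below are made up; real embeddings have many more dimensions, and a UMAP of them may not preserve this center-to-edge ordering.

```python
import math


def centroid(points):
    """Coordinate-wise mean of a list of equal-length points."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def center_and_edges(points, k=1):
    """Indices of the k points closest to and farthest from the centroid (Euclidean)."""
    c = centroid(points)
    dists = [math.dist(p, c) for p in points]
    order = sorted(range(len(points)), key=lambda i: dists[i])
    return order[:k], order[-k:]


# Made-up points: a small cluster plus one far-away cell.
points = [[0, 0], [1, 0], [0, 1], [1, 1], [0.5, 0.5], [5, 5]]
print(center_and_edges(points))  # ([3], [5])
```

Note that the outlier at index 5 pulls the centroid toward it, so the "most central" point is [1, 1] rather than [0.5, 0.5]; that kind of surprise is exactly why it helps to look at the geometry directly.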

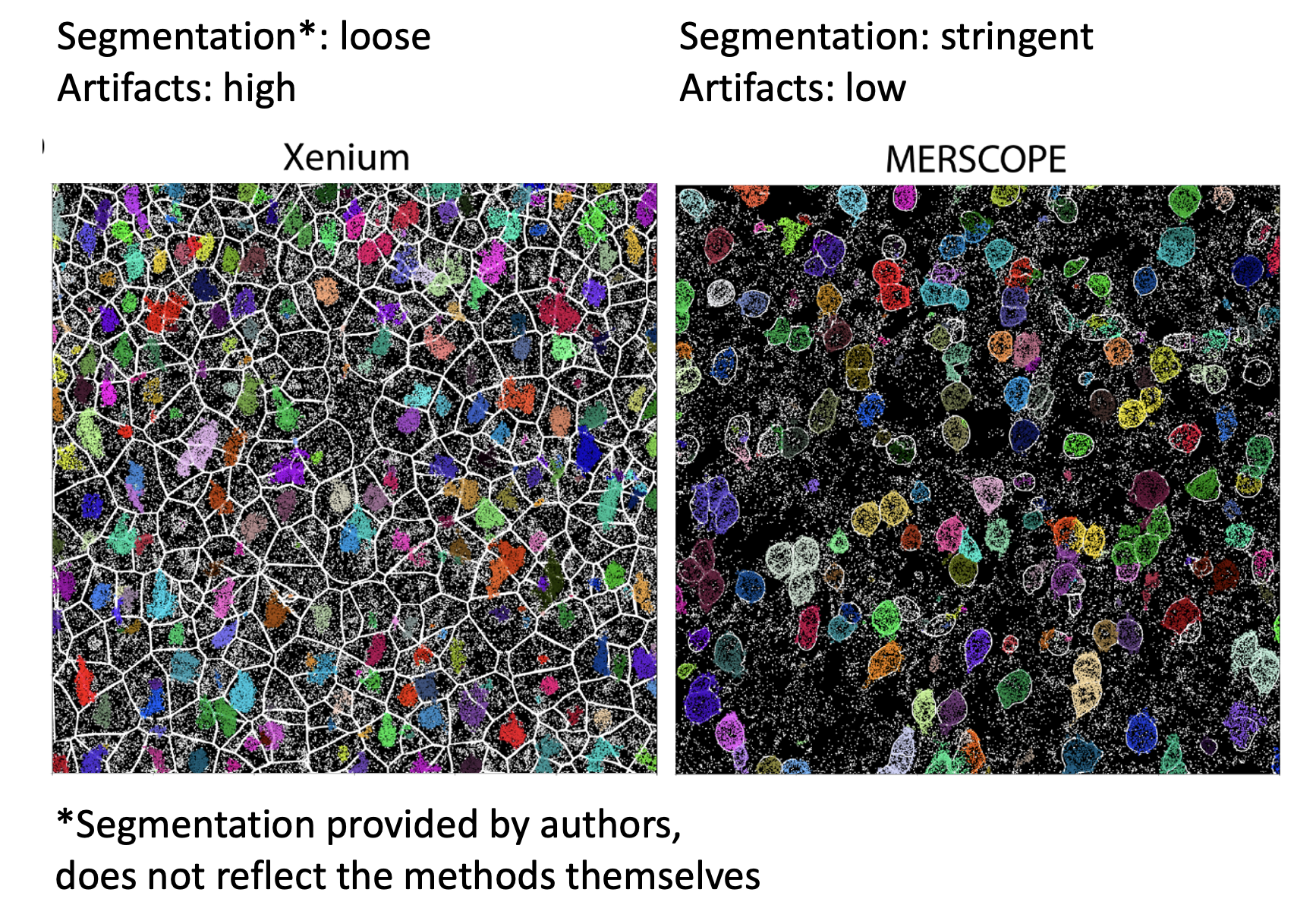

Cell segmentation size matters for spatial transcriptomics

For spatial transcriptomics data, cell segmentation size is critical. I recently read a 2024 preprint from Austin Hartman and Rahul Satija about benchmarking in-situ gene expression profiling methods (eg. 10x Xenium). There's a detail in here I was struck by:

One of the issues with making comparisons between spatial methods was that the default cell segmentation provided by the authors of the datasets ranged from stringent (only cell boundaries you're sure of: tightly demarcated and small) to lenient (something like a Voronoi tessellation, with loose and large boundaries). This can be seen in the image below, which comes from Figure 3 (link in comments).

The differences in cell segmentation led to artifacts in gene expression, as measured by what they call the mutually exclusive co-expression rate (MECR). This captures cases where genes that are biologically not expressed together in the same cell nonetheless both show up as expressed. They had to re-segment the cells themselves in order to move forward with the benchmarking.
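A minimal sketch of the idea behind MECR for a single gene pair, assuming it is computed as the number of cells expressing both genes divided by the number of cells expressing at least one (check the paper's methods for the exact definition, thresholds, and how gene pairs are chosen). The counts are made up to mimic transcripts from a neighboring cell bleeding into a loosely segmented one.

```python
def mecr(expr_a, expr_b, threshold=0):
    """Co-expression rate for one gene pair assumed to be mutually exclusive.

    expr_a, expr_b: per-cell counts for the two genes (same cell order).
    Returns (#cells expressing both) / (#cells expressing at least one).
    """
    a = [x > threshold for x in expr_a]
    b = [x > threshold for x in expr_b]
    both = sum(x and y for x, y in zip(a, b))
    either = sum(x or y for x, y in zip(a, b))
    return both / either if either else 0.0


# Made-up counts for two mutually exclusive genes across five cells.
tight_a, tight_b = [5, 0, 3, 0, 0], [0, 4, 0, 2, 0]  # stringent segmentation
loose_a, loose_b = [5, 1, 3, 0, 1], [1, 4, 0, 2, 0]  # lenient segmentation picks up spillover
print(mecr(tight_a, tight_b), mecr(loose_a, loose_b))  # 0.0 0.4
```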

This means two things. The first is when you're comparing spatial datasets across methods (eg. Xenium vs MERSCOPE), you need to re-segment the cells with the same method and stringency first. The second is that you need to pay close attention to the stringency of cell segmentation when you're doing any sort of spatial analysis, as it has been shown that artifacts can show up in this step.

Do your biological conclusions change if you run the pipeline with loose vs stringent cell segmentation?

The bigger picture is that in bioinformatics (and data analysis at large), the devil is in the details. It's all the little things you have to do to make sure the data are ready for the clustering and whatever else you're going to do.

If you're in leadership, make sure your team is spending sufficient time on the early stages of data analysis (eg. QC, cell segmentation, batch effect finding, data integration). These are the "headache" steps that seem to delay the insight generation steps. As Marcus Aurelius put it, what stands in the way becomes the way.

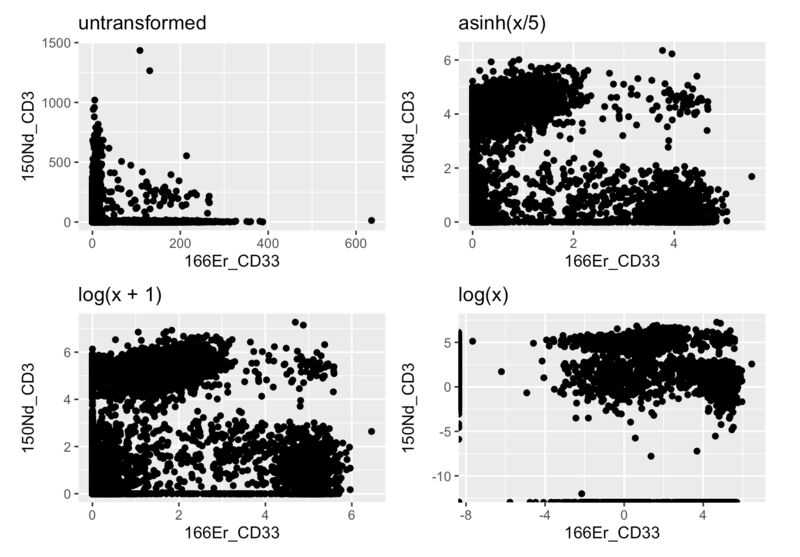

If you're learning bioinformatics, spend as much time as you can really understanding the raw data. One way to do this is to try to analyze your data outside of any standard package, or take a page from molecular biology and KO (remove) a step in the pipeline and see what happens (eg. what happens to the clustering and UMAP if you don't log or asinh transform the data).
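Here is a small example of that kind of knockout experiment: find a cell's nearest neighbor with and without the asinh transform and see whether the answer changes. The two-marker intensities are made up, and cofactor 5 (a common choice for CyTOF; flow data often uses a larger cofactor such as 150) is an assumption. On the raw scale, a 100-count difference on the bright marker outweighs a 40-count difference on the dim one; after asinh, the dim-marker difference dominates.

```python
import math


def asinh_transform(cell, cofactor=5.0):
    """arcsinh(x / cofactor) per marker; cofactor 5 is a common choice for CyTOF."""
    return [math.asinh(x / cofactor) for x in cell]


def nearest(query, cells, transform=None):
    """Name of the cell closest to `query` (Euclidean), optionally after a transform."""
    f = transform or (lambda cell: list(cell))  # no transform = the "knockout" condition
    q = f(query)
    return min(cells, key=lambda name: math.dist(q, f(cells[name])))


# Hypothetical intensities: (bright marker, dim marker).
query = (1000, 0)
cells = {
    "b": (1100, 0),   # same phenotype, slightly brighter on marker 1
    "c": (1000, 40),  # same on marker 1, but positive for marker 2
}
print(nearest(query, cells))                   # c  (raw scale)
print(nearest(query, cells, asinh_transform))  # b  (asinh scale)
```

A neighbor that flips like this will also shift the KNN graph that clustering and UMAP are built on, which is the effect you're looking for when you knock out the step.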

As the datasets and methods get more complicated, these little details will become more important. I hope you all have a great day.

Link to paper.

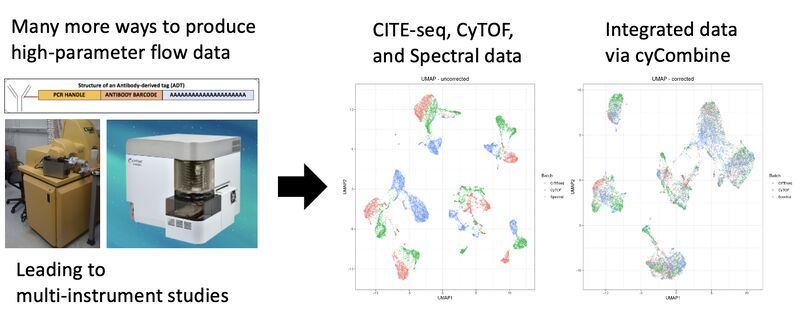

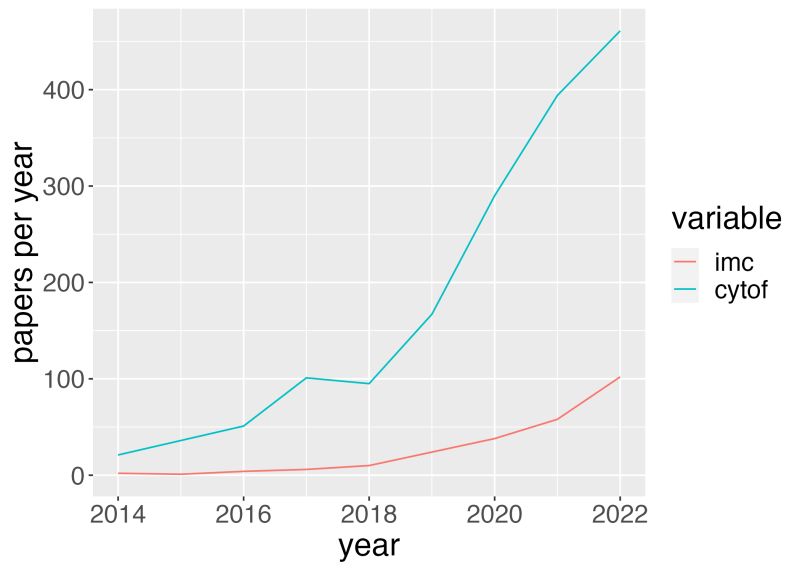

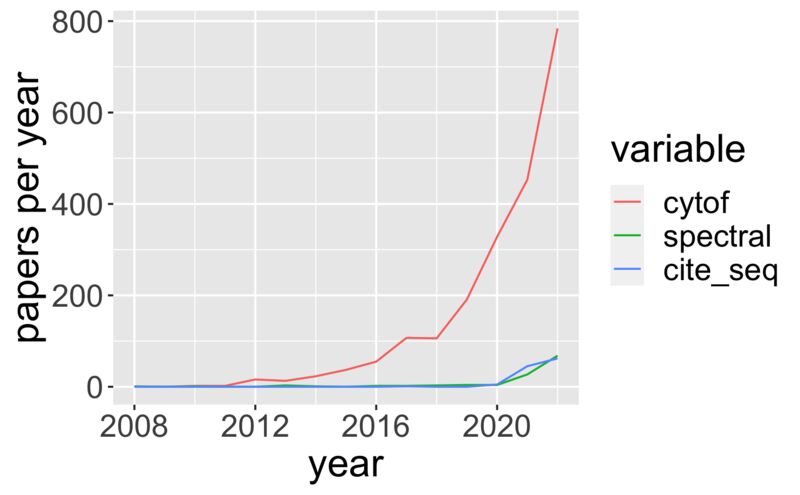

Data integration using cyCombine

Single-cell protein data can take many forms: flow cytometry (spectral or otherwise), mass cytometry, CITE-seq, or protein-based imaging after cell segmentation. Not to mention the multitude of machines (eg. spectral cytometers from different companies, or CyTOF 2 vs CyTOF XT). There will inevitably be a need to integrate these datasets across modalities to derive actionable insights, and efforts to do so.

Accordingly, the Single Cell Omics group at Technical University of Denmark (DTU) has tackled this problem with a method they call cyCombine. With this method, they are able to integrate a CITE-seq, a spectral flow, and a CyTOF dataset. They spell it out in a markdown (link in comments) so you can try it yourself.

The UMAPs in the images show that the data, otherwise separate, now sit on top of each other. There are further metrics for evaluating the correction in the markdown (eg. earth mover's distance), and histogram visualizations. If I were using this, I'd want to try gating on the concatenated data, with the points in the biaxials colored by each method.
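For intuition on the earth mover's distance in one dimension: it measures how much "mass" you have to move to turn one marker distribution into another. For two samples of equal size, it reduces to the mean absolute difference between the sorted values, which is what this sketch computes on made-up values. cyCombine's own implementation may differ (e.g. binning); for unequal sample sizes, `scipy.stats.wasserstein_distance` handles the general case.

```python
def emd_1d(sample_a, sample_b):
    """Earth mover's (Wasserstein-1) distance between two equal-size 1-D samples."""
    if len(sample_a) != len(sample_b):
        raise ValueError("this sketch assumes equal sample sizes")
    # For equal sizes, the optimal plan pairs the i-th smallest value of one sample
    # with the i-th smallest value of the other.
    a, b = sorted(sample_a), sorted(sample_b)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Made-up transformed values for one marker, measured on two platforms.
cytof_like = [0.1, 0.5, 2.0, 2.2]
flow_like = [0.3, 0.7, 2.1, 2.6]
print(round(emd_1d(cytof_like, flow_like), 3))  # 0.225
```

After a successful batch correction, you would expect this number to shrink for each marker.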

To sum things up, there is good work being done in this space, and we should be paying attention because this type of work is going to become much more important as high-dimensional cytometry and cytometry-like methods and instrument types increase.

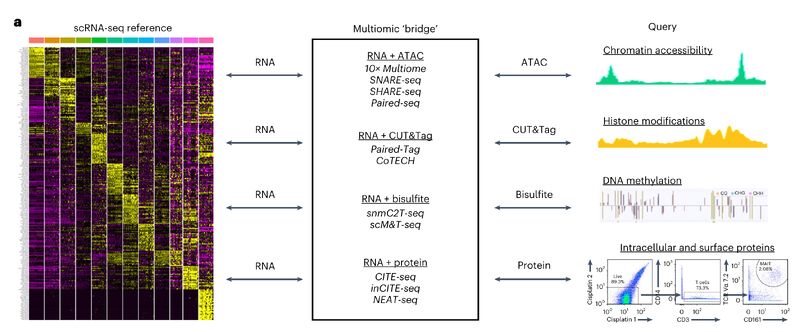

Bridge integration

Leaders using single-cell tech: do you have data across multiple modalities (eg. flow/CyTOF and single-cell sequencing) that you want to combine? Are you making large cell "atlases" internally or externally? Then you should consider integrating these datasets with bridge integration, a new method that came out last year. How does it work?

Say you have a CyTOF dataset, and a single-cell sequencing dataset. Both are PBMCs. If you have a CITE-seq PBMC dataset (both RNA and protein), then you can use that as a multiomic "bridge" to integrate the two datasets. This is one reason why getting your team to produce a CITE-seq dataset or two might be valuable in the long term.

The image attached is a schematic from Hao et al. (link in comments) that shows possible combinations of multimodal integration that go beyond RNA + protein. The method is available in Seurat (in other words, it's standardized and accessible for comp bio). Your team should look critically at Figures 5 and S7 in the paper and the text that references them (the page immediately after Figure 5), as they show a scRNA-seq + CyTOF integrated dataset using this method, with the text describing sanity checks.

Even if you don't use this method, you should note the emerging trend of integration across modalities, which goes along with the emergence of single-cell multi-omics. Importantly, the authors express interest in doing this with spatially resolved data. They specifically mention CODEX (paragraph 4, discussion section), suggesting that a CODEX + scRNA-seq integration might be a current PhD/Postdoc project in the lab.

Links to the paper and Seurat code in the comments below.

Flow/CyTOF users could take a page from the best practices in single-cell sequencing

Life science leaders using flow/mass cytometry: do you want to know where the best practices in data analysis will be in 3-5 years (if done right)? As a flow/CyTOF native, I've been looking to single-cell sequencing for this. Here are 3 things that I think this community has gotten right, that the flow/CyTOF world (that I’ve been part of since 2012) could really benefit from:

A dedicated open-source community with well-maintained packages.

On the R side, Seurat is extremely useful, constantly evolving as new methods develop, and well-maintained by the Satija Lab. On the Python side, there is scverse, a collection of tools that covers everything from single-cell sequencing analysis (scanpy) to spatial omics (squidpy).

My recommendation: we model our ecosystem after scverse (bring it all together in one place) and our "end to end" packages after Seurat. Those working with ISAC and similar organizations should fund individuals dedicated to this work. I think with efforts like CyTOForum, the community is in place to do this kind of thing.

A focus on standards and benchmarking

There's a "single cell best practices" consortium that has a huge, free Jupyter Book showing you what to do with scverse and how. Furthermore, there is a lot of benchmarking work happening, e.g., with the scib package from the Theis Lab, which lets you run your own benchmarks on your data. Long-time flow/CyTOF users will remember the uncertainty around which clustering algorithm to use, which didn't clear up until Lukas Weber and Mark Robinson (from the sequencing world) did a benchmarking study and showed that it was FlowSOM all around, with X-shift for rare cell detection.

My recommendation: we incentivize benchmarking studies (e.g., the FlowCAP project). Especially given the advent of spectral flow, we are going to need an efficient way to redo or build on our prior work as the tools and data evolve.

Integration between commercial and open-source methods.

10x Genomics has a UI for its Xenium data. They also have a page titled "Continuing your journey after Xenium analyzer" listing relevant open-source tools that can help you analyze your data further. Similarly, on the flow/CyTOF side, Standard BioTools promotes the Bernd Bodenmiller Lab's HistoCat on their page as something to use beyond their UI for IMC data.

My recommendation: we build our commercial tools with our open-source ecosystem in mind. I think Omiq's modular design and ability to quickly integrate the latest open-source tools into its interface is a great example.

I'll acknowledge that there are differences between the fields that may affect what has been and can be done, like open-source community engagement levels, available funding, and the relationship between open-source and commercial solutions in each domain. However, given how much the single-cell sequencing community got right, it can serve as a north star for how we build out our tools from here.

General data analysis

Data analysis posts that I could not otherwise categorize.

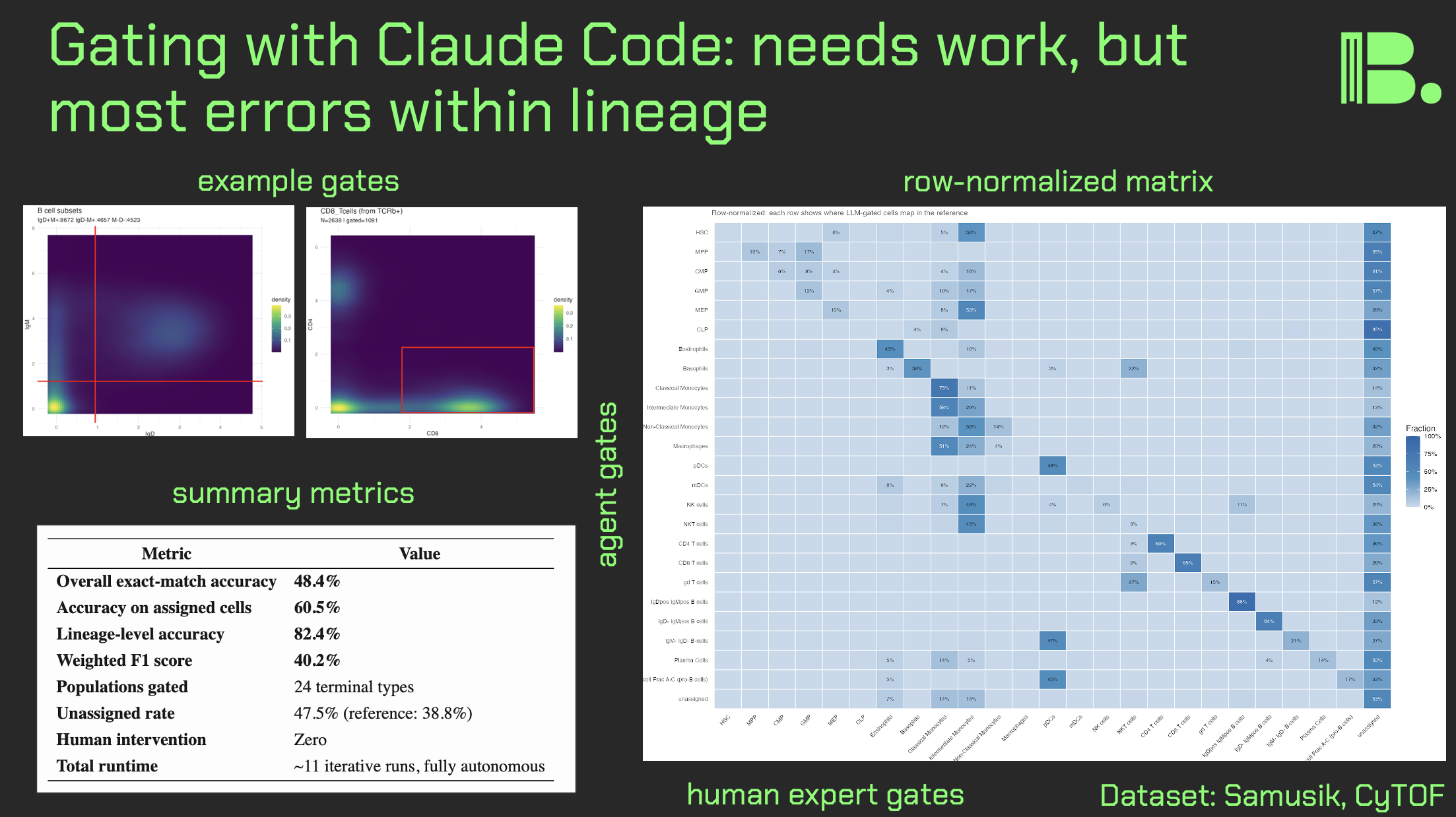

Claude Code experiments for manual gating

Hi friends, here is what happens when I context engineer Claude Code to do manual gating for CyTOF data.

As you know, there has been interest in automated gating for quite some time, as manual gating can be quite laborious (with obvious human intervention for "harder" populations). To this end, I tested agentic AI systems on the task, and iteratively improved them each run by tweaking the context engineering.

So you all can pick up where I left off, here is what I found.

Methods:

I used the Samusik01 dataset (around 80,000 mouse bone marrow cells run through CyTOF). This has an expert manual gating control built in that I was able to use for comparison.

I tried both ChatGPT Codex and Claude Code, but later stuck with Claude Code because it was giving me much better results.

My "context engineering" was a series of documents in a directory that I "pointed" Claude Code to (e.g. please go to prompt[dot]md and follow everything there). Initially it was just a prompt, but later I added a pre-made gating strategy and a quality assurance document (e.g. do it again if >50% of cells are unlabeled) as well.

Results:

If we set the expert manual gates as ground truth, Claude Code labeled about 1 in 2 cells correctly. If we look at mutually assigned cells (labeled by both the LLM and the human), it's closer to 3 in 5. If we look at within-lineage accuracy of mutually assigned cells, it's over 4 in 5 (which makes sense: you wouldn't "oops" miscategorize a monocyte as a pro-B cell).
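The three numbers above measure agreement on different subsets of cells. Here is a minimal sketch of how such metrics could be computed. It assumes each cell carries one expert label and one LLM label, that unassigned cells are marked `"unlabeled"`, that the overall score uses expert-labeled cells as its denominator, and that "within-lineage" means the two labels fall in the same lineage. The lineage map, labels, and function name are hypothetical, and this is not necessarily how the repository computes its numbers.

```python
UNLABELED = "unlabeled"


def gating_agreement(expert, llm, lineage_of):
    """Return (overall, mutual, within_lineage) agreement fractions.

    expert, llm: per-cell labels of equal length.
    lineage_of: dict mapping each population label to a lineage.
    """
    pairs = list(zip(expert, llm))

    # Overall: of the cells the expert labeled, how many got the same label?
    expert_labeled = [(e, m) for e, m in pairs if e != UNLABELED]
    overall = sum(e == m for e, m in expert_labeled) / len(expert_labeled)

    # Mutual: only cells that both the expert and the LLM labeled.
    mutual = [(e, m) for e, m in pairs if e != UNLABELED and m != UNLABELED]
    mutual_acc = sum(e == m for e, m in mutual) / len(mutual)

    # Within-lineage: mutual cells whose two labels share a lineage.
    lineage_acc = sum(lineage_of[e] == lineage_of[m] for e, m in mutual) / len(mutual)
    return overall, mutual_acc, lineage_acc


lineages = {"CD4 T": "lymphoid", "B": "lymphoid", "Monocyte": "myeloid"}
expert = ["CD4 T", "CD4 T", "B", "Monocyte", UNLABELED]
llm = ["CD4 T", "B", "B", UNLABELED, "CD4 T"]
print(gating_agreement(expert, llm, lineages))  # (0.5, 0.6666666666666666, 1.0)
```

The toy example reproduces the pattern in the post: a CD4 T cell called a B cell counts as wrong for the first two metrics but right at the lineage level.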

Adding a quality assurance document that defined specific thresholds got Claude Code to work iteratively. In the final experiment, it iterated 11 times in a loop, adjusting thresholds.
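The QA document effectively turns gating into a loop with a stopping rule: gate, check, adjust, repeat. The sketch below shows that control flow on a single marker with one threshold gate, using the ">50% unlabeled" rule mentioned earlier as the check. Everything here (the one-gate setup, the fixed step size, the function names) is a hypothetical simplification; in the experiments described here, the agent adjusted the thresholds itself.

```python
def gate(values, threshold):
    """Label a cell 'positive' if its marker value passes the threshold."""
    return ["positive" if v >= threshold else "unlabeled" for v in values]


def qa_gating_loop(values, threshold, step, max_unlabeled=0.5, max_iter=20):
    """Re-gate with a loosened threshold until the QA rule passes.

    QA rule: redo the gating if more than `max_unlabeled` of cells
    end up unlabeled.
    """
    history = []
    for iteration in range(1, max_iter + 1):
        labels = gate(values, threshold)
        frac_unlabeled = labels.count("unlabeled") / len(labels)
        history.append((iteration, threshold, frac_unlabeled))
        if frac_unlabeled <= max_unlabeled:
            break  # QA passed
        threshold -= step  # QA failed: loosen the gate and try again
    return labels, history


marker = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
labels, history = qa_gating_loop(marker, threshold=9.5, step=1.0)
for it, thr, frac in history:
    print(it, thr, frac)
# The loop stops at iteration 5 (threshold 5.5, 50% unlabeled).
```

The `max_iter` cap matters in practice: without it, a QA rule that can never be satisfied would loop forever.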

When I added Figure S5 from the X-Shift paper (the visual gating strategy), accuracy increased again. Note that Claude Code can read images, but it cannot yet read videos without breaking them down into a collage of frames (I tried).

There was per-population variance in how accurate the gates were, with lymphoid cells gated more accurately than myeloid cells and stem/progenitors. This suggests population-level tweaks that could be made.

The big picture, for decision makers:

These results are by no means perfect, but they show that there is a path. New models are coming that will in theory improve results, but we should optimize the context engineering now. Internal data will serve as a "moat" in this regard.

One future direction: currently, the gates are made in series in a giant R script. But if we were dealing with a real gating interface (e.g. a bespoke R script, or the API to a SAAS), then Claude Code might be able to adjust the gates in a more "natural" way.

The GitHub for the final experiment is in the comments. I hope you all have a great day.

comment

The GitHub to the most recent experiment is here: https://github.com/tjburns08/claude-cytof-gating

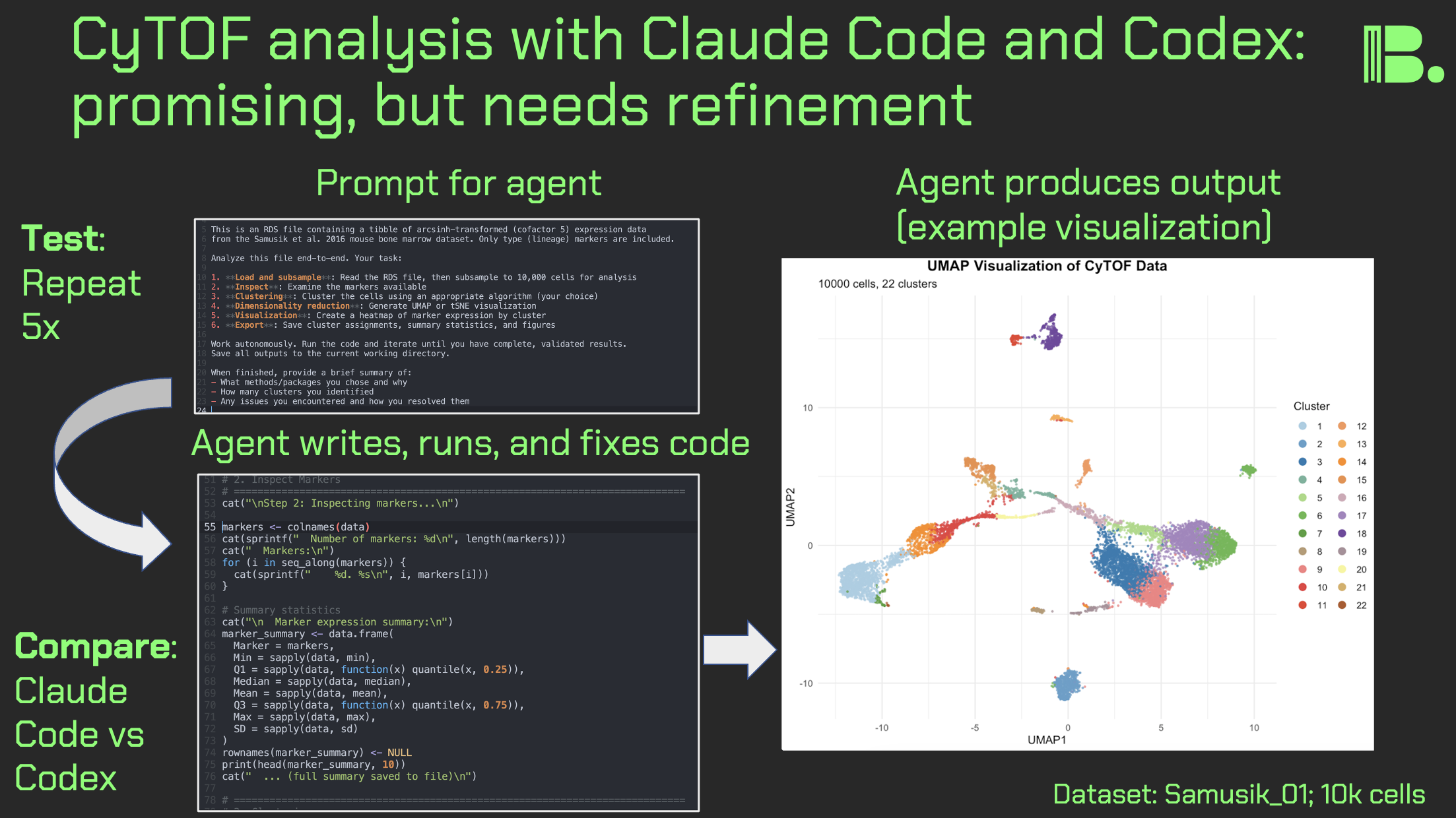

Agentic AI for analyzing CyTOF data

Hi friends, following my previous work on LLM design choices in CyTOF analysis, I re-ran the experiments with agentic coding tools: Claude Code and ChatGPT Codex Xhigh. I prompted each agent to create and execute a CyTOF analysis pipeline from scratch, five times per agent.

Key results:

Execution: All pipelines ran successfully for both agents.

Clustering: Claude Code favored FlowSOM (3/5) over kmeans (2/5). Codex was more varied: FlowSOM (2/5), kmeans (1/5), and PhenoGraph-like approaches via Seurat or igraph (2/5).

Dimensionality reduction: Both used UMAP exclusively, no t-SNE.

Output quality: Claude Code produced sensible UMAPs 5/5 times. Codex had one run whose cluster IDs, colored on the UMAP, looked like they had been put through a blender, suggesting an undetected bug.

Other observations:

- Codex showed more defensive programming (e.g., tryCatch() usage)

- Claude Code provides reasoning traces; Codex doesn't allow for that (though you can copy/paste from the command line interface to get this)

- Claude Code hits token limits much faster despite similar subscription pricing

Discussion:

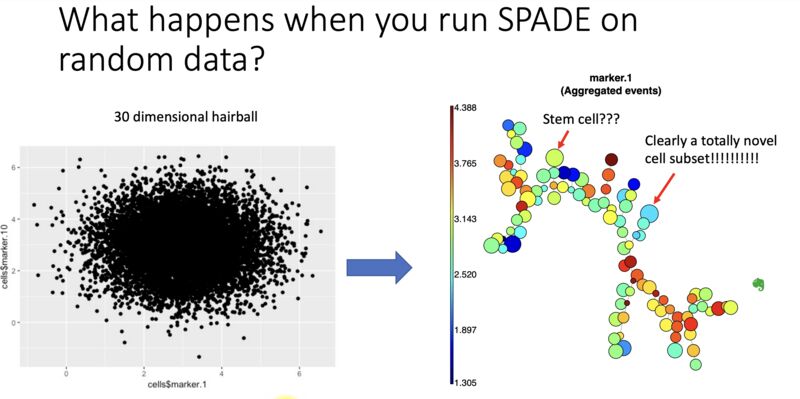

It's promising that these agents can write, execute, and debug code on the fly. Claude Code made more domain-appropriate choices (FlowSOM, not forcing data into Seurat), but I was surprised that either touched kmeans, which is rarely used in the CyTOF literature outside of (in theory) deliberate overclustering workflows like SPADE.

It's interesting that Codex implemented KNN graph + Louvain (essentially PhenoGraph) rather than using PhenoGraph directly.

Key limitation of this work: a single-file dataset. Future work will test larger, more complex data, perhaps pushing agents toward the likes of CATALYST or Spectre. Open questions remain: can an agent discover batch effects unprompted, or flag that "something isn't right"?

The community might benefit from comprehensive context engineering documents for different CyTOF analysis scenarios. I'm working on this now.

For leaders: These agents aren't going anywhere. We need best practices now: prompting toward domain-specific strategies, detecting errors, and keeping human experts engaged where it matters.

Link to the full results in comments. If you're running similar experiments, I'd love to hear from you. Thank you and I hope you all have a great day.

comments

One potential use case for this would be through the CyTOF SAAS products directly (not going to name them, but you know the popular ones). It might be via API access (assuming we figure out the cybersecurity), or via the SAAS products themselves embedding the agents directly into the software. Either way, this is something programmers and non-programmers alike should watch for.

The GitHub link to the project is here: https://github.com/tjburns08/cytof_agentic_prompting_experiments

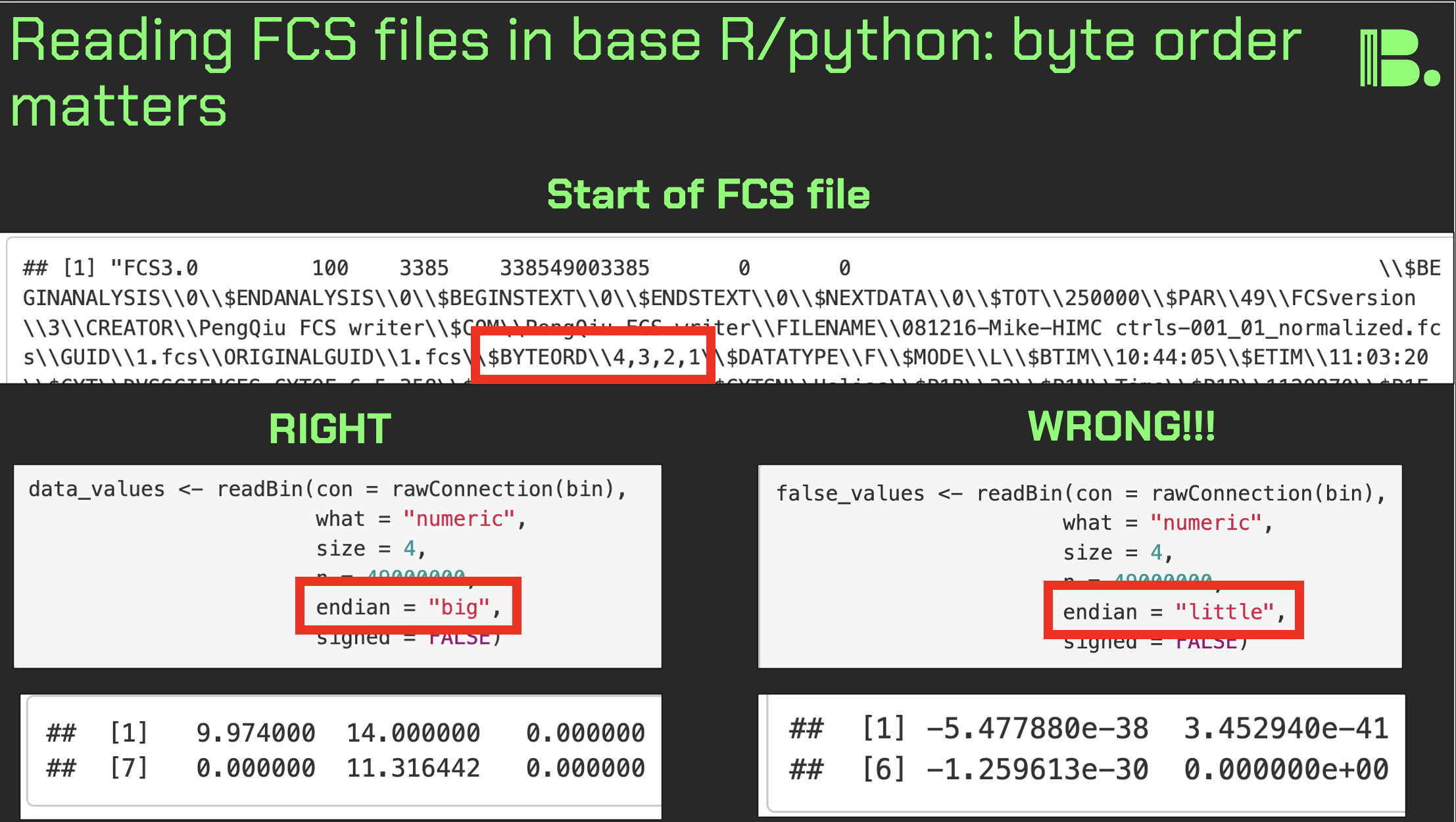

Byte order matters when reading in FCS files

FCS files, output by flow and mass cytometers, are typically read into R or Python for analysis. If you're going to do this without the likes of flowCore, you need to take care to read the bytes in the DATA section the right way, or the matrix will be gibberish.

Multi-byte numbers (e.g. 32-bit ints) can be stored in computer memory in two ways, known as little-endian and big-endian (simplified explanation below). The key thing here is that some computers do it one way, and some do it the other. So the byte order used by the flow cytometry acquisition computer might not match the one your computer uses.

There is a keyword in the TEXT section, $BYTEORD, that tells you which one was used.

$BYTEORD/1,2,3,4/ is little-endian. This is where the least significant byte would be stored first.

$BYTEORD/4,3,2,1/ is big-endian. This is where the most significant byte would be stored first.

Here, most significant means highest "place" value. So in decimal, the number 135 would have 1 as the most significant digit (the hundreds place), and 5 as the least significant digit (the ones place).

Let's keep it simple with standard decimal, and say the number 135 had to be stored in memory in three boxes [ ][ ][ ]. In big-endian, it would be stored as [1][3][5], which makes intuitive sense. But little-endian would store it as [5][3][1]. So if your computer picks up [1][3][5] stored in big-endian, and assumes it's little-endian, it will read it as 531. And so it is with your flow cytometry data matrices.
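Here is the same idea with real bytes, using Python's standard `struct` module: the integer 135 packed as a 4-byte big-endian unsigned integer, then read back with the correct and the wrong byte order. The variable names are illustrative.

```python
import struct

value = 135

# Pack as a 4-byte unsigned int, most significant byte first (big-endian).
big_endian_bytes = struct.pack(">I", value)
print(big_endian_bytes.hex())  # 00000087

# Read back with the matching byte order...
correct = struct.unpack(">I", big_endian_bytes)[0]
# ...and with the wrong one (little-endian), which treats 0x87 as the
# most significant byte instead of the least significant one.
wrong = struct.unpack("<I", big_endian_bytes)[0]

print(correct)  # 135
print(wrong)    # 2264924160
```

Nothing errors out in the wrong case: you simply get a plausible-looking but meaningless number, which is why a mismatched byte order can go unnoticed until the matrix looks like gibberish.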

When you read multi-byte numbers into memory with the likes of readBin() in R, you can specify the byte order as a function argument (endian), as per the $BYTEORD keyword in the FCS file.
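In Python, the standard `struct` module plays the role of `readBin()`: a `<` or `>` prefix on the format string selects little- or big-endian (in R, the equivalent is `endian = "little"` or `endian = "big"`). Below is a minimal sketch that maps the two `$BYTEORD` values above to that prefix and decodes a DATA segment into one row per event. It assumes `$DATATYPE/F/` (32-bit floats) and list-mode data, and the function names are hypothetical.

```python
import struct


def byteord_to_prefix(byteord):
    """Map an FCS $BYTEORD value to a struct byte-order prefix."""
    order = byteord.strip()
    if order == "1,2,3,4":
        return "<"  # little-endian
    if order == "4,3,2,1":
        return ">"  # big-endian
    raise ValueError(f"Unsupported $BYTEORD value: {byteord!r}")


def decode_float_data(data, n_params, byteord):
    """Decode a $DATATYPE/F/ DATA segment into rows of n_params floats."""
    prefix = byteord_to_prefix(byteord)
    n_values = len(data) // 4  # 4 bytes per 32-bit float
    values = struct.unpack(f"{prefix}{n_values}f", data[: n_values * 4])
    # List mode: each event's parameters are stored consecutively.
    return [list(values[i:i + n_params]) for i in range(0, n_values, n_params)]


# Two events x two parameters, written big-endian.
raw = struct.pack(">4f", 1.0, 2.0, 3.0, 4.0)
print(decode_float_data(raw, n_params=2, byteord="4,3,2,1"))
# [[1.0, 2.0], [3.0, 4.0]]
print(decode_float_data(raw, n_params=2, byteord="1,2,3,4"))
# gibberish: the same bytes decoded with the wrong byte order
```

Raising an error on an unrecognized `$BYTEORD` is a deliberate choice: guessing a byte order silently is exactly the failure mode described above.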

I made a tutorial a few years ago, which is where I learned about this topic (link in the comments below). If you follow it, you can convert an FCS file into a data matrix without any software packages.
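For a sense of what the package-free route involves, here is a minimal Python sketch of the steps before decoding: reading the fixed-width HEADER offsets, splitting the TEXT section into keywords, and slicing out the DATA bytes. It follows the FCS 3.x layout (version string in bytes 0 to 5, then six 8-byte ASCII offsets starting at byte 10) but skips details such as escaped (doubled) delimiters inside values. Treat it as illustrative, not as a replacement for flowCore; the function name and the toy file are hypothetical.

```python
import struct


def parse_fcs(raw):
    """Return (version, keywords, data_bytes) from raw FCS file bytes."""
    version = raw[0:6].decode("ascii")
    # Six right-justified ASCII offsets: TEXT, DATA, ANALYSIS (start, end).
    offsets = [int(raw[i:i + 8].decode("ascii").strip() or 0)
               for i in range(10, 58, 8)]
    text_start, text_end, data_start, data_end = offsets[:4]

    # TEXT: the first byte is the delimiter; keys and values alternate.
    text = raw[text_start:text_end + 1].decode("latin-1")
    delim = text[0]
    tokens = text[1:].split(delim)
    if tokens and tokens[-1] == "":
        tokens.pop()  # the trailing delimiter leaves an empty token
    keywords = {tokens[i].upper(): tokens[i + 1]
                for i in range(0, len(tokens) - 1, 2)}

    # Large files may store DATA offsets only in TEXT ($BEGINDATA/$ENDDATA).
    if data_start == 0 and data_end == 0:
        data_start = int(keywords["$BEGINDATA"])
        data_end = int(keywords["$ENDDATA"])
    return version, keywords, raw[data_start:data_end + 1]


# Build a tiny toy file in memory: 2 events x 2 float parameters, big-endian.
text = b"/$BYTEORD/4,3,2,1/$DATATYPE/F/$PAR/2/$TOT/2/"
data = struct.pack(">4f", 1.0, 2.0, 3.0, 4.0)
text_start = 58
text_end = text_start + len(text) - 1
data_start, data_end = text_end + 1, text_end + len(data)
offsets = (text_start, text_end, data_start, data_end, 0, 0)
header = b"FCS3.0    " + b"".join(f"{o:>8}".encode("ascii") for o in offsets)
raw_file = header + text + data

version, keywords, data_bytes = parse_fcs(raw_file)
print(version, keywords["$BYTEORD"], keywords["$PAR"], len(data_bytes))
# FCS3.0 4,3,2,1 2 16
```

From here, `$BYTEORD`, `$DATATYPE`, and `$PAR` tell you how to turn `data_bytes` into the event-by-parameter matrix.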

The image associated with this post summarizes the binary reading step, where you select endianness, and what it looks like if you select the wrong one.

Why does this matter? Because at least in R, flowCore::read.FCS() is a bit of a choke point. It's incredibly useful and I'm grateful on behalf of the community to have it. But it's also a single point of failure. So it would behoove you to know how to read in your FCS files independently, just in case something were to happen to it or its dependencies (and the Python programmers among you will know what I'm talking about).

I'm revisiting this now due to a low-level project I'm currently working on, but I think that any bioinformatician should know their craft one level of abstraction deeper than what is required of them. It pays dividends long term.

Leaders, look for these choke points in your workflows and prepare for the worst, just in case.

Thank you, and I hope you all have a great day.

Louvain versus Leiden cluster stability in Seurat

You may have noticed the emergence of Leiden clustering (as opposed to Louvain clustering) a few years ago in single-cell analysis. If you are using Seurat, be careful if you decide to transition…

Louvain and Leiden clustering are both community detection algorithms that run on (in Seurat's particular case) a shared nearest neighbor graph of your data. The Leiden algorithm, introduced in 2019, builds on Louvain with additional refinements. In theory, making the switch could improve your clustering schemes.
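To make "shared nearest neighbor graph" concrete: each cell's k nearest neighbors are found, two cells are connected with a weight equal to the overlap (Jaccard index) of their neighbor sets, and weak edges are pruned. Louvain or Leiden then runs on that weighted graph. The pure-Python sketch below illustrates the idea on toy 2-D points. It is in the spirit of Seurat's `FindNeighbors()`, whose `k.param` and `prune.SNN` arguments control k and the pruning cutoff, but it is not Seurat's implementation, and the function names are hypothetical. The brute-force neighbor search is only suitable for toy sizes.

```python
import math


def knn_sets(points, k):
    """Each point's k nearest neighbors (including itself), brute force."""
    neighbors = []
    for p in points:
        order = sorted(range(len(points)), key=lambda j: math.dist(p, points[j]))
        neighbors.append(set(order[:k]))
    return neighbors


def snn_graph(points, k, prune=1 / 15):
    """Weighted SNN edges {(i, j): jaccard}, keeping only edges above `prune`."""
    neighbors = knn_sets(points, k)
    edges = {}
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            shared = len(neighbors[i] & neighbors[j])
            if shared == 0:
                continue
            jaccard = shared / len(neighbors[i] | neighbors[j])
            if jaccard > prune:  # drop weak edges
                edges[(i, j)] = jaccard
    return edges


# Two well-separated toy "populations" of three cells each.
cells = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
graph = snn_graph(cells, k=3)
print(sorted(graph))
# [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]
```

Because both algorithms only ever see this graph, changing k changes what they can find, separately from the resolution setting.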

But in practice…

When I switched from Seurat's implementation of Louvain to Leiden, using the default settings of the classic Guided Clustering Tutorial on the PBMC 3k dataset, Leiden did not detect the platelets across 20 random seeds, while Louvain detected them easily.

This suggested that the given clustering parameters worked for Louvain, but needed to be re-tweaked for Leiden.

The image below shows this initial output, with cluster centroids visualized in a gif across the 20 runs, a visual way I developed to assess cluster stability (hence the jiggling of the yellow dots).
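The centroid view boils down to computing, for each run, the mean embedding coordinate of every cluster, then plotting those points frame by frame. Here is a minimal sketch of that computation, assuming 2-D embedding coordinates and one label vector per seed. The data and names are hypothetical, and the plotting and gif steps are left out.

```python
from collections import defaultdict


def cluster_centroids(embedding, labels):
    """Mean (x, y) embedding coordinate for each cluster label."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x, y), label in zip(embedding, labels):
        sums[label][0] += x
        sums[label][1] += y
        counts[label] += 1
    return {label: (sums[label][0] / counts[label], sums[label][1] / counts[label])
            for label in sums}


# Same embedding, clustered under two seeds (toy labels).
umap_coords = [(0.0, 0.0), (2.0, 0.0), (10.0, 10.0), (12.0, 10.0)]
runs = {
    1: [0, 0, 1, 1],
    2: [0, 0, 1, 2],  # an extra cluster "flickers in" under this seed
}
frames = {seed: cluster_centroids(umap_coords, labels) for seed, labels in runs.items()}
for seed, centroids in frames.items():
    print(seed, len(centroids), centroids)
```

Cluster IDs are not matched across runs here. Tracking centroid positions rather than IDs sidesteps that matching problem: a stable cluster shows up as a dot that stays put, while an unstable one splits, merges, or jumps between frames.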

Accordingly, when I increased the resolution, Leiden detected the platelets, but the cluster stability got worse in the T cells.

Lowering the number of nearest neighbors from the default 20 to 10 also got Leiden to detect the platelets with decent stability, but I also got an extra cluster in the CD4 T compartment, similar to the one that "flickers" in on the left panel below. Note that the extra cluster is not a bug, just the result of that particular setting, which may well be a defensible subset.

So there is still a bit of tweaking left to do on my end. And I'd expect you'll have to do the same on your end.

In short, if you switch from Louvain to Leiden specifically in Seurat, don't assume that you can use the same default parameters for both.

In contrast, Scanpy's clustering is Leiden-centric, so its defaults may work better with Leiden than with Louvain.

And more generally speaking, never assume the default parameters are the right ones, for whatever you're doing!

Thank you and I hope you all have a great day.

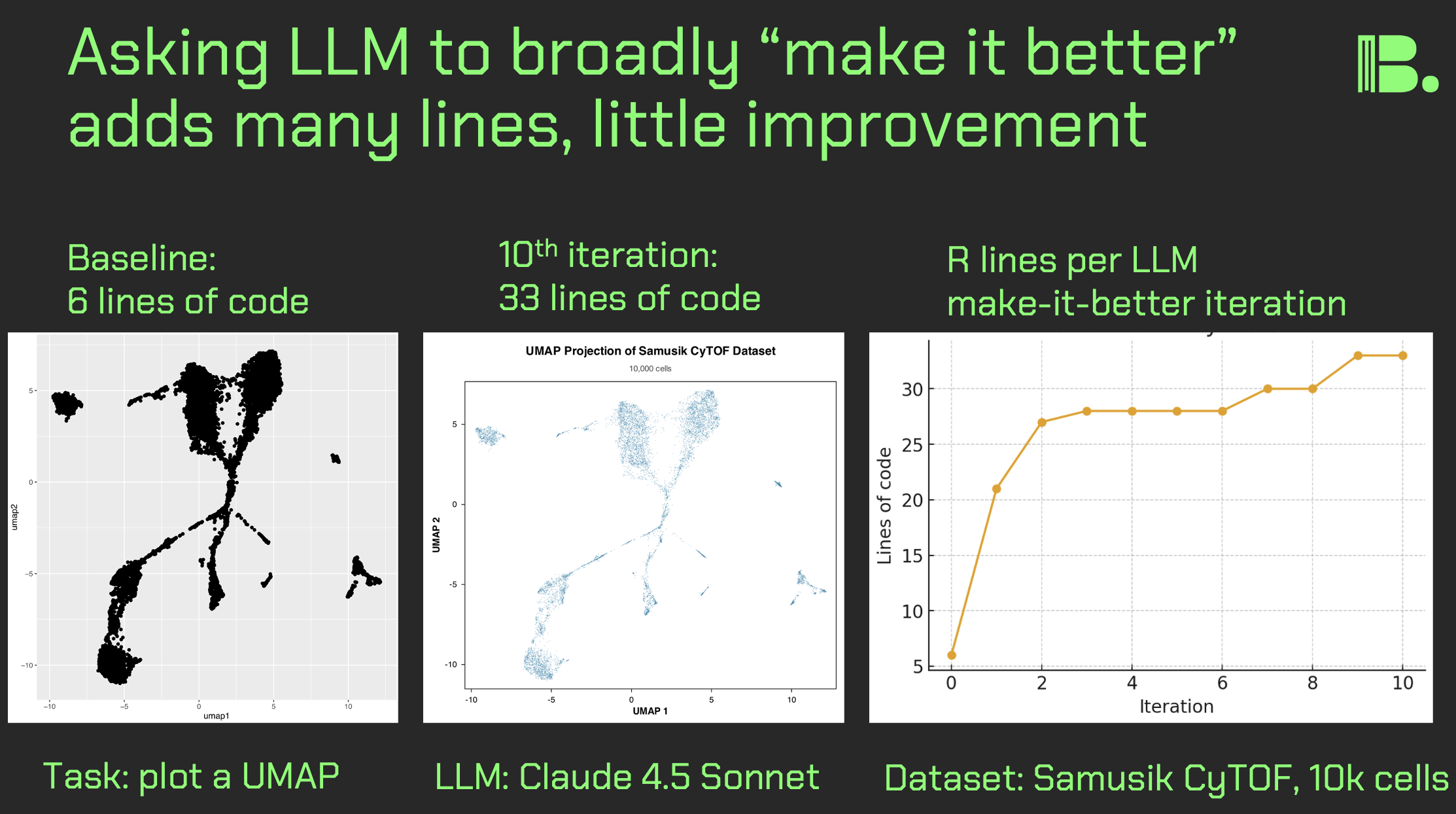

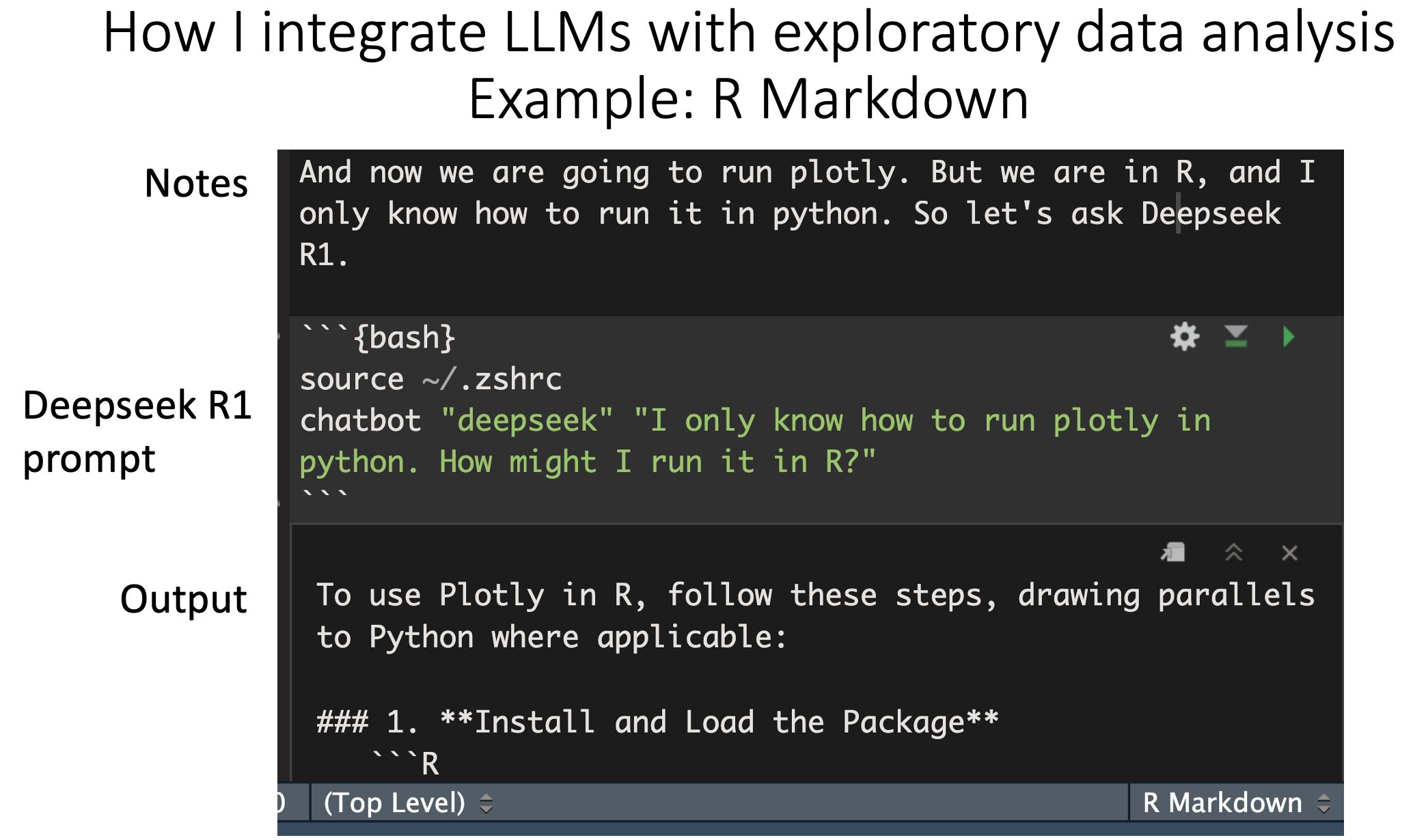

LLM make-it-better experiment for UMAP plotting

Can you use LLMs to create self-refactoring code? Here, I broadly asked an LLM to take six lines of R code plotting a UMAP and iteratively "make it better." What I found was that the number of lines of code goes way up, but the plot itself does not change much.

Going in, I hypothesized that at least density would be added. Instead, only simple aesthetics (the points, background, and labeling) changed, and the dots got much smaller.