Learning how to code improved how I think

The knowledge of which geometry aims is the knowledge of the eternal.

Plato, The Republic, Book VII, 52.

The purpose of computing is insight, not numbers.

Richard Hamming, Numerical Methods for Scientists and Engineers

Computer science improved my thinking and my focus

I have always been intellectually minded and curious. But one challenge I faced was that I was a classic ADHD kid who refused to take medication. I was easily distracted, and often had racing thoughts that were all over the place. When I found computer science in my late 20s, I discovered that the act of coding had a calming effect on my brain. I could focus on one thing for several hours. Furthermore, when I applied the computational lens to the rest of my life, that calming effect would remain. I attribute a good chunk of my success at the end of grad school, and in the years after graduation, to computer science. Those years included starting a business that I still run at the time of writing.

In short, aside from the practical skills that computer science gave me, it helped me organize my thoughts and actions. It gave me a framework from which I could reason and do things in a calm and focused way. I am not alone in this discovery. There is a whole discipline called computational thinking that aims, among other things, to teach people, especially kids, the fundamental concepts and practices of computer science. I think computational thinking is something that everyone should get exposure to. Why does this matter now? Because it could very well be that in the next few years, generative AI renders human-based coding obsolete at the practical level. Even if that is the case, I will continue coding and teaching people how to code. Why? Because learning how to code improved how I think.

Coding involves the scientific method, done really fast

We all learned the scientific method in school.

1. Ask a question

2. Do some research as to what is known already

3. Form a hypothesis

4. Run an experiment to test the hypothesis

5. Analyze the data

6. Form a conclusion

7. For each follow-up question, go to step 1.

The conclusion leads to follow-up questions and you're at the top of the loop again. Importantly, in my experience, my hypothesis is partially or fully wrong quite often. Each time I'm wrong, my intuition around how the world works improves. Furthermore, I get a chance to do a mental stack trace to see if I can locate the fallacies in my thinking that led to the wrong hypothesis. Sometimes, it's simply that biology is complicated. Often, I hadn't read up enough on Gene X, or some sort of cognitive bias (wishful thinking, because I wanted the high-end publication) clouded my judgment. In this regard, through repeated experiments where I put my hypothesis on the line, I become a better thinker.

Now, in my little corner of biology, step 4 and step 5 would both take anywhere from days to months. If you're developing a mouse model from scratch, one experiment could take years. If one of your goals is to become better at thinking, then this can really slow you down.

When I started pursuing computer science halfway through graduate school, I was surprised at how much this process sped up. When I was debugging code, I would run experiments at a rate of one or more per minute, often for several hours. Again, each experiment where I was wrong, each error message, gave me the opportunity to become a better thinker. I learned early on to love the error message. After all, you spend a huge chunk of your time, upwards of half of it, debugging code.

There is a level above the rapid-fire experiments that improve your thinking via the scientific method. At this higher level, you're not trying to understand billions of years of evolution. You're trying to understand code that you wrote. You converted your logical thoughts into computer language, and then the computer gave you an error message. Your mental model of how this was going to work was wrong. So when you find the bug, you have located an error in your thinking. Then you learn from it. Then you become a better thinker.
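To make this concrete, here is a tiny experiment of that kind in Python. It's an illustrative sketch, not something from my own work: the hypothesis is that the built-in round() always rounds a .5 upward, the way many of us learned in school. Running it shows the hypothesis is wrong, because Python 3 rounds exact ties to the nearest even number. The bug lives in the mental model, not in the interpreter.

```python
# Hypothesis: round() always rounds .5 upward, like I learned in school.
predictions = {0.5: 1, 1.5: 2, 2.5: 3, 3.5: 4}

# Experiment: run round() on each input and keep only the surprises.
surprises = {
    x: round(x)
    for x, expected in predictions.items()
    if round(x) != expected
}

# Analysis: every entry here is a place where my mental model was wrong.
print(surprises)  # {0.5: 0, 2.5: 2}
```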

Computer science makes explicit important concepts that are hard to put in words

Here, I will give you a flavor of various concepts I came across when learning how to code that I had seen or known before in some form, but never stated as clearly as code stated them. In other words, computer science gave me a language through which I could reason about concepts that would otherwise be difficult to talk about. The examples below are by no means exhaustive. They are just meant to show you things I came across in my journey that I found to be somewhere between really cool and really relevant to the types of things I'm working on.

Emergence and cellular automata

The concept of emergent complexity, or emergence, which any biologist or chemist will find intuitive, can be examined in a raw form with computer science using (among other things) cellular automata. Cellular automata are models of computation in which you take a simple set of rules and run them repeatedly, which produces emergent complexity. Some cellular automata produce far more elaborate behavior than others despite having rule sets of similar complexity. Want an example? How about Conway's Game of Life. Take a grid. Each square on the grid can be either live or dead, and a square's neighbors are the eight squares touching it. At each step, apply these rules to every square at the same time:

- Any live cell with fewer than two live neighbors dies.

- Any live cell with two or three live neighbors lives on.

- Any live cell with more than three live neighbors dies.

- Any dead cell with exactly three live neighbors becomes a live cell.
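The four rules above fit in a few lines of code. What follows is a minimal Python sketch, assuming an unbounded grid where only the coordinates of live cells are stored, as a set of (row, col) tuples; the function name step and that representation are my own choices for illustration, not a standard API.

```python
from collections import Counter


def step(live):
    """Advance one generation. `live` is a set of (row, col) live cells."""
    # For every cell next to at least one live cell, count its live neighbors.
    neighbor_counts = Counter(
        (row + dr, col + dc)
        for row, col in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Birth: a dead cell with exactly three live neighbors comes alive.
    # Survival: a live cell with two or three live neighbors stays alive.
    # Everything else dies or stays dead (a live cell with zero live
    # neighbors never appears in neighbor_counts, so it is dropped too).
    return {
        cell
        for cell, n in neighbor_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# A "blinker": three cells in a row that flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(step(blinker)))  # [(0, 1), (1, 1), (2, 1)]
```

Calling step over and over is all it takes to "run" the Game of Life. For example, the standard glider pattern {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)} reappears one row down and one column to the right after four calls.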

That's it. Run it and you can get incredible patterns. If you're curious, have a look here in the Life Wiki at the various patterns that have been found. Running Conway's Game of Life is the computational equivalent of looking at a drop of pond water under the microscope. Included in these patterns is a Turing machine, a common model of a computer used in theoretical computer science. The picture of a Turing machine below, implemented in Conway's Game of Life, is from the corresponding page on the Life Wiki.

In other words, Conway's Game of Life is Turing complete, meaning that any form of computation that exists, from logic gates to Tetris to ChatGPT, is theoretically implementable using only Conway's Game of Life patterns (inefficient, but possible).

I first came across Conway's Game of Life when I was 16, and it had a sort of universe-ness that was totally maxed out. It was the first time I could conceive of our universe being made up of something like this at the very bottom. Even if it wasn't, I got the idea in my head that emergent complexity (which is perhaps the -ness that is being maxed out here) could give rise to far more than I had ever thought.

It wasn't until I was 28 and was learning how to code that I had this feeling again, and I knew I was going to pursue it for as long as I possibly could.

Levels of abstraction

Email is a wonderful thing for people whose role in life is to be on top of things. But not for me; my role is to be on the bottom of things.

Donald Knuth, from Email (let's drop the hyphen)

My first computer science class was in Java. My second one was in C++. These are lower-level languages compared to R and Python, the two languages that I use these days. It was through programming that I really solidified the concept of levels of analysis, or levels of abstraction. We all have a general idea of what it is, like the xkcd comic here: psychology is just applied biology, which is just applied chemistry, which is just applied physics, and so on. I'll add to that: as a biologist, I've found that the best biologists I know are actually chemists in disguise.

In terms of computer science, we have what are broadly called low-level languages and high-level languages. These terms are relative to your programming language of choice. If we look at the printing of "hello world" in Python for example, it looks like this:

```python
print("hello world")
```

That's it. A single command. You run it, and you get "hello world" on the console. But a ton of stuff happens under the surface. To give you an idea of what that looks like, let's go one level down, to C.

```c
#include <stdio.h>

int main() {
    printf("hello world");
    return 0;
}
```

So here, we need to include a library header (stdio.h) that gives us input/output functionality, including the function printf. It's not just built into the language. We have to end each statement with a semicolon. We have int main(), the main function that gets called when the program runs. We're also declaring the type of thing that the function returns, in this case an integer.

This brings us to the point that in C (and many other languages) you have to declare the type of every variable you use. So if you have a variable x you want to set to 5, you have to write int x = 5, whereas in Python you'd write x = 5. And you need a statement saying what the function returns: in this case 0, which by convention tells the operating system that the program finished successfully. So you're also explicitly telling the computer that the program is done and that it went fine. There's a lot more you have to keep track of. And if you're just trying to analyze some data, it's way more convenient for the computer to sweep all of this under the rug.

There's a whole other piece here that I'm only going to touch on for the sake of brevity: while R and Python are interactive, meaning you can type things in and they run immediately, C and other lower-level languages are typically compiled. Rather than programming interactively, you have to compile your code first, converting it into the binary machine code that the computer's hardware understands. This requires a compiler, which turns your C file into an executable binary file, which the computer then runs, and only then do you get "hello world."

But this is just the top of the rabbit hole. If you really want to know what's going on, let's look at an even lower-level language: Assembly. This is the language underneath C and everything else (save machine code itself). If you code in Python, then C is a lower-level language. If you code in Assembly (which is very rare these days), then C is a higher-level language. So I'm going to give you the Assembly code for printing out hello world on the ARM64 chip, which my current computer runs. This is the first point: when you're coding in Assembly, you're dealing with a different language for each chip architecture. Now, there's a lot going on below, so if you want a better explanation from someone who actually codes in Assembly, please watch this video by Chris Hay, who gets credit for the code and the explanation below.

```asm
// hello world
.global _start
.align 2

// main
_start:
    b _printf
    b _terminate

_printf:
    mov X0, #1              // stdout
    adr X1, helloworld      // address of hello world string
    mov X2, #12             // length of hello world
    mov X16, #4             // write to stdout
    svc 0                   // syscall

_terminate:
    mov X0, #0              // return 0
    mov X16, #1             // terminate
    svc 0                   // syscall

// Hello world string
helloworld: .ascii "hello world\n"
```

Ok, so what is going on here? Now we're giving direct, low-level commands to the processor. Let's focus on what's going on underneath my comment "// main." Without going into a larger discussion of computer architecture, we'll summarize the procedure. You are in no way supposed to fully get what's going on here. You're just supposed to understand that a lot happens under the hood. With that in mind, read on.

We first have to prepare the computer to output "hello world." In the _printf section, we put the file descriptor for the output stream (stdout, which is 1) in the register (a small storage slot inside the CPU) X0. Then we compute the memory address of our string, which we're labeling "helloworld," and store that address (not the string itself, just the place in memory that holds it) in register X1. Then we tell the computer the length of our string (count the characters, including the space, plus the newline character), which is 12 characters, or 12 bytes, and store that in register X2. In X16, we place the number of the system call we want, which here is 4, the "write" call. Then we call svc 0, which hands control to the operating system and asks it to perform that write.

Then, we have to tell the computer to terminate the program, which is the _terminate section that we define. The equivalent of return 0 from C is moving the exit status 0 into register X0. This signals that the program executed successfully. Then we move the system call number for exit (1) into X16, where we were previously holding the number for write. Then we call svc 0 again, which asks the operating system to end the program now that "hello world" has been displayed.

Then, as with C, there's a song and dance (assembling and linking) that converts these instructions into binary machine code that the computer can run, and then it can actually output "hello world." And then we're done.
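If you want to poke at that same system call without writing Assembly, Python's standard library exposes a thin wrapper around it. The sketch below, assuming a Unix-like system where file descriptor 1 is stdout, hands the same 12 bytes to the operating system's write call via os.write, mirroring what the Assembly puts in X0, X1, and X2.

```python
import os

# File descriptor 1 is stdout, the same value the Assembly puts in X0.
message = b"hello world\n"  # 12 bytes, the same length the Assembly puts in X2

# os.write wraps the operating system's write call and
# returns the number of bytes actually written.
written = os.write(1, message)
```

Unlike print, os.write bypasses Python's own output buffering, so if you mix the two in one script the lines can appear out of order.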

So I'm going to paste the Python code from above again to remind you of the sheer volume of things that are swept under the rug:

```python
print("hello world")
```
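And Python isn't the bottom either. As a rough illustration, the standard library's dis module shows the bytecode that CPython (the usual Python interpreter) compiles a function into before its virtual machine runs it. The function name say_hello is just for illustration, and the exact instructions printed differ between Python versions.

```python
import dis


def say_hello():
    print("hello world")


# Print the bytecode CPython generated for say_hello, one instruction per line.
dis.dis(say_hello)

# The same instructions as objects, e.g. to see which names and constants they use.
arguments = [ins.argval for ins in dis.get_instructions(say_hello)]
```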

These are the levels of abstraction. We started with a discussion of these levels of abstraction from psychology to physics. Then we moved to the equivalent in computer science. What you learn in computer science in real time is that understanding what's going on at least one level above and below what you're doing makes you a much better programmer.

What do I mean by that? If I run into a bug in Python or R, the issue could very well be a lower-level issue, the same way that treating disease has you working with chemistry (e.g., small-molecule drug development) to treat a problem in biology. In other words, solving hard problems involves seamlessly moving up and down levels of abstraction in whatever your domain is. So you'd better be well-versed in the levels of abstraction above and below what you're doing.

Hacking

Given our discussion on levels of abstraction, quite a lot of so-called hacking (both security hacking and innovation) works by means of understanding things one or more levels underneath what you're doing. A much larger discussion of this can be found from this amazing article written by Gwern that I've read many times (and have since written about). But let me paste the punchline, as food for thought:

In each case, the fundamental principle is that the hacker asks: “here I have a system W, which pretends to be made out of a few Xs; however, it is really made out of many Y, which form an entirely different system, Z; I will now proceed to ignore the X and understand how Z works, so I may use the Y to thereby change W however I like”.

In other words, the hacker looks at a thing, and realizes that the thing is merely an abstraction made out of atoms or bits or whatever other low-level object, and it's just a matter of moving those bits/atoms around in a particular way, and they get what they want. I'll paste another bit from Gwern's article to really solidify this.

In hacking, a computer pretends to be made out of things like ‘buffers’ and ‘lists’ and ‘objects’ with rich meaningful semantics, but really, it’s just made out of bits which mean nothing and only accidentally can be interpreted as things like ‘web browsers’ or ‘passwords’, and if you move some bits around and rewrite these other bits in a particular order and read one string of bits in a different way, now you have bypassed the password.
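You can get a small taste of "bits which mean nothing" with Python's standard struct module. This isn't hacking, just an illustration: the sketch below packs the number 1.0 into four bytes as a 32-bit IEEE 754 float, then reads those exact same bytes back as an unsigned integer. Nothing about the bytes changes; only the interpretation does.

```python
import struct

# Pack 1.0 as a little-endian 32-bit float: four raw bytes.
raw = struct.pack("<f", 1.0)

# Read the very same four bytes back as a little-endian unsigned 32-bit integer.
as_int = struct.unpack("<I", raw)[0]

print(raw.hex())  # 0000803f
print(as_int)     # 1065353216
```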

There is one more insight here that I have to remind myself of over and over: in a competitive activity, you have to both be excellent at the thing (aka do the work) and know the hacks. Speedrunning, a video-gaming hobby in which you try to beat a game as fast as possible, exemplifies this. Here, you can't just do a hack and call it a day (everyone is looking for the "hack" these days). From Gwern:

In speed running (particularly TASes), a video game pretends to be made out of things like ‘walls’ and ‘speed limits’ and ‘levels which must be completed in a particular order’, but it’s really again just made out of bits and memory locations, and messing with them in particular ways, such as deliberately overloading the RAM to cause memory allocation errors, can give you infinite ‘velocity’ or shift you into alternate coordinate systems in the true physics, allowing enormous movements in the supposed map, giving shortcuts to the ‘end’ of the game.

To get a feel for this, have a look at this history of Mario Wonder speedrunning (which includes info about speed runs in other video games). Someone learns some exploit that the game designers did not anticipate, then everyone is doing that exploit with maximal skill with the character, and then someone learns a new exploit, and the cycle continues. So you have to know both the hacks (be able to operate at lower levels) and have maximum talent (be able to operate at higher levels). Put differently, a biologist needs to know chemistry, but also needs to be a really good biologist.

Taken together, in terms of being a better thinker, it's good to know how things work at least one level below whatever you're doing. I'm not the first to say this by any means. If you're a biologist, be at least familiar with, if not competent in, chemistry. If you're a Python programmer, be at least familiar with, if not competent in, C. Broadly, learn how things work (which is really just another way of saying: look at a thing from a level of abstraction below wherever you're at).

Coding really solidifies this concept and teaches you what it feels like to think at a high level (program in python) versus to think at a low level (program in C or Assembly), and the value of both. Again, I primarily use R and python, but being familiar with the lower level languages too, and the thinking habits they have taught me, has paid off many times over.

Recursion

Computer science gives us data structures and algorithms that don't come easily to everyday spoken language. One of these concepts is recursion. In recursion, you define a function that calls itself inside its own definition. Ok, that's a mouthful. Let's try again. What is recursion?

```python
def factorial(x):
    if x < 2:
        return 1
    else:
        return x * factorial(x - 1)
```

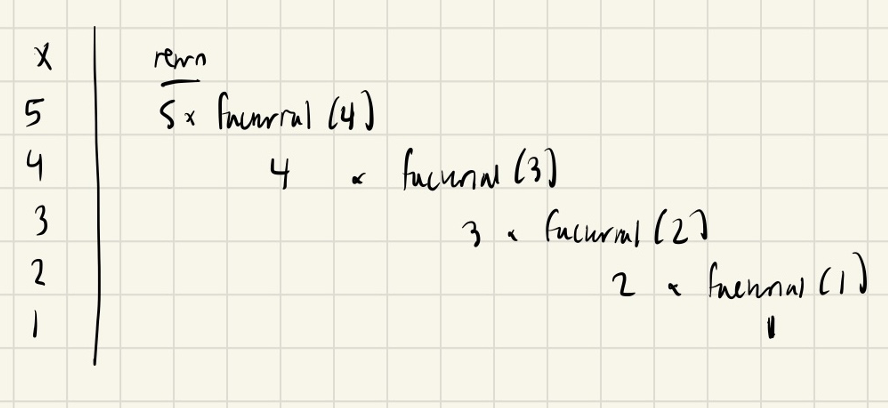

Still a bit mind-bending if you've never seen this before. If this is new to you, get out some paper and draw out the procedure for factorial(5), treating the above as a recipe. Try drawing it out before you look at my sketch below. Ok, now here is my sketch:

Want another example? Just do a Google search on recursion, and they give you the following joke:

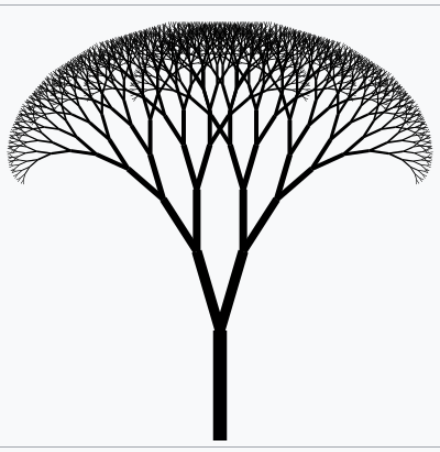

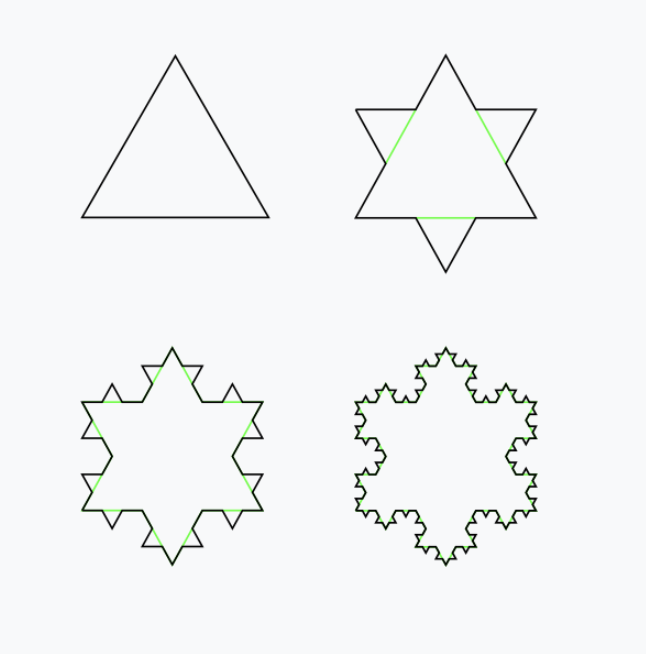
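If you'd like the computer to draw the recursion for you, here is a variant of the factorial function above that prints each call as it happens. The name traced_factorial and the depth parameter are my own additions for illustration; the indentation grows with each nested call and shrinks again as each call returns its result.

```python
def traced_factorial(x, depth=0):
    indent = "    " * depth  # deeper calls are indented further
    print(f"{indent}traced_factorial({x}) called")
    if x < 2:
        print(f"{indent}base case reached, returning 1")
        return 1
    result = x * traced_factorial(x - 1, depth + 1)
    print(f"{indent}traced_factorial({x}) returns {result}")
    return result


traced_factorial(5)
```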

But where does this mind-bending concept show up in the world? All over the place. One example we have all seen is fractals. A lot of fractals involve making a shape, like a line that bifurcates (forms two branches), and then applying a rule that says: at the tip of each branch, bifurcate again. And at the tip of those branches, bifurcate again. So you get something like this image, from the Wikipedia article on "fractal canopy" (image by Claudio Rocchini, licensed under CC BY-SA 3.0):

And where do we see this in the real world? How about snowflakes? One way to model a snowflake is to start with an equilateral triangle, and then on the middle third of each side, build a smaller equilateral triangle. Then on the middle third of each of those new sides, build an even smaller equilateral triangle, and so on. This is called a Koch snowflake, and this image from the linked Wikipedia article gives you a feel for how it works (image by Wxs, licensed under CC BY-SA 3.0):

In biology, there are examples of recursion that show up in the strangest places, like romanesco broccoli. I saw this for the first time at my college dorm cafeteria my sophomore year, before I knew anything about recursion, and I was transfixed because I knew there was something special going on here that I couldn't quite put into words. Now the concept of recursion allows me to put it into words, just as so much of computational thinking has given words to things I couldn't otherwise make explicit. From the linked Wikipedia article above, here is what I saw in Stanford's Wilbur Hall cafeteria (image by Ivar Leidus, licensed under CC BY-SA 4.0):

You can see a pattern in which, at each point, a smaller instance of the same pattern is being constructed. The pattern is defined within the pattern, just like in the factorial code example from earlier, where the function is called within its own definition.
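The Koch snowflake translates directly into a recursive function, the same way factorial did. The sketch below is one way to do it, assuming a starting triangle with side length 1 and using my own function names: to draw a segment at some depth, the function splits it into thirds, raises an equilateral bump on the middle third, and then calls itself on each of the four resulting pieces with the depth reduced by one. Depth 0 is the base case, just as x < 2 was for factorial.

```python
import math


def koch_segment(p, q, depth):
    """Points along a Koch curve from p to q (q itself is left out)."""
    if depth == 0:
        return [p]  # base case: a plain straight segment
    (x1, y1), (x2, y2) = p, q
    dx, dy = (x2 - x1) / 3, (y2 - y1) / 3
    a = (x1 + dx, y1 + dy)          # one third of the way along
    b = (x1 + 2 * dx, y1 + 2 * dy)  # two thirds of the way along
    # Apex of the bump: rotate the middle third by -60 degrees so that,
    # for a counterclockwise triangle, the bump points outward.
    theta = math.radians(-60)
    peak = (
        a[0] + dx * math.cos(theta) - dy * math.sin(theta),
        a[1] + dx * math.sin(theta) + dy * math.cos(theta),
    )
    points = []
    for start, end in [(p, a), (a, peak), (peak, b), (b, q)]:
        points += koch_segment(start, end, depth - 1)  # the pattern within the pattern
    return points


def koch_snowflake(depth):
    """Closed snowflake outline built on an equilateral triangle of side 1."""
    corners = [(0.0, 0.0), (1.0, 0.0), (0.5, math.sqrt(3) / 2)]
    points = []
    for i in range(3):
        points += koch_segment(corners[i], corners[(i + 1) % 3], depth)
    return points
```

Each extra level multiplies the number of segments by four, so koch_snowflake(n) returns 3 * 4**n points. Handing those points to any plotting library draws the snowflake.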

There's a fantastic book called Gödel, Escher, Bach by Douglas Hofstadter. A key theme in the book is recursion [1]: things that talk about themselves. He uses this self-reference to explain Gödel's Incompleteness Theorems. This is a rabbit hole worth another article or several, but in a nutshell, he shows that formal systems (e.g., math, language) run into trouble once you get them to talk about themselves. In the case of arithmetic, Gödel showed that there are true statements the system cannot prove.

Want an example? Evaluate the truth of:

The following sentence is true.

The preceding sentence is false.

If the second sentence is true, then the first sentence is false, but the first sentence says that the second sentence is true, which would in turn make the second sentence false, but if the second sentence is false then the first sentence is true. Um…what? Anyway, this is a small but important sliver of the context around Gödel's Incompleteness Theorems, one of the most important contributions to mathematics in human history.
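This is also a place where the computational lens helps. Treat each sentence as a variable that is either True or False, write down what each sentence claims as a condition, and let the computer try every possible combination. The small Python sketch below does exactly that; the variable names are mine.

```python
from itertools import product

# S1: "The following sentence is true."  S1 is true exactly when S2 is true.
# S2: "The preceding sentence is false." S2 is true exactly when S1 is false.
consistent = [
    (s1, s2)
    for s1, s2 in product([True, False], repeat=2)
    if s1 == s2 and s2 == (not s1)
]

print(consistent)  # []
```

The list comes back empty: no assignment of truth values satisfies both sentences at once, which is the loop described above in a form a computer can check.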

If all of this seems a bit mind bending, it is because it is. I first came across this when I attempted to read Gödel, Escher, Bach for the first time when I was a teenager. Most of it went over my head, but this sentence pair above stuck around in my head for decades.

It wasn't until I reread the book, after I had taken my computer science courses and was coding daily for work, that I could finally wrap my head around these things. The concepts of self-reference in formal systems and of recursion are hard to grasp, but they run very deep, show up across many domains of study, and are absolutely worth learning. How do you learn them? By a combination of looking at examples and, importantly, learning how to code and solving problems that require you to write recursive programs.

If you don't get this stuff right away, don't worry about it. Look at the images in this section, and let them sink in for a few days or months. Even when I was actively learning and focusing on recursion (in my second computer science class, CS106B), it still took me a month or two before the concept really sank in. And that's ok.

Graphs

Ok, how about a practical example for biologists. What is a cell signaling pathway? Well, to massively oversimplify, you have messages being passed from protein to protein all the way down to the DNA, where some sort of effector (e.g., a transcription factor) does a thing to the DNA. What if you wanted to model that? How would you do it? Well, in computer science (and discrete math) there is a data structure called a graph that lets you wire up a pathway in silico. This is a graph in the sense of a mathematical abstraction of a network, not to be confused with a biaxial plot.

Here's what the graph representation of a piece of a pathway looks like in base python, using a dictionary (again, confusing wording…it's a look-up table):

```python
# Each key is a protein; its value lists the proteins it signals to.
graph = {
    'RAS': ['RAF'],
    'RAF': ['MEK'],
    'MEK': ['MAPK'],
    'MAPK': ['MNK', 'RSK', 'MYC'],
}
```

So now we've wired one up. What do I get from that? Well, one very fundamental question in graph theory is: what are the "central" regions of a graph? This is called centrality. Degree centrality tells us how many friends (direct connections) each node has. Betweenness centrality tells us which nodes have the most shortest paths between other nodes running through them.
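To make those definitions concrete, here is a dependency-free Python sketch that computes both measures for the toy pathway from the dictionary above, with every value written as a list. It assumes the edges are directed (signal flows from RAS toward MYC) and computes raw, unnormalized betweenness by brute force, which is fine for a handful of nodes but far too slow for a real network. In practice you'd reach for a library such as NetworkX, whose degree_centrality and betweenness_centrality functions also normalize the scores. The helper function names are my own.

```python
from collections import deque
from itertools import permutations

# Toy directed graph: each protein points to the proteins it signals to.
graph = {
    "RAS": ["RAF"],
    "RAF": ["MEK"],
    "MEK": ["MAPK"],
    "MAPK": ["MNK", "RSK", "MYC"],
}


def all_nodes(g):
    """Every node that appears as a source or a target."""
    nodes = set(g)
    for targets in g.values():
        nodes.update(targets)
    return sorted(nodes)


def degree(g):
    """Count edges touching each node (incoming plus outgoing)."""
    counts = {node: 0 for node in all_nodes(g)}
    for source, targets in g.items():
        for target in targets:
            counts[source] += 1
            counts[target] += 1
    return counts


def shortest_paths(g, source, target):
    """Breadth-first search that keeps every shortest path from source to target."""
    found, best_len = [], None
    queue = deque([[source]])
    while queue:
        path = queue.popleft()
        if best_len is not None and len(path) > best_len:
            break  # paths leave the queue shortest-first, so we're done
        if path[-1] == target:
            best_len = len(path)
            found.append(path)
            continue
        for nxt in g.get(path[-1], []):
            if nxt not in path:
                queue.append(path + [nxt])
    return found


def betweenness(g):
    """Unnormalized betweenness: share of shortest paths passing through each node."""
    scores = {node: 0.0 for node in all_nodes(g)}
    for source, target in permutations(all_nodes(g), 2):
        paths = shortest_paths(g, source, target)
        for path in paths:
            for middle in path[1:-1]:
                scores[middle] += 1 / len(paths)
    return scores


print(degree(graph))
print(betweenness(graph))
```

On this little chain, MAPK comes out with the highest degree (4) and the highest betweenness (9.0, versus 8.0 for MEK and 5.0 for RAF), because almost every signal has to pass through it to reach MNK, RSK, or MYC.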

Think of the Bay Bridge from Oakland to San Francisco. Commuters know that, minus traffic, it is the quickest path to San Francisco for a lot of the East Bay and beyond. The Bay Bridge would have a high betweenness centrality. But with this metric you can quantify that and compare it to the San Mateo Bridge to the south.

Such is the same with signaling pathways. Assuming you have a good dataset, you can start interrogating these pathways in terms of regions that are relevant to whatever your intent is. How do I know this? I spent three years doing just this for a client of mine. The use case is simple (though the implementation is complicated): can we find druggable regions of the network that will lead to the change that we want given the intent of the company? It would have been very hard, if not impossible, to do this kind of work without the intuition and use of a graph.

When you start thinking in terms of graphs and using graphs as part of your problem solving toolkit, difficult "systems level" problems in biology, like those around signaling pathways, start to become more actionable.

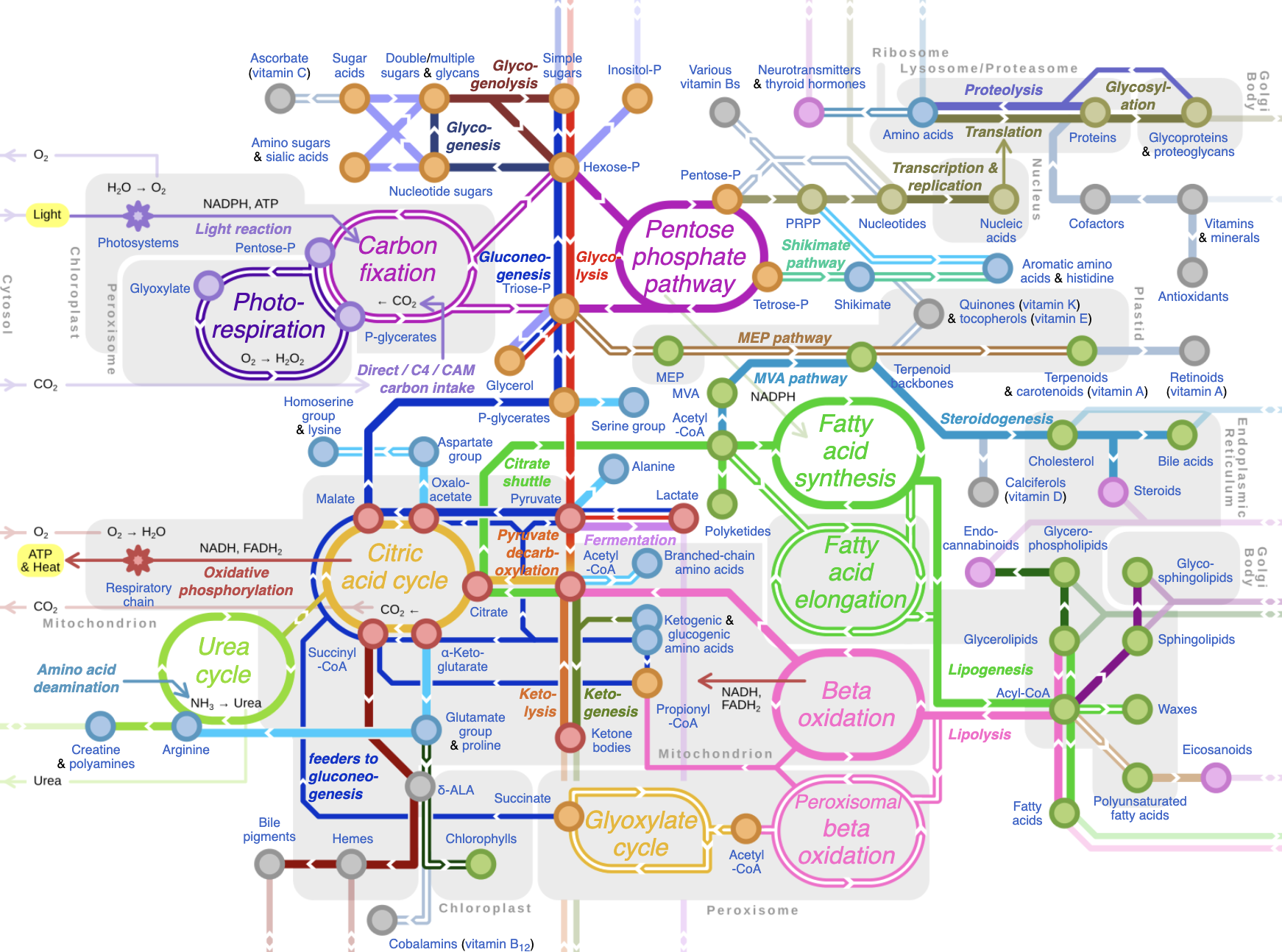

As an example of complicated signaling pathways, anyone who studies biochemistry ultimately comes to the realization that we can't wrap our heads around every little intricate detail of cellular metabolism, aside from perhaps the memorization of the high-level pathways like the Citric Acid Cycle which everyone does in their intro bio classes. But the reality of cellular metabolism looks more this like image below, taken from the Wikipedia article on metabolic pathways (image by Chakazul):

Note that this is not a map of any single pathway, but a map where the nodes are metabolites and the edges are individual pathways. In other words, if you wanted to look at what each metabolite gets converted to in each pathway, this map would get much, much more complicated. So when you get to this level and you want to understand which nodes and connections are more influential, and what happens in theory when something is perturbed, it becomes a problem for computers. And if you are well versed in computational thinking, then this becomes a doable task [2].
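Here's a minimal sketch of the "what happens when something is perturbed" question, using the toy pathway from the dictionary above (with every value written as a list) rather than real metabolic data. It's a plain reachability check, not a biochemical simulation: starting from RAS, a breadth-first search finds everything a signal can reach, then repeats the search with one node "knocked out." The function name reachable and the knocked_out parameter are hypothetical, chosen for illustration.

```python
from collections import deque

# Hypothetical toy pathway: directed edges follow the flow of the signal.
graph = {
    "RAS": ["RAF"],
    "RAF": ["MEK"],
    "MEK": ["MAPK"],
    "MAPK": ["MNK", "RSK", "MYC"],
}


def reachable(g, start, knocked_out=frozenset()):
    """Nodes a signal starting at `start` can reach, skipping knocked-out nodes."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in g.get(node, []):
            if nxt not in seen and nxt not in knocked_out:
                seen.add(nxt)
                queue.append(nxt)
    return seen


baseline = reachable(graph, "RAS")
perturbed = reachable(graph, "RAS", knocked_out={"MEK"})
print(sorted(baseline - perturbed))  # ['MAPK', 'MEK', 'MNK', 'MYC', 'RSK']
```

Knocking out MEK cuts off MEK itself plus everything downstream of it. On a real network with thousands of nodes, this kind of question is exactly what you hand off to a computer.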

Putting it all together, exemplified by Joscha Bach

Here, we will tie a lot of what we've been talking about above together with the concept of computational thinking. This concept was previously described by computer science professor Jeannette Wing in a 2006 essay arguing that it should be a core skill that everyone learns (which I agree with).

The earlier sections of this essay, which go into basic computer science concepts that can help people reason about the world and solve problems, are instances of computational thinking that I think are widely applicable. What I'm going to do in this section is provide an example of a popular AI and cognitive science thought leader who embodies the concept of computational thinking better than anyone I have listened to: Joscha Bach.

When I first heard Joscha Bach on a podcast with Lex Fridman many years ago, I had no idea who he was or how he thought. I think I had randomly stumbled upon it in some reddit thread of "best Lex podcasts." Anyway, I resonated deeply with the way he inherently thinks. He embodied a mindset that had contributed to my successes in the second half of grad school and on into the building of my business, and to my ability to reason about the world in general.

Whatever he's talking about, he translates it into data structures, algorithms, and the stuff of the previous sections of this essay and then attempts to explain them through that lens, not in the sense of "explaining away" but in the sense of grounding these concepts computationally in order to reveal novel lines of inquiry you would not otherwise see. Let me give you a simple example of how he thinks so you can get a feel for what this sounds like, from his podcast with Lex Fridman that I linked above:

“An organism is not a collection of cells; it’s a function that tells cells how to behave. And this function is not implemented as some kind of supernatural thing, like some morphogenetic field, it is an emergent result of the interactions of each cell with each other cell.”

Note the concepts. We have emergence, from my section on Conway's Game of Life, and we have functions, which are from the section on levels of abstraction. We could also come to the insight that we need to reason about the interaction at the cellular level if we're thinking in terms of the "graph" data structure also discussed earlier. Ok, now that you have a bite-sized piece of what Joscha is all about, I'll give you the full quote, so you can see the full force of the computational lens:

For me a very interesting discovery in the last year was the word spirit—because I realized that what “spirit” actually means: It’s an operating system for an autonomous robot. And when the word was invented, people needed this word, but they didn’t have robots that built themselves yet; the only autonomous robots that were known were people, animals, plants, ecosystems, cities and so on. And they all had spirits. And it makes sense to say that a plant is an operating system, right? If you pinch the plant in one area, then it’s going to have repercussions throughout the plant. Everything in the plant is in some sense connected into some global aesthetics, like in other organisms. An organism is not a collection of cells; it’s a function that tells cells how to behave. And this function is not implemented as some kind of supernatural thing, like some morphogenetic field, it is an emergent result of the interactions of each cell with each other cell.

So we're…redefining the concept of spirit in terms of…operating systems. So the operating system of a forest (the coordinated computations that run through it) is the spirit of the forest. And so on. This is pretty deep stuff, and I'll admit that as computationally minded as I am, I had never put the words "spirit" and "operating system" together in my head until I heard Joscha Bach spell it out. This then sets up thinking of organisms as functions that take cells as input, ultimately leading to organismal behavior, which is part of the operating system that is the spirit we know as the biosphere.

I'm not going to sit here and evaluate the truth of any of this. But what I can say is that this computational redefinition of organisms and spirits is absolutely thought-provoking, and could very well lead to novel lines of inquiry that could lead to interesting testable hypotheses that no one would have otherwise come up with. Importantly, it allows us to reason around old concepts like "spirits" that are often otherwise disregarded by biologists. Taken together, the computational lens may allow researchers to uncover insights and connections that were otherwise overlooked by the current lenses through which scientists view the world.

I'll note that this computational lens is not just a set of prepared answers for podcasts or whatever else. I have met Joscha in person and he is as authentic to this way of looking at the world as you're ever going to get (he is also a really cool all-around person).

I listen to Joscha Bach not necessarily because I fully agree with every view he has about how the world works. I listen to him because he embodies the computational lens as well as anyone will be able to, and I have found this type of computational thinking to be immensely useful in both my work life (bioinformatics, running a business) and my personal life. I could go on [3], but you really should just listen to his podcasts with Lex Fridman, which I will link here: part 1, part 2, and part 3.

Computational thinking and your latticework of mental models

Putting it all together, I want to zoom out and note that the computational lens is a powerful lens through which you can view the world and solve important problems. But it is one lens of many. One person who understood this deeply was the late Charlie Munger, Warren Buffett's right-hand man. He saw and acted in the world in terms of a latticework of mental models. He would look at a problem through the lens of an economist and see the network of incentives at play, then through the lens of a biologist and see the natural selection at play, and so on. Munger's success as Buffett's right-hand man (for 60 years), and the billions of dollars they made accordingly, is a testament to this kind of thinking. Munger says:

Have a full kit of tools…go through them in your mind checklist-style…you can never make any explanation that can be made in a more fundamental way in any other way than the most fundamental way. And you always take with full attribution to the most fundamental ideas that you are required to use. When you’re using physics, you say you’re using physics. When you’re using biology, you say you’re using biology.

Accordingly, I am not arguing that computational thinking is the be-all and end-all, from which you will understand the universe and be able to solve every problem. Rather, I am arguing that it is a critically important set of mental models to add to your latticework, especially in a digital age that is increasingly run by bits and code.

All this being said, the actionable advice I would give is to gain a basic understanding of computer science, even if AI automates the whole thing. It doesn't take very long to learn how to think computationally. Fun programming games like Karel the Robot can help you internalize these concepts simply by doing the work. An intro course on Python will teach you the basic data structures, algorithms, and concepts that I still use today. Writing a couple of scripts that do things you care about will put the knowledge into practice, and you'll see what I mean about the intensive practice of the scientific method.

It is my hope that everyone reading this article, ADHD diagnosis or not, gets to learn and practice these principles, even if it's just a few days playing with Karel the Robot before moving on to the next thing on your TODO list. Computational thinking is largely learn-by-doing, so if you don't have much time, I would recommend simply going through a couple of Karel exercises rather than reading Wikipedia articles on each of these concepts.
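To give a flavor of what a Karel exercise teaches, here is a minimal sketch of problem decomposition. It assumes a toy, hypothetical Karel-style simulator written from scratch in plain Python (it is not the Stanford Karel library and uses none of its API). The robot only knows two primitives, `move` and `turn_left`, and every other behavior (here, the illustrative helpers `turn_right` and `climb_step`) has to be built out of those primitives.

```python
# Toy, hypothetical Karel-style simulator for illustration only;
# this is NOT the Stanford Karel library or its API.

DIRECTIONS = ["east", "north", "west", "south"]  # counterclockwise order
STEPS = {"east": (1, 0), "north": (0, 1), "west": (-1, 0), "south": (0, -1)}


class Karel:
    def __init__(self, x=1, y=1, facing="east"):
        self.x, self.y = x, y
        self.facing = facing

    def move(self):
        # Primitive 1: step one cell in the current direction.
        dx, dy = STEPS[self.facing]
        self.x += dx
        self.y += dy

    def turn_left(self):
        # Primitive 2: rotate 90 degrees counterclockwise.
        i = DIRECTIONS.index(self.facing)
        self.facing = DIRECTIONS[(i + 1) % 4]


# Decomposition: new capabilities built only from the two primitives.
def turn_right(karel):
    # Karel has no right turn, but three left turns add up to one.
    for _ in range(3):
        karel.turn_left()


def climb_step(karel):
    # Go up one stair: face north, move up, face east again, move over.
    karel.turn_left()
    karel.move()
    turn_right(karel)
    karel.move()


karel = Karel()
for _ in range(3):
    climb_step(karel)
print(karel.x, karel.y, karel.facing)  # prints "4 4 east"
```

The point is less the code than the habit it builds: once `turn_right` and `climb_step` exist, you stop thinking about individual left turns and start thinking in terms of bigger steps, which is the same move you make when breaking down any messy real-world problem.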

And from there, you can add computational thinking to your latticework of mental models. It is my hope that learning to code will also improve your thinking, as it improved mine.

Relevant links:

How I transitioned from biologist to biology-leveraged bioinformatician: While the current article is a bit more theoretical, this one is more practical. It discusses using Karel the Robot to teach computational thinking concepts like problem decomposition, as well as working with single-cell data.

Footnotes:

[1]

While recursion is a key theme in the book, Hofstadter has complained that many people didn't understand that the crux of the book was actually cognitive science and the nature of intelligence, which prompted him to write subsequent books that were more direct. However, a huge chunk of the book, more than half of it in my recollection, is about recursion and self-reference in the context of Gödel's Incompleteness Theorem. This is what I took from the book, much more than Hofstadter's ideas about the nature of intelligence. Interestingly enough, many of Hofstadter's ideas about intelligence have been proven wrong by current advancements in AI. This in turn prompted Hofstadter to admit in writing that he was wrong about many of his ideas, and that he is worried about how AI is developing. From the linked interview:

I never imagined that computers would rival, let alone surpass, human intelligence. And in principle, I thought they could rival human intelligence. I didn't see any reason that they couldn't. But it seemed to me like it was a goal that was so far away, I wasn't worried about it. But when certain systems started appearing, maybe 20 years ago, they gave me pause. And then this started happening at an accelerating pace, where unreachable goals and things that computers shouldn't be able to do started toppling.

He goes on further to say:

And my whole intellectual edifice, my system of beliefs… It's a very traumatic experience when some of your most core beliefs about the world start collapsing. And especially when you think that human beings are soon going to be eclipsed. It felt as if not only are my belief systems collapsing, but it feels as if the entire human race is going to be eclipsed and left in the dust soon. People ask me, "What do you mean by 'soon'?" And I don't know what I really mean. I don't have any way of knowing. But some part of me says 5 years, some part of me says 20 years, some part of me says, "I don't know, I have no idea." But the progress, the accelerating progress, has been so unexpected, so completely caught me off guard, not only myself but many, many people, that there is a certain kind of terror of an oncoming tsunami that is going to catch all humanity off guard.

Why am I writing this lengthy footnote? Because, in a nutshell, I still think you should read the book: it is about much more than its now-disproven views on the nature of intelligence. In an AI group chat recently, someone asked whether they should still read Gödel, Escher, Bach even though many of its ideas on the nature of intelligence are outdated. In my reply, I explained that the book goes far beyond the nature of intelligence, and that to me it's a joyful philosophical musing on self-reference, recursion, and Gödel's Incompleteness Theorem. As for the rest of the title, Hofstadter discusses these concepts in the context of the artwork of M.C. Escher and the music of Johann Sebastian Bach (as opposed to Joscha Bach, whom I also discuss in this article).

These intellectually stimulating musings are not directly tied to the nature of intelligence, and they have not been shot down by current advancements in AI. Hofstadter does the best job I've come across of discussing these concepts (and I haven't even gotten into the dialogues between Achilles, the Tortoise, and other characters that appear as interludes between chapters; you really need to read the book to see what I'm talking about). So yes, read this book.

[2]

I find myself using igraph's R interface for basic graph modeling. In the past few years, the concept of "knowledge graphs" has become more common, so I use Neo4j (similar to a SQL database, but for graph structures) and its Cypher query language. It's not the only way to do it, but it has worked for me in two paid long-term client engagements so far.

The applications for me have ranged from network modeling (e.g., using random walkers) to building more complex schemas that combine things like protein-protein interactions, protein-drug interactions, GWAS associations, and so on. This is what a knowledge graph is specialized to handle. An example relevant to biotechnology is Hetionet, which is free and public, with an interface that lets you explore it online, at least at the time of writing.
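For readers who have never seen either idea, here is a minimal plain-Python sketch of both: a tiny knowledge graph whose edges carry a relation type, and a simple random walker that counts node visits. It does not use igraph or Neo4j, and the node names (`GENE_A`, `DRUG_X`, `DISEASE_1`, and so on) and relation types are hypothetical placeholders, not real biology and not Hetionet's actual schema. The helper functions are likewise illustrative names, not any library's API.

```python
import random
from collections import defaultdict

# Hypothetical toy knowledge graph: placeholder nodes, not real data.
# Each edge is (source, relation_type, target).
EDGES = [
    ("GENE_A", "interacts_with", "GENE_B"),
    ("GENE_B", "interacts_with", "GENE_C"),
    ("DRUG_X", "targets", "GENE_A"),
    ("DRUG_Y", "targets", "GENE_C"),
    ("GENE_C", "associated_with", "DISEASE_1"),
]


def build_graph(edge_list):
    """Undirected adjacency list; each neighbor entry keeps its relation type."""
    graph = defaultdict(list)
    for src, rel, dst in edge_list:
        graph[src].append((rel, dst))
        graph[dst].append((rel, src))
    return graph


def neighbors_by_relation(graph, node, relation):
    """Schema-style query: neighbors of `node` reached via one relation type."""
    return sorted(dst for rel, dst in graph[node] if rel == relation)


def random_walk_visits(graph, start, steps, seed=0):
    """Simple random walk: count how often each node is visited."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    visits = defaultdict(int)
    node = start
    for _ in range(steps):
        visits[node] += 1
        _, node = rng.choice(graph[node])  # hop to a uniformly random neighbor
    return dict(visits)


graph = build_graph(EDGES)
print(neighbors_by_relation(graph, "GENE_A", "interacts_with"))  # ['GENE_B']
visits = random_walk_visits(graph, "DRUG_X", steps=10_000)
print(sorted(visits, key=visits.get, reverse=True))
```

On a connected undirected graph like this one, a simple random walker's long-run visit frequency is proportional to each node's degree, so well-connected hubs (here `GENE_C`) surface near the top. Real tools add much more (edge weights, restarts, typed traversals in Cypher), but the underlying idea is this small.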

[3]

Ok, I'll go on. His thought process also involves taking concepts from the computational lens and applying them more generally. This is the ethos of Charlie Munger's latticework of mental models, discussed in the final section of this article. As an example, here is one of his tweets from April 27, 2024:

Artificial Intelligence is the only field that has developed formal concepts to define, analyze and naturalize mental states. All other academic disciplines purporting to study the mind tend to be ignorant or dismissive of this, even now that AI’s empirical predictions play out.

So you can see here that he's thinking about AI not necessarily as a tool to speed up his ability to do knowledge work or whatever else. He is interested in AI in terms of concepts it can give him to reason about the human mind.

In another tweet from the same day, he points out that some people hold a sort of anti-computationalism, the opposite of the computational thinking I am advocating for here, and that refusing to think computationally is very limiting when it comes to reasoning about the human mind:

Most thinkers outside of AI and constructive mathematics find the idea that minds are self organizing virtualized computers confusing, unconvincing or insulting. The anti AI sentiment of the wordcel sphere is not just loss of ad revenue but often anti computationalism.

In short, Joscha Bach is an exemplar of what you can start to do if you look at the world through the computational lens. As you can see, it can be a bit off-putting for some. But if you are one of those people who is put off by the idea that the human mind is a self-organized virtualized computer, just remember that this is only part of the latticework of mental models that I talk about in the last section. You can look at the mind through more "classical" lenses too, where it is a bit less cold and calculating.

To get the best understanding of what the mind is (or anything, for that matter), you need to look at it through as many lenses as possible, hence the latticework of mental models. But to hammer the point home, I don't think the computational lens is nearly as utilized as it should be.